Search Results for author: Vladimir Braverman

Found 42 papers, 14 papers with code

Communication-Efficient Federated Learning with Sketching

no code implementations • ICML 2020 • Daniel Rothchild, Ashwinee Panda, Enayat Ullah, Nikita Ivkin, Vladimir Braverman, Joseph Gonzalez, Ion Stoica, Raman Arora

A key insight in the design of FedSketchedSGD is that, because the Count Sketch is linear, momentum and error accumulation can both be carried out within the sketch.
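
The linearity property mentioned in this snippet can be illustrated with a small sketch. This is a toy Count Sketch written for illustration only, not the FedSketchedSGD implementation: the class `CountSketch`, the helper `combine`, the table sizes, and the momentum factor `rho` are all hypothetical choices. It shows only that sketching a momentum-style update gives the same table as applying that update to the sketches themselves.

```python
import random


class CountSketch:
    """Toy Count Sketch: a rows x cols table of signed bucket sums (illustrative)."""

    def __init__(self, rows, cols, dim, seed=0):
        rng = random.Random(seed)
        self.rows, self.cols, self.dim = rows, cols, dim
        # For each row, every coordinate gets a random bucket and a random sign.
        self.bucket = [[rng.randrange(cols) for _ in range(dim)] for _ in range(rows)]
        self.sign = [[rng.choice((-1, 1)) for _ in range(dim)] for _ in range(rows)]

    def sketch(self, vec):
        """Map a dense vector of length dim to its rows x cols sketch table."""
        table = [[0.0] * self.cols for _ in range(self.rows)]
        for r in range(self.rows):
            for i, v in enumerate(vec):
                table[r][self.bucket[r][i]] += self.sign[r][i] * v
        return table


def combine(a, table_a, b, table_b):
    """Return a * table_a + b * table_b, entry by entry."""
    return [[a * x + b * y for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(table_a, table_b)]


cs = CountSketch(rows=3, cols=8, dim=20, seed=1)
grad = [float(i % 5) for i in range(20)]            # stand-in for a gradient
momentum = [float(i % 3) - 1.0 for i in range(20)]  # stand-in for a momentum vector
rho = 0.5                                           # assumed momentum factor

# Momentum update on dense vectors, then sketched ...
dense_update = [rho * m + g for m, g in zip(momentum, grad)]
sketch_of_update = cs.sketch(dense_update)

# ... versus the same update applied directly to the two sketches.
update_of_sketches = combine(rho, cs.sketch(momentum), 1.0, cs.sketch(grad))

same = all(abs(x - y) < 1e-9
           for row_u, row_v in zip(sketch_of_update, update_of_sketches)
           for x, y in zip(row_u, row_v))
print(same)
```

Because the sketch is a fixed linear map, any linear recurrence such as momentum or error accumulation commutes with it, which is why those quantities never need to be stored densely.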

KIVI: A Tuning-Free Asymmetric 2bit Quantization for KV Cache

1 code implementation • 5 Feb 2024 • Zirui Liu, Jiayi Yuan, Hongye Jin, Shaochen Zhong, Zhaozhuo Xu, Vladimir Braverman, Beidi Chen, Xia Hu

This memory demand increases with larger batch sizes and longer context lengths.

ORBSLAM3-Enhanced Autonomous Toy Drones: Pioneering Indoor Exploration

no code implementations • 20 Dec 2023 • Murad Tukan, Fares Fares, Yotam Grufinkle, Ido Talmor, Loay Mualem, Vladimir Braverman, Dan Feldman

In response to this formidable challenge, we introduce a real-time autonomous indoor exploration system tailored for drones equipped with a monocular \emph{RGB} camera.

How Many Pretraining Tasks Are Needed for In-Context Learning of Linear Regression?

no code implementations • 12 Oct 2023 • Jingfeng Wu, Difan Zou, Zixiang Chen, Vladimir Braverman, Quanquan Gu, Peter L. Bartlett

Transformers pretrained on diverse tasks exhibit remarkable in-context learning (ICL) capabilities, enabling them to solve unseen tasks solely based on input contexts without adjusting model parameters.

Scaling Distributed Multi-task Reinforcement Learning with Experience Sharing

no code implementations • 11 Jul 2023 • Sanae Amani, Khushbu Pahwa, Vladimir Braverman, Lin F. Yang

Our research demonstrates that to achieve $\epsilon$-optimal policies for all $M$ tasks, a single agent using DistMT-LSVI needs to run a total number of episodes that is at most $\tilde{\mathcal{O}}({d^3H^6(\epsilon^{-2}+c_{\rm sep}^{-2})}\cdot M/N)$, where $c_{\rm sep}>0$ is a constant representing task separability, $H$ is the horizon of each episode, and $d$ is the feature dimension of the dynamics and rewards.

Private Federated Frequency Estimation: Adapting to the Hardness of the Instance

no code implementations • NeurIPS 2023 • Jingfeng Wu, Wennan Zhu, Peter Kairouz, Vladimir Braverman

For single-round FFE, it is known that count sketching is nearly information-theoretically optimal for achieving the fundamental accuracy-communication trade-offs [Chen et al., 2022].

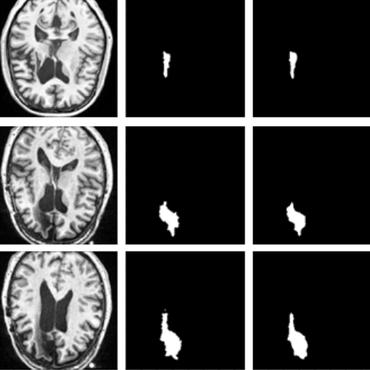

A framework for dynamically training and adapting deep reinforcement learning models to different, low-compute, and continuously changing radiology deployment environments

no code implementations • 8 Jun 2023 • Guangyao Zheng, Shuhao Lai, Vladimir Braverman, Michael A. Jacobs, Vishwa S. Parekh

While Deep Reinforcement Learning has been widely researched in medical imaging, the training and deployment of these models usually require powerful GPUs.

Multi-environment lifelong deep reinforcement learning for medical imaging

no code implementations • 31 May 2023 • Guangyao Zheng, Shuhao Lai, Vladimir Braverman, Michael A. Jacobs, Vishwa S. Parekh

Deep reinforcement learning (DRL) is increasingly being explored in medical imaging.

AutoCoreset: An Automatic Practical Coreset Construction Framework

1 code implementation • 19 May 2023 • Alaa Maalouf, Murad Tukan, Vladimir Braverman, Daniela Rus

A coreset is a tiny weighted subset of an input set that closely resembles the loss function with respect to a certain set of queries.

Fixed Design Analysis of Regularization-Based Continual Learning

no code implementations • 17 Mar 2023 • Haoran Li, Jingfeng Wu, Vladimir Braverman

We consider a continual learning (CL) problem with two linear regression tasks in the fixed design setting, where the feature vectors are assumed fixed and the labels are assumed to be random variables.

Asynchronous Decentralized Federated Lifelong Learning for Landmark Localization in Medical Imaging

no code implementations • 12 Mar 2023 • Guangyao Zheng, Michael A. Jacobs, Vladimir Braverman, Vishwa S. Parekh

Federated learning is a recent development in the machine learning area that allows a system of devices to train on one or more tasks without sharing their data to a single location or device.

Provable Data Subset Selection For Efficient Neural Network Training

1 code implementation • 9 Mar 2023 • Murad Tukan, Samson Zhou, Alaa Maalouf, Daniela Rus, Vladimir Braverman, Dan Feldman

In this paper, we introduce the first algorithm to construct coresets for \emph{RBFNNs}, i.e., small weighted subsets that approximate the loss of the input data on any radial basis function network and thus approximate any function defined by an \emph{RBFNN} on the larger input data.

Finite-Sample Analysis of Learning High-Dimensional Single ReLU Neuron

no code implementations • 3 Mar 2023 • Jingfeng Wu, Difan Zou, Zixiang Chen, Vladimir Braverman, Quanquan Gu, Sham M. Kakade

On the other hand, we provide some negative results for stochastic gradient descent (SGD) for ReLU regression with symmetric Bernoulli data: if the model is well-specified, the excess risk of SGD is provably no better than that of GLM-tron ignoring constant factors, for each problem instance; and in the noiseless case, GLM-tron can achieve a small risk while SGD unavoidably suffers from a constant risk in expectation.

Selective experience replay compression using coresets for lifelong deep reinforcement learning in medical imaging

no code implementations • 22 Feb 2023 • Guangyao Zheng, Samson Zhou, Vladimir Braverman, Michael A. Jacobs, Vishwa S. Parekh

Selective experience replay aims to recount selected experiences from previous tasks to avoid catastrophic forgetting.

From Local to Global: Spectral-Inspired Graph Neural Networks

1 code implementation • 24 Sep 2022 • Ningyuan Huang, Soledad Villar, Carey E. Priebe, Da Zheng, Chengyue Huang, Lin Yang, Vladimir Braverman

Graph Neural Networks (GNNs) are powerful deep learning methods for Non-Euclidean data.

The Power and Limitation of Pretraining-Finetuning for Linear Regression under Covariate Shift

no code implementations • 3 Aug 2022 • Jingfeng Wu, Difan Zou, Vladimir Braverman, Quanquan Gu, Sham M. Kakade

Our bounds suggest that for a large class of linear regression instances, transfer learning with $O(N^2)$ source data (and scarce or no target data) is as effective as supervised learning with $N$ target data.

Pretrained Models for Multilingual Federated Learning

1 code implementation • NAACL 2022 • Orion Weller, Marc Marone, Vladimir Braverman, Dawn Lawrie, Benjamin Van Durme

Since the advent of Federated Learning (FL), research has applied these methods to natural language processing (NLP) tasks.

Sparsity and Heterogeneous Dropout for Continual Learning in the Null Space of Neural Activations

no code implementations • 12 Mar 2022 • Ali Abbasi, Parsa Nooralinejad, Vladimir Braverman, Hamed Pirsiavash, Soheil Kolouri

Overcoming catastrophic forgetting in deep neural networks has become an active field of research in recent years.

New Coresets for Projective Clustering and Applications

1 code implementation • 8 Mar 2022 • Murad Tukan, Xuan Wu, Samson Zhou, Vladimir Braverman, Dan Feldman

$(j, k)$-projective clustering is the natural generalization of the family of $k$-clustering and $j$-subspace clustering problems.

Risk Bounds of Multi-Pass SGD for Least Squares in the Interpolation Regime

no code implementations • 7 Mar 2022 • Difan Zou, Jingfeng Wu, Vladimir Braverman, Quanquan Gu, Sham M. Kakade

Stochastic gradient descent (SGD) has achieved great success due to its superior performance in both optimization and generalization.

Cross-Domain Federated Learning in Medical Imaging

no code implementations • 18 Dec 2021 • Vishwa S Parekh, Shuhao Lai, Vladimir Braverman, Jeff Leal, Steven Rowe, Jay J Pillai, Michael A Jacobs

Federated learning is increasingly being explored in the field of medical imaging to train deep learning models on large scale datasets distributed across different data centers while preserving privacy by avoiding the need to transfer sensitive patient information.

Last Iterate Risk Bounds of SGD with Decaying Stepsize for Overparameterized Linear Regression

no code implementations • 12 Oct 2021 • Jingfeng Wu, Difan Zou, Vladimir Braverman, Quanquan Gu, Sham M. Kakade

In this paper, we provide a problem-dependent analysis on the last iterate risk bounds of SGD with decaying stepsize, for (overparameterized) linear regression problems.

Gap-Dependent Unsupervised Exploration for Reinforcement Learning

1 code implementation • 11 Aug 2021 • Jingfeng Wu, Vladimir Braverman, Lin F. Yang

In particular, for an unknown finite-horizon Markov decision process, the algorithm takes only $\widetilde{\mathcal{O}} (1/\epsilon \cdot (H^3SA / \rho + H^4 S^2 A) )$ episodes of exploration, and is able to obtain an $\epsilon$-optimal policy for a post-revealed reward with sub-optimality gap at least $\rho$, where $S$ is the number of states, $A$ is the number of actions, and $H$ is the length of the horizon, obtaining a nearly \emph{quadratic saving} in terms of $\epsilon$.

The Benefits of Implicit Regularization from SGD in Least Squares Problems

no code implementations • NeurIPS 2021 • Difan Zou, Jingfeng Wu, Vladimir Braverman, Quanquan Gu, Dean P. Foster, Sham M. Kakade

Stochastic gradient descent (SGD) exhibits strong algorithmic regularization effects in practice, which has been hypothesized to play an important role in the generalization of modern machine learning approaches.

Adversarial Robustness of Streaming Algorithms through Importance Sampling

no code implementations • NeurIPS 2021 • Vladimir Braverman, Avinatan Hassidim, Yossi Matias, Mariano Schain, Sandeep Silwal, Samson Zhou

In this paper, we introduce adversarially robust streaming algorithms for central machine learning and algorithmic tasks, such as regression and clustering, as well as their more general counterparts, subspace embedding, low-rank approximation, and coreset construction.

Lifelong Learning with Sketched Structural Regularization

no code implementations • 17 Apr 2021 • Haoran Li, Aditya Krishnan, Jingfeng Wu, Soheil Kolouri, Praveen K. Pilly, Vladimir Braverman

In practice and due to computational constraints, most SR methods crudely approximate the importance matrix by its diagonal.

Benign Overfitting of Constant-Stepsize SGD for Linear Regression

no code implementations • 23 Mar 2021 • Difan Zou, Jingfeng Wu, Vladimir Braverman, Quanquan Gu, Sham M. Kakade

More specifically, for SGD with iterate averaging, we demonstrate the sharpness of the established excess risk bound by proving a matching lower bound (up to constant factors).

Accommodating Picky Customers: Regret Bound and Exploration Complexity for Multi-Objective Reinforcement Learning

1 code implementation • NeurIPS 2021 • Jingfeng Wu, Vladimir Braverman, Lin F. Yang

We formalize this problem as an episodic learning problem on a Markov decision process, where transitions are unknown and a reward function is the inner product of a preference vector with pre-specified multi-objective reward functions.

Multi-Objective Reinforcement Learning

reinforcement-learning

Sketch and Scale: Geo-distributed tSNE and UMAP

no code implementations • 11 Nov 2020 • Viska Wei, Nikita Ivkin, Vladimir Braverman, Alexander Szalay

Running machine learning analytics over geographically distributed datasets is a rapidly arising problem in the world of data management policies ensuring privacy and data security.

Direction Matters: On the Implicit Bias of Stochastic Gradient Descent with Moderate Learning Rate

no code implementations • ICLR 2021 • Jingfeng Wu, Difan Zou, Vladimir Braverman, Quanquan Gu

Understanding the algorithmic bias of \emph{stochastic gradient descent} (SGD) is one of the key challenges in modern machine learning and deep learning theory.

Data-Independent Structured Pruning of Neural Networks via Coresets

no code implementations • 19 Aug 2020 • Ben Mussay, Daniel Feldman, Samson Zhou, Vladimir Braverman, Margarita Osadchy

Our method is based on the coreset framework and it approximates the output of a layer of neurons/filters by a coreset of neurons/filters in the previous layer and discards the rest.

Obtaining Adjustable Regularization for Free via Iterate Averaging

1 code implementation • ICML 2020 • Jingfeng Wu, Vladimir Braverman, Lin F. Yang

In sum, we obtain adjustable regularization for free for a large class of optimization problems and resolve an open question raised by Neu and Rosasco.

FetchSGD: Communication-Efficient Federated Learning with Sketching

no code implementations • 15 Jul 2020 • Daniel Rothchild, Ashwinee Panda, Enayat Ullah, Nikita Ivkin, Ion Stoica, Vladimir Braverman, Joseph Gonzalez, Raman Arora

A key insight in the design of FetchSGD is that, because the Count Sketch is linear, momentum and error accumulation can both be carried out within the sketch.

Multitask radiological modality invariant landmark localization using deep reinforcement learning

2 code implementations • MIDL 2019 • Vishwa S. Parekh, Alex E. Bocchieri, Vladimir Braverman, Michael A. Jacobs

As a result, to develop a radiological decision support system, it would need to be equipped with potentially hundreds of deep learning models with each model trained for a specific task or organ.

Multiparametric Deep Learning Tissue Signatures for Muscular Dystrophy: Preliminary Results

no code implementations • 1 Aug 2019 • Alex E. Bocchieri, Vishwa S. Parekh, Kathryn R. Wagner, Shivani Ahlawat, Vladimir Braverman, Doris G. Leung, Michael A. Jacobs

A current clinical challenge is identifying limb girdle muscular dystrophy 2I (LGMD2I) tissue changes in the thighs, in particular, separating fat, fat-infiltrated muscle, and muscle tissue.

Data-Independent Neural Pruning via Coresets

no code implementations • ICLR 2020 • Ben Mussay, Margarita Osadchy, Vladimir Braverman, Samson Zhou, Dan Feldman

We propose the first efficient, data-independent neural pruning algorithm with a provable trade-off between its compression rate and the approximation error for any future test sample.

Streaming Quantiles Algorithms with Small Space and Update Time

1 code implementation • 29 Jun 2019 • Nikita Ivkin, Edo Liberty, Kevin Lang, Zohar Karnin, Vladimir Braverman

Approximating quantiles and distributions over streaming data has been studied for roughly two decades now.

On the Noisy Gradient Descent that Generalizes as SGD

1 code implementation • ICML 2020 • Jingfeng Wu, Wenqing Hu, Haoyi Xiong, Jun Huan, Vladimir Braverman, Zhanxing Zhu

The gradient noise of SGD is considered to play a central role in the observed strong generalization abilities of deep learning.

Communication-efficient distributed SGD with Sketching

2 code implementations • NeurIPS 2019 • Nikita Ivkin, Daniel Rothchild, Enayat Ullah, Vladimir Braverman, Ion Stoica, Raman Arora

Large-scale distributed training of neural networks is often limited by network bandwidth, wherein the communication time overwhelms the local computation time.

Coresets for Ordered Weighted Clustering

1 code implementation • 11 Mar 2019 • Vladimir Braverman, Shaofeng H.-C. Jiang, Robert Krauthgamer, Xuan Wu

We design coresets for Ordered k-Median, a generalization of classical clustering problems such as k-Median and k-Center, that offers a more flexible data analysis, like easily combining multiple objectives (e.g., to increase fairness or for Pareto optimization).

Data Structures and Algorithms

Differentially Private Robust Low-Rank Approximation

no code implementations • NeurIPS 2018 • Raman Arora, Vladimir Braverman, Jalaj Upadhyay

In this paper, we study the following robust low-rank matrix approximation problem: given a matrix $A \in \R^{n \times d}$, find a rank-$k$ matrix $B$, while satisfying differential privacy, such that $ \norm{ A - B }_p \leq \alpha \mathsf{OPT}_k(A) + \tau,$ where $\norm{ M }_p$ is the entry-wise $\ell_p$-norm and $\mathsf{OPT}_k(A):=\min_{\mathsf{rank}(X) \leq k} \norm{ A - X}_p$.

Online Factorization and Partition of Complex Networks From Random Walks

no code implementations • 22 May 2017 • Lin F. Yang, Vladimir Braverman, Tuo Zhao, Mengdi Wang

We formulate this into a nonconvex stochastic factorization problem and propose an efficient and scalable stochastic generalized Hebbian algorithm.