Search Results for author: Seoung Wug Oh

Found 33 papers, 21 papers with code

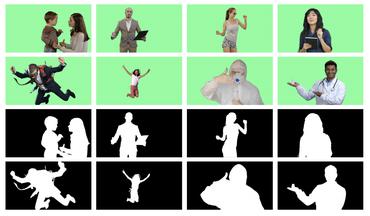

MaGGIe: Masked Guided Gradual Human Instance Matting

no code implementations • 24 Apr 2024 • Chuong Huynh, Seoung Wug Oh, Abhinav Shrivastava, Joon-Young Lee

Human matting is a foundation task in image and video processing, where human foreground pixels are extracted from the input.

VISAGE: Video Instance Segmentation with Appearance-Guided Enhancement

1 code implementation • 8 Dec 2023 • Hanjung Kim, Jaehyun Kang, Miran Heo, Sukjun Hwang, Seoung Wug Oh, Seon Joo Kim

By effectively resolving the over-reliance on location information, we achieve state-of-the-art results on YouTube-VIS 2019/2021 and Occluded VIS (OVIS).

Putting the Object Back into Video Object Segmentation

1 code implementation • 19 Oct 2023 • Ho Kei Cheng, Seoung Wug Oh, Brian Price, Joon-Young Lee, Alexander Schwing

We present Cutie, a video object segmentation (VOS) network with object-level memory reading, which puts the object representation from memory back into the video object segmentation result.

Ranked #1 on Semi-Supervised Video Object Segmentation on MOSE

Tracking Anything with Decoupled Video Segmentation

1 code implementation • ICCV 2023 • Ho Kei Cheng, Seoung Wug Oh, Brian Price, Alexander Schwing, Joon-Young Lee

To 'track anything' without training on video data for every individual task, we develop a decoupled video segmentation approach (DEVA), composed of task-specific image-level segmentation and class/task-agnostic bi-directional temporal propagation.

Ranked #1 on Unsupervised Video Object Segmentation on DAVIS 2016 val (using extra training data)

Open-Vocabulary Video Segmentation

Open-World Video Segmentation

+7

In-N-Out: Faithful 3D GAN Inversion with Volumetric Decomposition for Face Editing

no code implementations • 9 Feb 2023 • Yiran Xu, Zhixin Shu, Cameron Smith, Seoung Wug Oh, Jia-Bin Huang

3D-aware GANs offer new capabilities for view synthesis while preserving the editing functionalities of their 2D counterparts.

Mask-Guided Matting in the Wild

no code implementations • CVPR 2023 • KwanYong Park, Sanghyun Woo, Seoung Wug Oh, In So Kweon, Joon-Young Lee

Mask-guided matting has shown great practicality compared to traditional trimap-based methods.

Bridging Images and Videos: A Simple Learning Framework for Large Vocabulary Video Object Detection

no code implementations • 20 Dec 2022 • Sanghyun Woo, KwanYong Park, Seoung Wug Oh, In So Kweon, Joon-Young Lee

First, LVIS provides no tracking supervision, which leads to inconsistent learning of detection (with LVIS and TAO) and tracking (only with TAO).

Tracking by Associating Clips

no code implementations • 20 Dec 2022 • Sanghyun Woo, KwanYong Park, Seoung Wug Oh, In So Kweon, Joon-Young Lee

The tracking-by-detection paradigm today has become the dominant method for multi-object tracking and works by detecting objects in each frame and then performing data association across frames.

A Generalized Framework for Video Instance Segmentation

1 code implementation • CVPR 2023 • Miran Heo, Sukjun Hwang, Jeongseok Hyun, Hanjung Kim, Seoung Wug Oh, Joon-Young Lee, Seon Joo Kim

Notably, we greatly outperform the state-of-the-art on the long VIS benchmark (OVIS), improving by 5.6 AP with a ResNet-50 backbone.

Ranked #6 on Video Instance Segmentation on YouTube-VIS 2021 (using extra training data)

Per-Clip Video Object Segmentation

1 code implementation • CVPR 2022 • KwanYong Park, Sanghyun Woo, Seoung Wug Oh, In So Kweon, Joon-Young Lee

In this per-clip inference scheme, we update the memory at an interval and simultaneously process a set of consecutive frames (i.e., a clip) between the memory updates.

One-Trimap Video Matting

1 code implementation • 27 Jul 2022 • Hongje Seong, Seoung Wug Oh, Brian Price, Euntai Kim, Joon-Young Lee

A key aspect of OTVM is the joint modeling of trimap propagation and alpha prediction.

Error Compensation Framework for Flow-Guided Video Inpainting

no code implementations • 21 Jul 2022 • Jaeyeon Kang, Seoung Wug Oh, Seon Joo Kim

The key to video inpainting is to use correlation information from as many reference frames as possible.

VITA: Video Instance Segmentation via Object Token Association

1 code implementation • 9 Jun 2022 • Miran Heo, Sukjun Hwang, Seoung Wug Oh, Joon-Young Lee, Seon Joo Kim

Specifically, we use an image object detector as a means of distilling object-specific contexts into object tokens.

Ranked #11 on Video Instance Segmentation on YouTube-VIS 2021 (using extra training data)

Cannot See the Forest for the Trees: Aggregating Multiple Viewpoints to Better Classify Objects in Videos

1 code implementation • CVPR 2022 • Sukjun Hwang, Miran Heo, Seoung Wug Oh, Seon Joo Kim

The set classifier can be plugged into existing object trackers and substantially improves the performance of long-tailed object tracking.

VISOLO: Grid-Based Space-Time Aggregation for Efficient Online Video Instance Segmentation

1 code implementation • CVPR 2022 • Su Ho Han, Sukjun Hwang, Seoung Wug Oh, Yeonchool Park, Hyunwoo Kim, Min-Jung Kim, Seon Joo Kim

We also introduce cooperatively operating modules that aggregate information from available frames, in order to enrich the features for all subtasks in VIS.

Hierarchical Memory Matching Network for Video Object Segmentation

1 code implementation • ICCV 2021 • Hongje Seong, Seoung Wug Oh, Joon-Young Lee, Seongwon Lee, Suhyeon Lee, Euntai Kim

Based on a recent memory-based method [33], we propose two advanced memory read modules that enable us to perform memory reading in multiple scales while exploiting temporal smoothness.

Tackling the Ill-Posedness of Super-Resolution Through Adaptive Target Generation

1 code implementation • CVPR 2021 • Younghyun Jo, Seoung Wug Oh, Peter Vajda, Seon Joo Kim

By the one-to-many nature of the super-resolution (SR) problem, a single low-resolution (LR) image can be mapped to many high-resolution (HR) images.

Ranked #3 on Blind Super-Resolution on DIV2KRK - 4x upscaling

Video Instance Segmentation using Inter-Frame Communication Transformers

1 code implementation • NeurIPS 2021 • Sukjun Hwang, Miran Heo, Seoung Wug Oh, Seon Joo Kim

We propose a novel end-to-end solution for video instance segmentation (VIS) based on transformers.

Ranked #32 on Video Instance Segmentation on YouTube-VIS validation

Polygonal Point Set Tracking

1 code implementation • CVPR 2021 • Gunhee Nam, Miran Heo, Seoung Wug Oh, Joon-Young Lee, Seon Joo Kim

Since the existing datasets are not suitable to validate our method, we build a new polygonal point set tracking dataset and demonstrate the superior performance of our method over the baselines and existing contour-based VOS methods.

Exemplar-Based Open-Set Panoptic Segmentation Network

3 code implementations • CVPR 2021 • Jaedong Hwang, Seoung Wug Oh, Joon-Young Lee, Bohyung Han

We extend panoptic segmentation to the open-world and introduce an open-set panoptic segmentation (OPS) task.

Single-shot Path Integrated Panoptic Segmentation

no code implementations • 3 Dec 2020 • Sukjun Hwang, Seoung Wug Oh, Seon Joo Kim

Panoptic segmentation, which is a novel task of unifying instance segmentation and semantic segmentation, has attracted a lot of attention lately.

Cross-Identity Motion Transfer for Arbitrary Objects through Pose-Attentive Video Reassembling

no code implementations • ECCV 2020 • Subin Jeon, Seonghyeon Nam, Seoung Wug Oh, Seon Joo Kim

To reduce the training-testing discrepancy of the self-supervised learning, a novel cross-identity training scheme is additionally introduced.

Deep Space-Time Video Upsampling Networks

1 code implementation • ECCV 2020 • Jaeyeon Kang, Younghyun Jo, Seoung Wug Oh, Peter Vajda, Seon Joo Kim

Video super-resolution (VSR) and frame interpolation (FI) are traditional computer vision problems, and their performance has recently been improving through the incorporation of deep learning.

Learning the Loss Functions in a Discriminative Space for Video Restoration

no code implementations • 20 Mar 2020 • Younghyun Jo, Jaeyeon Kang, Seoung Wug Oh, Seonghyeon Nam, Peter Vajda, Seon Joo Kim

Our framework is similar to GANs in that we iteratively train two networks - a generator and a loss network.

DMV: Visual Object Tracking via Part-level Dense Memory and Voting-based Retrieval

no code implementations • 20 Mar 2020 • Gunhee Nam, Seoung Wug Oh, Joon-Young Lee, Seon Joo Kim

We propose a novel memory-based tracker via part-level dense memory and voting-based retrieval, called DMV.

Copy-and-Paste Networks for Deep Video Inpainting

1 code implementation • ICCV 2019 • Sungho Lee, Seoung Wug Oh, DaeYeun Won, Seon Joo Kim

We propose a novel DNN-based framework called the Copy-and-Paste Networks for video inpainting that takes advantage of additional information in other frames of the video.

Ranked #6 on Video Inpainting on DAVIS

Onion-Peel Networks for Deep Video Completion

1 code implementation • ICCV 2019 • Seoung Wug Oh, Sungho Lee, Joon-Young Lee, Seon Joo Kim

Given a set of reference images and a target image with holes, our network fills the holes by referring to the contents of the reference images.

Fast User-Guided Video Object Segmentation by Interaction-and-Propagation Networks

1 code implementation • CVPR 2019 • Seoung Wug Oh, Joon-Young Lee, Ning Xu, Seon Joo Kim

We propose a new multi-round training scheme for the interactive video object segmentation so that the networks can learn how to understand the user's intention and update incorrect estimations during the training.

Ranked #6 on Interactive Video Object Segmentation on DAVIS 2017 (AUC-J metric)

Video Object Segmentation using Space-Time Memory Networks

3 code implementations • ICCV 2019 • Seoung Wug Oh, Joon-Young Lee, Ning Xu, Seon Joo Kim

In our framework, the past frames with object masks form an external memory, and the current frame as the query is segmented using the mask information in the memory.

Ranked #4 on Interactive Video Object Segmentation on DAVIS 2017 (using extra training data)

Deep Video Super-Resolution Network Using Dynamic Upsampling Filters Without Explicit Motion Compensation

1 code implementation • CVPR 2018 • Younghyun Jo, Seoung Wug Oh, Jaeyeon Kang, Seon Joo Kim

We propose a novel end-to-end deep neural network that generates dynamic upsampling filters and a residual image, which are computed depending on the local spatio-temporal neighborhood of each pixel to avoid explicit motion compensation.

Ranked #6 on Video Super-Resolution on Vid4 - 4x upscaling

Fast Video Object Segmentation by Reference-Guided Mask Propagation

2 code implementations • CVPR 2018 • Seoung Wug Oh, Joon-Young Lee, Kalyan Sunkavalli, Seon Joo Kim

We validate our method on four benchmark sets that cover single and multiple object segmentation.

Approaching the Computational Color Constancy as a Classification Problem through Deep Learning

no code implementations • 29 Aug 2016 • Seoung Wug Oh, Seon Joo Kim

Computational color constancy refers to the problem of computing the illuminant color so that the images of a scene under varying illumination can be normalized to an image under the canonical illumination.

Do It Yourself Hyperspectral Imaging With Everyday Digital Cameras

no code implementations • CVPR 2016 • Seoung Wug Oh, Michael S. Brown, Marc Pollefeys, Seon Joo Kim

In particular, due to the differences in spectral sensitivities of the cameras, different cameras yield different RGB measurements for the same spectral signal.