Search Results for author: Xingjun Ma

Found 71 papers, 38 papers with code

Exploring the Vulnerability of Natural Language Processing Models via Universal Adversarial Texts

1 code implementation • ALTA 2021 • Xinzhe Li, Ming Liu, Xingjun Ma, Longxiang Gao

Universal adversarial texts (UATs) refer to short pieces of text units that can largely affect the predictions of NLP models.

The Dog Walking Theory: Rethinking Convergence in Federated Learning

no code implementations • 18 Apr 2024 • Kun Zhai, Yifeng Gao, Xingjun Ma, Difan Zou, Guangnan Ye, Yu-Gang Jiang

In this paper, we study the convergence of FL on non-IID data and propose a novel Dog Walking Theory to formulate and identify the missing element in existing research.

The Double-Edged Sword of Input Perturbations to Robust Accurate Fairness

no code implementations • 1 Apr 2024 • Xuran Li, Peng Wu, Yanting Chen, Xingjun Ma, Zhen Zhang, Kaixiang Dong

Deep neural networks (DNNs) are known to be sensitive to adversarial input perturbations, leading to a reduction in either prediction accuracy or individual fairness.

Whose Side Are You On? Investigating the Political Stance of Large Language Models

1 code implementation • 15 Mar 2024 • Pagnarasmey Pit, Xingjun Ma, Mike Conway, Qingyu Chen, James Bailey, Henry Pit, Putrasmey Keo, Watey Diep, Yu-Gang Jiang

Large Language Models (LLMs) have gained significant popularity for their application in various everyday tasks such as text generation, summarization, and information retrieval.

Hufu: A Modality-Agnostic Watermarking System for Pre-Trained Transformers via Permutation Equivariance

no code implementations • 9 Mar 2024 • Hengyuan Xu, Liyao Xiang, Xingjun Ma, Borui Yang, Baochun Li

The permutation equivariance ensures minimal interference between these two sets of model weights and thus high fidelity on downstream tasks.

Unlearnable Examples For Time Series

no code implementations • 3 Feb 2024 • Yujing Jiang, Xingjun Ma, Sarah Monazam Erfani, James Bailey

In this work, we introduce the first UE generation method to protect time series data from unauthorized training by deep learning models.

Multi-Trigger Backdoor Attacks: More Triggers, More Threats

no code implementations • 27 Jan 2024 • Yige Li, Xingjun Ma, Jiabo He, Hanxun Huang, Yu-Gang Jiang

Arguably, real-world backdoor attacks can be much more complex, e.g., the existence of multiple adversaries for the same dataset if it is of high value.

LDReg: Local Dimensionality Regularized Self-Supervised Learning

1 code implementation • 19 Jan 2024 • Hanxun Huang, Ricardo J. G. B. Campello, Sarah Monazam Erfani, Xingjun Ma, Michael E. Houle, James Bailey

Representations learned via self-supervised learning (SSL) can be susceptible to dimensional collapse, where the learned representation subspace is of extremely low dimensionality and thus fails to represent the full data distribution and modalities.

End-to-End Anti-Backdoor Learning on Images and Time Series

no code implementations • 6 Jan 2024 • Yujing Jiang, Xingjun Ma, Sarah Monazam Erfani, Yige Li, James Bailey

Backdoor attacks present a substantial security concern for deep learning models, especially those utilized in applications critical to safety and security.

Adversarial Prompt Tuning for Vision-Language Models

1 code implementation • 19 Nov 2023 • Jiaming Zhang, Xingjun Ma, Xin Wang, Lingyu Qiu, Jiaqi Wang, Yu-Gang Jiang, Jitao Sang

With the rapid advancement of multimodal learning, pre-trained Vision-Language Models (VLMs) such as CLIP have demonstrated remarkable capacities in bridging the gap between visual and language modalities.

Fake Alignment: Are LLMs Really Aligned Well?

1 code implementation • 10 Nov 2023 • Yixu Wang, Yan Teng, Kexin Huang, Chengqi Lyu, Songyang Zhang, Wenwei Zhang, Xingjun Ma, Yu-Gang Jiang, Yu Qiao, Yingchun Wang

The growing awareness of safety concerns in large language models (LLMs) has sparked considerable interest in the evaluation of safety.

On the Importance of Spatial Relations for Few-shot Action Recognition

no code implementations • 14 Aug 2023 • Yilun Zhang, Yuqian Fu, Xingjun Ma, Lizhe Qi, Jingjing Chen, Zuxuan Wu, Yu-Gang Jiang

We are thus motivated to investigate the importance of spatial relations and propose a more accurate few-shot action recognition method that leverages both spatial and temporal information.

Learning from Heterogeneity: A Dynamic Learning Framework for Hypergraphs

no code implementations • 7 Jul 2023 • Tiehua Zhang, Yuze Liu, Zhishu Shen, Xingjun Ma, Xin Chen, Xiaowei Huang, Jun Yin, Jiong Jin

Graph neural network (GNN) has gained increasing popularity in recent years owing to its capability and flexibility in modeling complex graph structure data.

Reconstructive Neuron Pruning for Backdoor Defense

1 code implementation • 24 May 2023 • Yige Li, Xixiang Lyu, Xingjun Ma, Nodens Koren, Lingjuan Lyu, Bo Li, Yu-Gang Jiang

Specifically, RNP first unlearns the neurons by maximizing the model's error on a small subset of clean samples and then recovers the neurons by minimizing the model's error on the same data.

IMAP: Intrinsically Motivated Adversarial Policy

no code implementations • 4 May 2023 • Xiang Zheng, Xingjun Ma, Shengjie Wang, Xinyu Wang, Chao Shen, Cong Wang

Our experiments validate the effectiveness of the four types of adversarial intrinsic regularizers and BR in enhancing black-box adversarial policy learning across a variety of environments.

Distilling Cognitive Backdoor Patterns within an Image

1 code implementation • 26 Jan 2023 • Hanxun Huang, Xingjun Ma, Sarah Erfani, James Bailey

We conduct extensive experiments to show that CD can robustly detect a wide range of advanced backdoor attacks.

Unlearnable Clusters: Towards Label-agnostic Unlearnable Examples

1 code implementation • CVPR 2023 • Jiaming Zhang, Xingjun Ma, Qi Yi, Jitao Sang, Yu-Gang Jiang, YaoWei Wang, Changsheng Xu

Furthermore, we propose to leverage Vision-and-Language Pre-trained Models (VLPMs) like CLIP as the surrogate model to improve the transferability of the crafted UCs to diverse domains.

CIM: Constrained Intrinsic Motivation for Sparse-Reward Continuous Control

no code implementations • 28 Nov 2022 • Xiang Zheng, Xingjun Ma, Cong Wang

Intrinsic motivation is a promising exploration technique for solving reinforcement learning tasks with sparse or absent extrinsic rewards.

Backdoor Attacks on Time Series: A Generative Approach

1 code implementation • 15 Nov 2022 • Yujing Jiang, Xingjun Ma, Sarah Monazam Erfani, James Bailey

We find that, compared to images, it can be more challenging to achieve the two goals on time series.

Fine-mixing: Mitigating Backdoors in Fine-tuned Language Models

1 code implementation • 18 Oct 2022 • Zhiyuan Zhang, Lingjuan Lyu, Xingjun Ma, Chenguang Wang, Xu Sun

In this work, we take the first step to exploit the pre-trained (unfine-tuned) weights to mitigate backdoors in fine-tuned language models.

Transferable Unlearnable Examples

1 code implementation • 18 Oct 2022 • Jie Ren, Han Xu, Yuxuan Wan, Xingjun Ma, Lichao Sun, Jiliang Tang

The unlearnable strategies have been introduced to prevent third parties from training on the data without permission.

Backdoor Attacks on Crowd Counting

1 code implementation • 12 Jul 2022 • Yuhua Sun, Tailai Zhang, Xingjun Ma, Pan Zhou, Jian Lou, Zichuan Xu, Xing Di, Yu Cheng, Lichao

In this paper, we propose two novel Density Manipulation Backdoor Attacks (DMBA$^{-}$ and DMBA$^{+}$) to attack the model to produce arbitrarily large or small density estimations.

CalFAT: Calibrated Federated Adversarial Training with Label Skewness

1 code implementation • 30 May 2022 • Chen Chen, Yuchen Liu, Xingjun Ma, Lingjuan Lyu

In this paper, we study the problem of FAT under label skewness, and reveal one root cause of the training instability and natural accuracy degradation issues: skewed labels lead to non-identical class probabilities and heterogeneous local models.

VeriFi: Towards Verifiable Federated Unlearning

no code implementations • 25 May 2022 • Xiangshan Gao, Xingjun Ma, Jingyi Wang, Youcheng Sun, Bo Li, Shouling Ji, Peng Cheng, Jiming Chen

One desirable property for FL is the implementation of the right to be forgotten (RTBF), i.e., a leaving participant has the right to request to delete its private data from the global model.

Few-Shot Backdoor Attacks on Visual Object Tracking

1 code implementation • ICLR 2022 • Yiming Li, Haoxiang Zhong, Xingjun Ma, Yong Jiang, Shu-Tao Xia

Visual object tracking (VOT) has been widely adopted in mission-critical applications, such as autonomous driving and intelligent surveillance systems.

On the Convergence and Robustness of Adversarial Training

no code implementations • 15 Dec 2021 • Yisen Wang, Xingjun Ma, James Bailey, JinFeng Yi, BoWen Zhou, Quanquan Gu

In this paper, we propose such a criterion, namely First-Order Stationary Condition for constrained optimization (FOSC), to quantitatively evaluate the convergence quality of adversarial examples found in the inner maximization.

Gradient Driven Rewards to Guarantee Fairness in Collaborative Machine Learning

no code implementations • NeurIPS 2021 • Xinyi Xu, Lingjuan Lyu, Xingjun Ma, Chenglin Miao, Chuan Sheng Foo, Bryan Kian Hsiang Low

In this paper, we adopt federated learning as a gradient-based formalization of collaborative machine learning, propose a novel cosine gradient Shapley value to evaluate the agents’ uploaded model parameter updates/gradients, and design theoretically guaranteed fair rewards in the form of better model performance.

SpineOne: A One-Stage Detection Framework for Degenerative Discs and Vertebrae

no code implementations • 28 Oct 2021 • Jiabo He, Wei Liu, Yu Wang, Xingjun Ma, Xian-Sheng Hua

Spinal degeneration plagues many elders, office workers, and even the younger generations.

Alpha-IoU: A Family of Power Intersection over Union Losses for Bounding Box Regression

1 code implementation • NeurIPS 2021 • Jiabo He, Sarah Erfani, Xingjun Ma, James Bailey, Ying Chi, Xian-Sheng Hua

Bounding box (bbox) regression is a fundamental task in computer vision.

Anti-Backdoor Learning: Training Clean Models on Poisoned Data

1 code implementation • NeurIPS 2021 • Yige Li, Xixiang Lyu, Nodens Koren, Lingjuan Lyu, Bo Li, Xingjun Ma

From this view, we identify two inherent characteristics of backdoor attacks as their weaknesses: 1) the models learn backdoored data much faster than learning with clean data, and the stronger the attack the faster the model converges on backdoored data; 2) the backdoor task is tied to a specific class (the backdoor target class).

ECG-ATK-GAN: Robustness against Adversarial Attacks on ECGs using Conditional Generative Adversarial Networks

1 code implementation • 17 Oct 2021 • Khondker Fariha Hossain, Sharif Amit Kamran, Alireza Tavakkoli, Xingjun Ma

The experiment confirms that our model is more robust against such adversarial attacks for classifying arrhythmia with high accuracy.

Exploring Architectural Ingredients of Adversarially Robust Deep Neural Networks

1 code implementation • NeurIPS 2021 • Hanxun Huang, Yisen Wang, Sarah Monazam Erfani, Quanquan Gu, James Bailey, Xingjun Ma

Specifically, we make the following key observations: 1) more parameters (higher model capacity) does not necessarily help adversarial robustness; 2) reducing capacity at the last stage (the last group of blocks) of the network can actually improve adversarial robustness; and 3) under the same parameter budget, there exists an optimal architectural configuration for adversarial robustness.

Understanding Graph Learning with Local Intrinsic Dimensionality

no code implementations • 29 Sep 2021 • Xiaojun Guo, Xingjun Ma, Yisen Wang

Many real-world problems can be formulated as graphs and solved by graph learning techniques.

FedDiscrete: A Secure Federated Learning Algorithm Against Weight Poisoning

no code implementations • 29 Sep 2021 • Yutong Dai, Xingjun Ma, Lichao Sun

Federated learning (FL) is a privacy-aware collaborative learning paradigm that allows multiple parties to jointly train a machine learning model without sharing their private data.

Revisiting Adversarial Robustness Distillation: Robust Soft Labels Make Student Better

1 code implementation • ICCV 2021 • Bojia Zi, Shihao Zhao, Xingjun Ma, Yu-Gang Jiang

We empirically demonstrate the effectiveness of our RSLAD approach over existing adversarial training and distillation methods in improving the robustness of small models against state-of-the-art attacks including the AutoAttack.

ECG-Adv-GAN: Detecting ECG Adversarial Examples with Conditional Generative Adversarial Networks

no code implementations • 16 Jul 2021 • Khondker Fariha Hossain, Sharif Amit Kamran, Alireza Tavakkoli, Lei Pan, Xingjun Ma, Sutharshan Rajasegarar, Chandan Karmaker

Moreover, the model is conditioned on class-specific ECG signals to synthesize realistic adversarial examples.

Adversarial Interaction Attacks: Fooling AI to Misinterpret Human Intentions

no code implementations • ICML Workshop AML 2021 • Nodens Koren, Xingjun Ma, Qiuhong Ke, Yisen Wang, James Bailey

Understanding the actions of both humans and artificial intelligence (AI) agents is important before modern AI systems can be fully integrated into our daily life.

Noise Doesn't Lie: Towards Universal Detection of Deep Inpainting

no code implementations • 3 Jun 2021 • Ang Li, Qiuhong Ke, Xingjun Ma, Haiqin Weng, Zhiyuan Zong, Feng Xue, Rui Zhang

A promising countermeasure against such forgeries is deep inpainting detection, which aims to locate the inpainted regions in an image.

$\alpha$-IoU: A Family of Power Intersection over Union Losses for Bounding Box Regression

1 code implementation • NeurIPS 2021 • Jiabo He, Sarah Monazam Erfani, Xingjun Ma, James Bailey, Ying Chi, Xian-Sheng Hua

Bounding box (bbox) regression is a fundamental task in computer vision.

Dual Head Adversarial Training

1 code implementation • 21 Apr 2021 • Yujing Jiang, Xingjun Ma, Sarah Monazam Erfani, James Bailey

Deep neural networks (DNNs) are known to be vulnerable to adversarial examples/attacks, raising concerns about their reliability in safety-critical applications.

Improving Adversarial Robustness via Channel-wise Activation Suppressing

1 code implementation • ICLR 2021 • Yang Bai, Yuyuan Zeng, Yong Jiang, Shu-Tao Xia, Xingjun Ma, Yisen Wang

The study of adversarial examples and their activation has attracted significant attention for secure and robust learning with deep neural networks (DNNs).

Anomaly Detection for Scenario-based Insider Activities using CGAN Augmented Data

no code implementations • 15 Feb 2021 • R G Gayathri, Atul Sajjanhar, Yong Xiang, Xingjun Ma

Insider threats are the cyber attacks from within the trusted entities of an organization.

RobOT: Robustness-Oriented Testing for Deep Learning Systems

1 code implementation • 11 Feb 2021 • Jingyi Wang, Jialuo Chen, Youcheng Sun, Xingjun Ma, Dongxia Wang, Jun Sun, Peng Cheng

A key part of RobOT is a quantitative measurement on 1) the value of each test case in improving model robustness (often via retraining), and 2) the convergence quality of the model robustness improvement.

Software Engineering

What Do Deep Nets Learn? Class-wise Patterns Revealed in the Input Space

no code implementations • 18 Jan 2021 • Shihao Zhao, Xingjun Ma, Yisen Wang, James Bailey, Bo Li, Yu-Gang Jiang

In this paper, we focus on image classification and propose a method to visualize and understand the class-wise knowledge (patterns) learned by DNNs under three different settings including natural, backdoor and adversarial.

Adversarial Interaction Attack: Fooling AI to Misinterpret Human Intentions

no code implementations • 17 Jan 2021 • Nodens Koren, Qiuhong Ke, Yisen Wang, James Bailey, Xingjun Ma

Understanding the actions of both humans and artificial intelligence (AI) agents is important before modern AI systems can be fully integrated into our daily life.

Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks

1 code implementation • ICLR 2021 • Yige Li, Xixiang Lyu, Nodens Koren, Lingjuan Lyu, Bo Li, Xingjun Ma

NAD utilizes a teacher network to guide the finetuning of the backdoored student network on a small clean subset of data such that the intermediate-layer attention of the student network aligns with that of the teacher network.

Unlearnable Examples: Making Personal Data Unexploitable

1 code implementation • ICLR 2021 • Hanxun Huang, Xingjun Ma, Sarah Monazam Erfani, James Bailey, Yisen Wang

This paper raises the question: can data be made unlearnable for deep learning models?

WildDeepfake: A Challenging Real-World Dataset for Deepfake Detection

1 code implementation • 5 Jan 2021 • Bojia Zi, Minghao Chang, Jingjing Chen, Xingjun Ma, Yu-Gang Jiang

WildDeepfake is a small dataset that can be used, in addition to existing datasets, to develop and test the effectiveness of deepfake detectors against real-world deepfakes.

Neural Architecture Search via Combinatorial Multi-Armed Bandit

no code implementations • 1 Jan 2021 • Hanxun Huang, Xingjun Ma, Sarah M. Erfani, James Bailey

NAS can be performed via policy gradient, evolutionary algorithms, differentiable architecture search or tree-search methods.

Privacy and Robustness in Federated Learning: Attacks and Defenses

no code implementations • 7 Dec 2020 • Lingjuan Lyu, Han Yu, Xingjun Ma, Chen Chen, Lichao Sun, Jun Zhao, Qiang Yang, Philip S. Yu

Besides training powerful global models, it is of paramount importance to design FL systems that have privacy guarantees and are resistant to different types of adversaries.

Imbalanced Gradients: A New Cause of Overestimated Adversarial Robustness

no code implementations • 28 Sep 2020 • Linxi Jiang, Xingjun Ma, Zejia Weng, James Bailey, Yu-Gang Jiang

Evaluating the robustness of a defense model is a challenging task in adversarial robustness research.

Short-Term and Long-Term Context Aggregation Network for Video Inpainting

no code implementations • ECCV 2020 • Ang Li, Shanshan Zhao, Xingjun Ma, Mingming Gong, Jianzhong Qi, Rui Zhang, DaCheng Tao, Ramamohanarao Kotagiri

Video inpainting aims to restore missing regions of a video and has many applications such as video editing and object removal.

How to Democratise and Protect AI: Fair and Differentially Private Decentralised Deep Learning

no code implementations • 18 Jul 2020 • Lingjuan Lyu, Yitong Li, Karthik Nandakumar, Jiangshan Yu, Xingjun Ma

This paper first considers the research problem of fairness in collaborative deep learning, while ensuring privacy.

Reflection Backdoor: A Natural Backdoor Attack on Deep Neural Networks

3 code implementations • ECCV 2020 • Yunfei Liu, Xingjun Ma, James Bailey, Feng Lu

A backdoor attack installs a backdoor into the victim model by injecting a backdoor pattern into a small proportion of the training data.

Imbalanced Gradients: A Subtle Cause of Overestimated Adversarial Robustness

1 code implementation • 24 Jun 2020 • Xingjun Ma, Linxi Jiang, Hanxun Huang, Zejia Weng, James Bailey, Yu-Gang Jiang

Evaluating the robustness of a defense model is a challenging task in adversarial robustness research.

Normalized Loss Functions for Deep Learning with Noisy Labels

4 code implementations • ICML 2020 • Xingjun Ma, Hanxun Huang, Yisen Wang, Simone Romano, Sarah Erfani, James Bailey

However, in practice, simply being robust is not sufficient for a loss function to train accurate DNNs.

Ranked #30 on Image Classification on mini WebVision 1.0 (ImageNet Top-1 Accuracy metric)

Improving Adversarial Robustness Requires Revisiting Misclassified Examples

1 code implementation • ICLR 2020 • Yisen Wang, Difan Zou, Jin-Feng Yi, James Bailey, Xingjun Ma, Quanquan Gu

In this paper, we investigate the distinctive influence of misclassified and correctly classified examples on the final robustness of adversarial training.

Adversarial Camouflage: Hiding Physical-World Attacks with Natural Styles

1 code implementation • CVPR 2020 • Ranjie Duan, Xingjun Ma, Yisen Wang, James Bailey, A. K. Qin, Yun Yang

Deep neural networks (DNNs) are known to be vulnerable to adversarial examples.

Clean-Label Backdoor Attacks on Video Recognition Models

1 code implementation • CVPR 2020 • Shihao Zhao, Xingjun Ma, Xiang Zheng, James Bailey, Jingjing Chen, Yu-Gang Jiang

We propose the use of a universal adversarial trigger as the backdoor trigger to attack video recognition models, a situation where backdoor attacks are likely to be challenged by the above 4 strict conditions.

Skip Connections Matter: On the Transferability of Adversarial Examples Generated with ResNets

3 code implementations • ICLR 2020 • Dongxian Wu, Yisen Wang, Shu-Tao Xia, James Bailey, Xingjun Ma

We find that using more gradients from the skip connections rather than the residual modules according to a decay factor, allows one to craft adversarial examples with high transferability.

Symmetric Cross Entropy for Robust Learning with Noisy Labels

4 code implementations • ICCV 2019 • Yisen Wang, Xingjun Ma, Zaiyi Chen, Yuan Luo, Jin-Feng Yi, James Bailey

In this paper, we show that DNN learning with Cross Entropy (CE) exhibits overfitting to noisy labels on some classes ("easy" classes), but more surprisingly, it also suffers from significant under learning on some other classes ("hard" classes).

Ranked #43 on Image Classification on Clothing1M

Generative Image Inpainting with Submanifold Alignment

no code implementations • 1 Aug 2019 • Ang Li, Jianzhong Qi, Rui Zhang, Xingjun Ma, Kotagiri Ramamohanarao

Image inpainting aims at restoring missing regions of corrupted images, which has many applications such as image restoration and object removal.

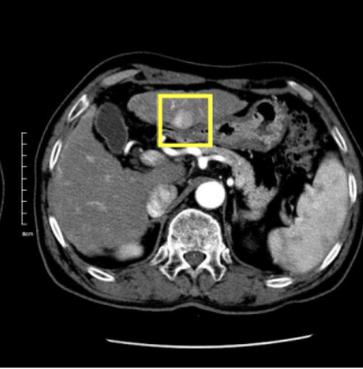

Understanding Adversarial Attacks on Deep Learning Based Medical Image Analysis Systems

no code implementations • 24 Jul 2019 • Xingjun Ma, Yuhao Niu, Lin Gu, Yisen Wang, Yitian Zhao, James Bailey, Feng Lu

This raises safety concerns about the deployment of these systems in clinical settings.

Towards Fair and Privacy-Preserving Federated Deep Models

1 code implementation • 4 Jun 2019 • Lingjuan Lyu, Jiangshan Yu, Karthik Nandakumar, Yitong Li, Xingjun Ma, Jiong Jin, Han Yu, Kee Siong Ng

This problem can be addressed by either a centralized framework that deploys a central server to train a global model on the joint data from all parties, or a distributed framework that leverages a parameter server to aggregate local model updates.

Quality Evaluation of GANs Using Cross Local Intrinsic Dimensionality

no code implementations • 2 May 2019 • Sukarna Barua, Xingjun Ma, Sarah Monazam Erfani, Michael E. Houle, James Bailey

In this paper, we demonstrate that an intrinsic dimensional characterization of the data space learned by a GAN model leads to an effective evaluation metric for GAN quality.

Black-box Adversarial Attacks on Video Recognition Models

no code implementations • 10 Apr 2019 • Linxi Jiang, Xingjun Ma, Shaoxiang Chen, James Bailey, Yu-Gang Jiang

Using three benchmark video datasets, we demonstrate that V-BAD can craft both untargeted and targeted attacks to fool two state-of-the-art deep video recognition models.

Dimensionality-Driven Learning with Noisy Labels

2 code implementations • ICML 2018 • Xingjun Ma, Yisen Wang, Michael E. Houle, Shuo Zhou, Sarah M. Erfani, Shu-Tao Xia, Sudanthi Wijewickrema, James Bailey

Datasets with significant proportions of noisy (incorrect) class labels present challenges for training accurate Deep Neural Networks (DNNs).

Ranked #39 on Image Classification on mini WebVision 1.0

Iterative Learning with Open-set Noisy Labels

1 code implementation • CVPR 2018 • Yisen Wang, Weiyang Liu, Xingjun Ma, James Bailey, Hongyuan Zha, Le Song, Shu-Tao Xia

We refer to this more complex scenario as the open-set noisy label problem and show that making accurate predictions in this setting is nontrivial.

Characterizing Adversarial Subspaces Using Local Intrinsic Dimensionality

1 code implementation • ICLR 2018 • Xingjun Ma, Bo Li, Yisen Wang, Sarah M. Erfani, Sudanthi Wijewickrema, Grant Schoenebeck, Dawn Song, Michael E. Houle, James Bailey

Deep Neural Networks (DNNs) have recently been shown to be vulnerable against adversarial examples, which are carefully crafted instances that can mislead DNNs to make errors during prediction.

Providing Effective Real-time Feedback in Simulation-based Surgical Training

no code implementations • 30 Jun 2017 • Xingjun Ma, Sudanthi Wijewickrema, Yun Zhou, Shuo Zhou, Stephen O'Leary, James Bailey

Experimental results in a temporal bone surgery simulation show that the proposed method is able to extract highly effective feedback at a high level of efficiency.

Adversarial Generation of Real-time Feedback with Neural Networks for Simulation-based Training

no code implementations • 4 Mar 2017 • Xingjun Ma, Sudanthi Wijewickrema, Shuo Zhou, Yun Zhou, Zakaria Mhammedi, Stephen O'Leary, James Bailey

It is the aim of this paper to develop an efficient and effective feedback generation method for the provision of real-time feedback in SBT.