Image Generation

1996 papers with code • 85 benchmarks • 67 datasets

Image Generation (synthesis) is the task of generating new images that resemble those in an existing dataset.

- Unconditional generation refers to sampling from the data distribution without any conditioning input, i.e. $p(y)$.

- Conditional image generation (subtask) refers to sampling from the data distribution conditioned on a label $x$, i.e. $p(y|x)$.
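The distinction between $p(y)$ and $p(y|x)$ can be illustrated with a toy sketch. It is not an image model: each "image" $y$ is a single number, and the class names, means, and prior below are hypothetical values chosen only for illustration. Unconditional sampling is shown as marginalising over the label, $p(y) = \sum_x p(x)\,p(y|x)$.

```python
import random

# Hypothetical toy setup: each "image" y is one number, each label x is a class name.
CLASS_MEANS = {"cat": -2.0, "dog": 3.0}   # assumed class-conditional means
CLASS_PRIOR = {"cat": 0.5, "dog": 0.5}    # assumed label prior p(x)
NOISE_STD = 0.5                           # assumed within-class spread


def sample_conditional(label, rng):
    """Draw y ~ p(y | x = label): a Gaussian centred on the class mean."""
    return rng.gauss(CLASS_MEANS[label], NOISE_STD)


def sample_unconditional(rng):
    """Draw y ~ p(y): pick a label from the prior, then sample given that label."""
    labels = list(CLASS_PRIOR)
    weights = [CLASS_PRIOR[name] for name in labels]
    label = rng.choices(labels, weights=weights, k=1)[0]
    return sample_conditional(label, rng)


rng = random.Random(0)
cond = [sample_conditional("dog", rng) for _ in range(2000)]
uncond = [sample_unconditional(rng) for _ in range(2000)]

cond_mean = sum(cond) / len(cond)        # close to the "dog" mean
uncond_mean = sum(uncond) / len(uncond)  # close to the prior-weighted mean of both classes
print(f"conditional mean: {cond_mean:.2f}, unconditional mean: {uncond_mean:.2f}")
```

Conditional samples cluster around the requested class, while unconditional samples cover every class in proportion to the prior. Real generators (GANs, diffusion models) learn these distributions from data rather than having them written down.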

In this section, you can find state-of-the-art leaderboards for unconditional generation. For conditional generation and other types of image generation, refer to the subtasks.

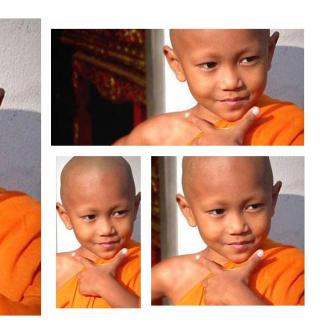

(Image credit: StyleGAN)

Libraries

Use these libraries to find Image Generation models and implementations.

Subtasks

- Image-to-Image Translation
- Image Inpainting
- Text-to-Image Generation
- Conditional Image Generation
- Face Generation
- 3D Generation
- Image Harmonization
- Pose Transfer
- 3D-Aware Image Synthesis
- Facial Inpainting
- Layout-to-Image Generation
- ROI-based image generation
- Image Generation from Scene Graphs
- Pose-Guided Image Generation
- User Constrained Thumbnail Generation
- Handwritten Word Generation
- Chinese Landscape Painting Generation
- person reposing
- Infinite Image Generation
- Multi class one-shot image synthesis
- Single class few-shot image synthesis

Latest papers with no code

Synthesizing Iris Images using Generative Adversarial Networks: Survey and Comparative Analysis

In this paper, we present a comprehensive review of state-of-the-art GAN-based synthetic iris image generation techniques, evaluating their strengths and limitations in producing realistic and useful iris images that can be used for both training and testing iris recognition systems and presentation attack detectors.

BlenderAlchemy: Editing 3D Graphics with Vision-Language Models

Specifically, we design a vision-based edit generator and state evaluator to work together to find the correct sequence of actions to achieve the goal.

MuseumMaker: Continual Style Customization without Catastrophic Forgetting

To deal with catastrophic forgetting amongst past learned styles, we devise a dual regularization for shared-LoRA module to optimize the direction of model update, which could regularize the diffusion model from both weight and feature aspects, respectively.

Conditional Distribution Modelling for Few-Shot Image Synthesis with Diffusion Models

Few-shot image synthesis entails generating diverse and realistic images of novel categories using only a few example images.

Sketch2Human: Deep Human Generation with Disentangled Geometry and Appearance Control

This work presents Sketch2Human, the first system for controllable full-body human image generation guided by a semantic sketch (for geometry control) and a reference image (for appearance control).

SkinGEN: an Explainable Dermatology Diagnosis-to-Generation Framework with Interactive Vision-Language Models

With the continuous advancement of vision language models (VLMs) technology, remarkable research achievements have emerged in the dermatology field, the fourth most prevalent human disease category.

From Parts to Whole: A Unified Reference Framework for Controllable Human Image Generation

Addressing this, we introduce Parts2Whole, a novel framework designed for generating customized portraits from multiple reference images, including pose images and various aspects of human appearance.

Multimodal Large Language Model is a Human-Aligned Annotator for Text-to-Image Generation

Recent studies have demonstrated the exceptional potentials of leveraging human preference datasets to refine text-to-image generative models, enhancing the alignment between generated images and textual prompts.

FINEMATCH: Aspect-based Fine-grained Image and Text Mismatch Detection and Correction

To address this, we propose FineMatch, a new aspect-based fine-grained text and image matching benchmark, focusing on text and image mismatch detection and correction.

ID-Aligner: Enhancing Identity-Preserving Text-to-Image Generation with Reward Feedback Learning

The rapid development of diffusion models has triggered diverse applications.

Datasets

- CIFAR-10
- ImageNet
- CIFAR-100
- MNIST
- Cityscapes
- CelebA
- Fashion-MNIST
- CUB-200-2011
- FFHQ
- Oxford 102 Flower