Probabilistic Autoencoder

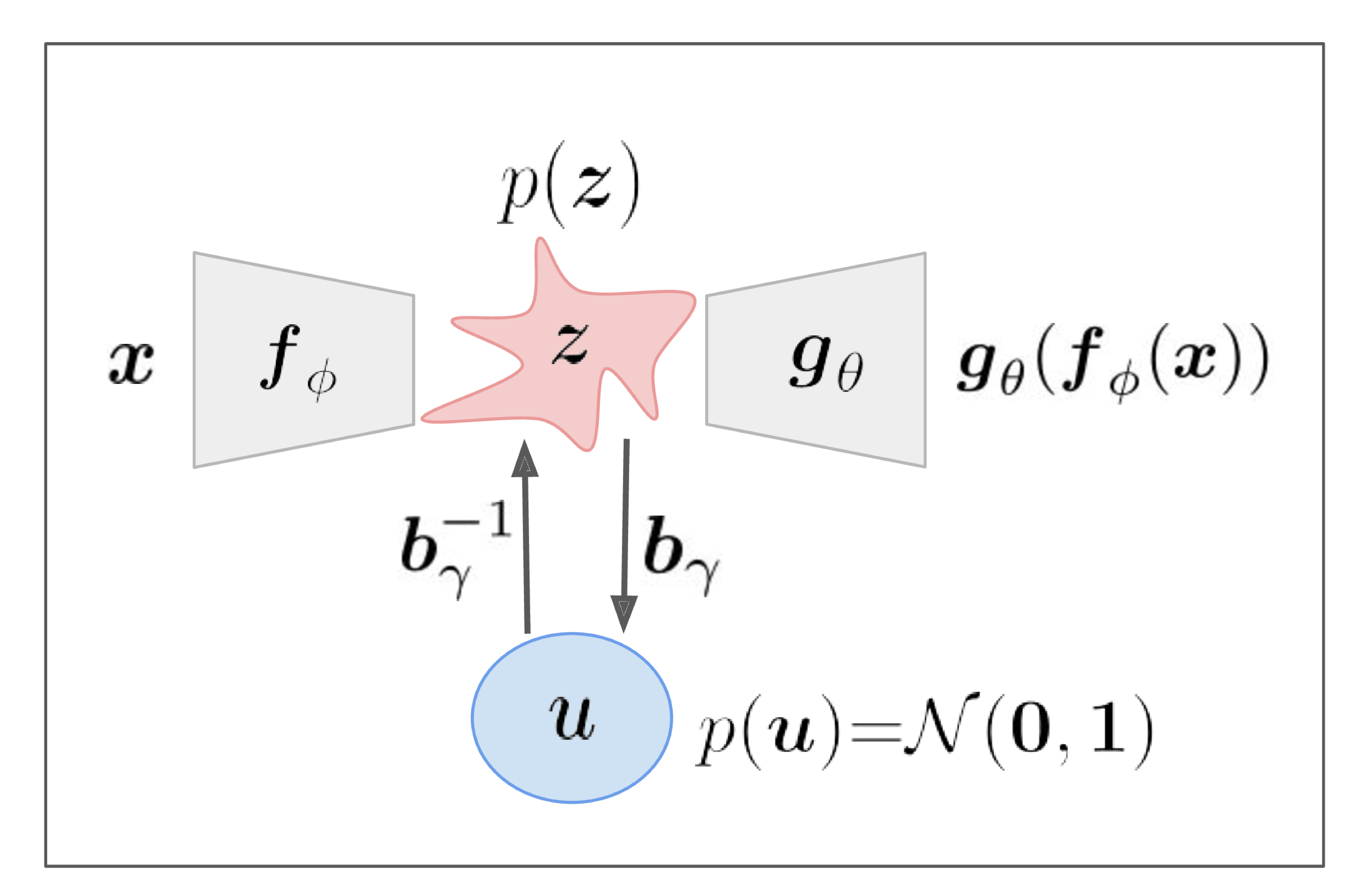

Principal Component Analysis (PCA) minimizes the reconstruction error within a class of linear models of fixed component dimensionality. Probabilistic PCA adds a probabilistic structure by learning the probability distribution of the PCA latent space weights, thus creating a generative model. Autoencoders (AE) minimize the reconstruction error within a class of nonlinear models of fixed latent space dimensionality and outperform PCA at fixed dimensionality. Here, we introduce the Probabilistic Autoencoder (PAE), which learns the probability distribution of the AE latent space weights using a normalizing flow (NF). The PAE is fast and easy to train and achieves small reconstruction errors, high sample quality, and good performance in downstream tasks. We compare the PAE to the Variational Autoencoder (VAE), showing that the PAE trains faster, reaches a lower reconstruction error, and produces good sample quality without requiring special tuning parameters or training procedures. We further demonstrate that the PAE is a powerful model for the downstream task of probabilistic image reconstruction, framed as Bayesian inference for inverse problems, in inpainting and denoising applications. Finally, we identify the latent space density from the NF as a promising outlier detection metric.
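The two-stage idea described in the abstract (first fit a reconstruction model, then separately fit a density on its latent space) can be illustrated with a deliberately simplified sketch. This is not the authors' implementation: it assumes a linear autoencoder fit by PCA as a stand-in for the nonlinear AE, and a single affine flow layer (equivalent to a Gaussian fit) as a stand-in for the normalizing flow. All function names and the synthetic data are hypothetical and chosen only for illustration.

```python
import numpy as np

def fit_linear_autoencoder(X, k):
    """Stage 1 stand-in (assumption): linear autoencoder fit via PCA."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    W = Vt[:k].T  # (d, k) decoder weights; the encoder is W.T
    return mean, W

def encode(X, mean, W):
    return (X - mean) @ W

def decode(Z, mean, W):
    return Z @ W.T + mean

def fit_affine_flow(Z):
    """Stage 2 stand-in (assumption): one affine flow layer z = L u + mu,
    fit by maximum likelihood with a standard normal base density."""
    mu = Z.mean(axis=0)
    cov = np.cov(Z, rowvar=False, bias=True) + 1e-6 * np.eye(Z.shape[1])
    L = np.linalg.cholesky(cov)
    return mu, L

def latent_log_density(Z, mu, L):
    """Change of variables: log p(z) = log N(u; 0, I) - log|det L|."""
    u = np.linalg.solve(L, (Z - mu).T).T
    k = Z.shape[1]
    log_base = -0.5 * (u ** 2).sum(axis=1) - 0.5 * k * np.log(2 * np.pi)
    return log_base - np.log(np.diag(L)).sum()

def sample(n, mu, L, mean, W, rng):
    """Generate data: draw u ~ N(0, I), push through the flow, then decode."""
    u = rng.standard_normal((n, L.shape[0]))
    return decode(u @ L.T + mu, mean, W)

# Synthetic data (hypothetical): 10-dimensional points on a noisy 2-D subspace.
rng = np.random.default_rng(0)
true_latent = rng.standard_normal((500, 2)) * np.array([3.0, 1.0])
A = rng.standard_normal((2, 10))
X = true_latent @ A + 0.05 * rng.standard_normal((500, 10))

mean, W = fit_linear_autoencoder(X, k=2)   # stage 1: reconstruction model
Z = encode(X, mean, W)
mu, L = fit_affine_flow(Z)                 # stage 2: latent density

recon_mse = np.mean((decode(Z, mean, W) - X) ** 2)
inlier_scores = latent_log_density(Z, mu, L)

# A point far outside the training distribution gets a low latent density.
x_out = np.array([[30.0, 30.0]]) @ A
outlier_score = latent_log_density(encode(x_out, mean, W), mu, L)[0]

samples = sample(5, mu, L, mean, W, rng)
```

With these stand-ins the model reduces to something close to probabilistic PCA; swapping in a nonlinear autoencoder and a more expressive flow would change the two fitting functions while leaving the encode, score, and sample steps structurally the same.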

MNIST

CelebA

Fashion-MNIST
