X$^2$-VLM: All-In-One Pre-trained Model For Vision-Language Tasks

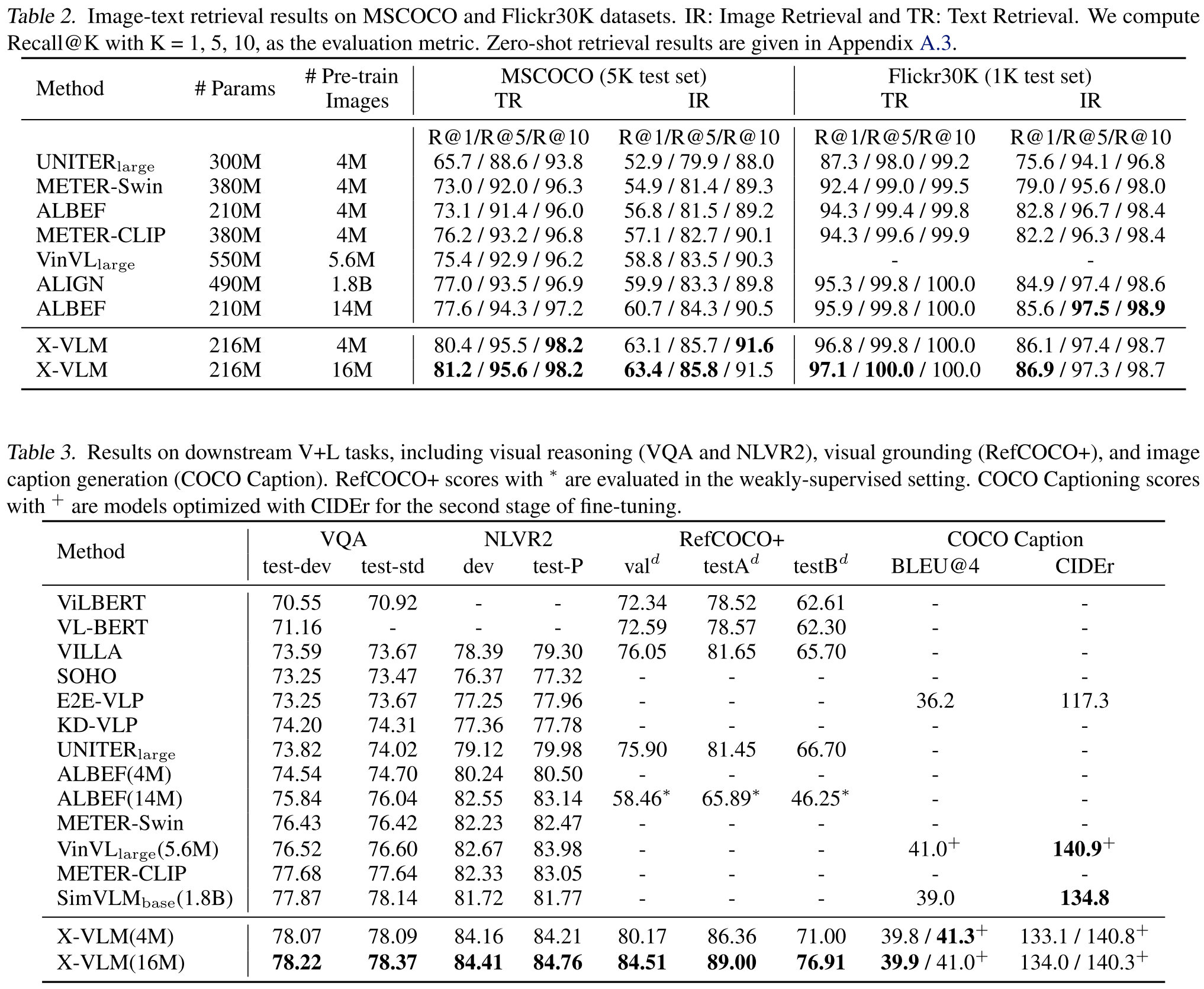

Vision-language pre-training aims to learn alignments between vision and language from large amounts of data. Most existing methods learn only image-text alignments; others use pre-trained object detectors to exploit vision-language alignments at the object level. In this paper, we propose to learn multi-grained vision-language alignments with a unified pre-training framework that performs multi-grained aligning and multi-grained localization simultaneously. Building on this framework, we present X$^2$-VLM, an all-in-one model with a flexible modular architecture, in which we further unify image-text pre-training and video-text pre-training in a single model. X$^2$-VLM is able to learn unlimited visual concepts associated with diverse text descriptions. Experimental results show that X$^2$-VLM performs best at both base and large scale on image-text and video-text tasks, achieving a good trade-off between performance and model scale. Moreover, we show that the modular design of X$^2$-VLM makes it highly transferable, so that it can be used in any language or domain. For example, by simply replacing the text encoder with XLM-R, X$^2$-VLM outperforms state-of-the-art multilingual multi-modal pre-trained models without any multilingual pre-training. The code and pre-trained models are available at https://github.com/zengyan-97/X2-VLM.
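The abstract names two pre-training ideas, multi-grained aligning and multi-grained localization, without spelling out the losses. The sketch below assumes a formulation in the style of the earlier X-VLM: a symmetric contrastive (InfoNCE) loss between "visual concepts" (whole images, regions, or objects) and their paired texts, and a box-prediction loss of L1 plus (1 - GIoU) for locating a concept given its text. The function names, the fixed temperature of 0.07, and the plain-Python implementation are illustrative assumptions, not X$^2$-VLM's released code; the actual pre-training recipe may use different or additional objectives and weights.

```python
import math
from typing import List, Sequence, Tuple

# Assumed box format: (cx, cy, w, h), normalized to [0, 1].
Box = Tuple[float, float, float, float]


def _log_softmax(row: Sequence[float]) -> List[float]:
    # Numerically stable log-softmax over one row of logits.
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def contrastive_loss(sim: List[List[float]], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over a batch of (visual concept, text) pairs.

    sim[i][j] is the similarity between visual concept i (an image, region,
    or object) and text j; matched pairs are assumed to lie on the diagonal.
    """
    n = len(sim)
    logits = [[s / temperature for s in row] for row in sim]
    # Vision-to-text: for each visual concept, a softmax over all texts.
    v2t = -sum(_log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-vision: for each text, a softmax over all visual concepts.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    t2v = -sum(_log_softmax(cols[j])[j] for j in range(n)) / n
    return (v2t + t2v) / 2


def _to_corners(b: Box) -> Box:
    # Convert (cx, cy, w, h) to (x1, y1, x2, y2).
    cx, cy, w, h = b
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def giou(a: Box, b: Box) -> float:
    """Generalized IoU of two boxes with positive area."""
    ax1, ay1, ax2, ay2 = _to_corners(a)
    bx1, by1, bx2, by2 = _to_corners(b)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    # Area of the smallest axis-aligned box enclosing both a and b.
    hull = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    return inter / union - (hull - union) / hull


def box_loss(pred: Box, target: Box) -> float:
    """L1 distance plus (1 - GIoU) between a predicted and a ground-truth box."""
    l1 = sum(abs(p - t) for p, t in zip(pred, target))
    return l1 + (1.0 - giou(pred, target))


if __name__ == "__main__":
    # Diagonal-dominant similarities (well-aligned pairs) give a lower loss.
    print(contrastive_loss([[0.9, 0.1], [0.2, 0.8]]))
    print(box_loss((0.5, 0.5, 0.2, 0.2), (0.5, 0.5, 0.2, 0.2)))  # 0.0 for a perfect box
```

Because the same contrastive function accepts any visual concept, one loss can cover image-level, region-level, and object-level pairs; GIoU is used alongside L1 so that non-overlapping boxes still receive a useful gradient signal.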


Results from the Paper

Ranked #1 on Cross-Modal Retrieval on Flickr30k (using extra training data)


Datasets

- MS COCO
- Visual Genome
- Flickr30k
- MSR-VTT
- Visual Question Answering v2.0
- RefCOCO
- HowTo100M
- WebVid
- Objects365
- CC12M
- NLVR