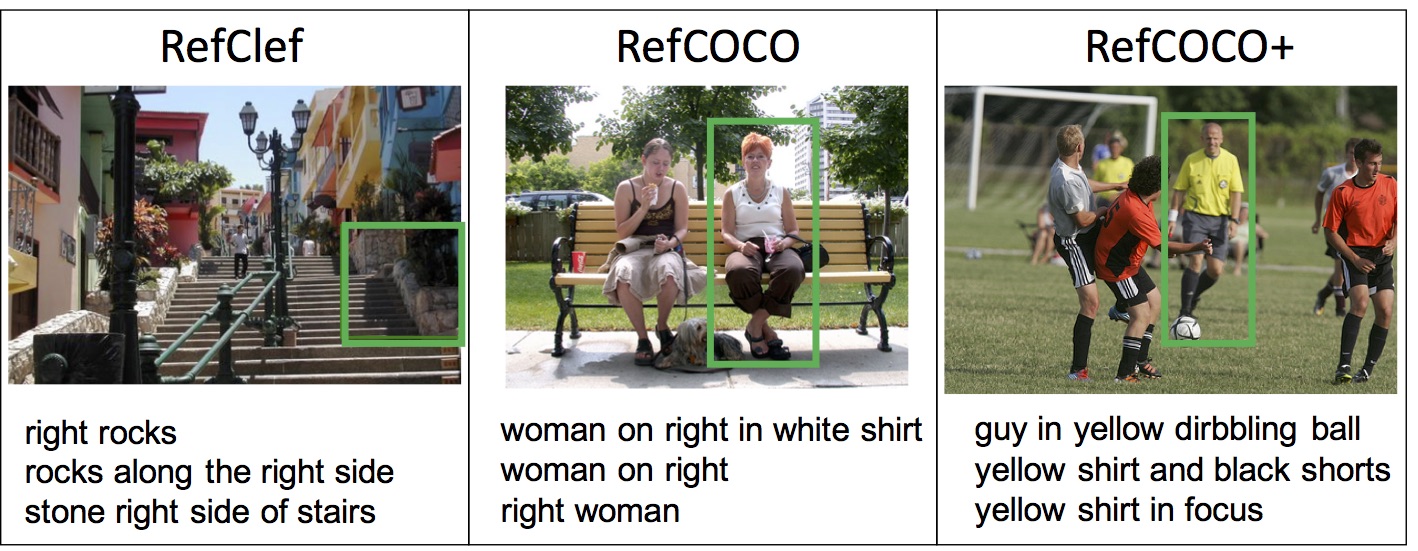

RefCOCO

Introduced by Kazemzadeh et al. in ReferItGame: Referring to Objects in Photographs of Natural ScenesThe RefCOCO dataset is a referring expression generation (REG) dataset used for tasks related to understanding natural language expressions that refer to specific objects in images. Here are the key details about RefCOCO:

-

Collection Method: The dataset was collected using the ReferitGame, a two-player game. In this game, the first player views an image with a segmented target object and writes a natural language expression referring to that object. The second player sees only the image and the referring expression and must click on the corresponding object. If both players perform correctly, they earn points and switch roles; otherwise, they receive a new object and image for description.

-

Dataset Variants: RefCOCO: Contains 142,209 refer expressions for 50,000 objects across 19,994 images. RefCOCO+: Includes 141,564 expressions for 49,856 objects in 19,992 images. RefCOCOg: This variant has 25,799 images, 95,010 referring expressions, and 49,822 object instances.

-

Language and Restrictions: RefCOCO allows any type of language in the referring expressions. RefCOCO+ disallows location words in expressions to focus purely on appearance-based descriptions (e.g., "the man in the yellow polka-dotted shirt") rather than viewer-dependent descriptions (e.g., "the second man from the left").

These datasets serve as valuable resources for tasks like referring expression segmentation, comprehension, and visual grounding in computer vision research.

Benchmarks

Papers

| Paper | Code | Results | Date | Stars |

|---|