Point-GCC: Universal Self-supervised 3D Scene Pre-training via Geometry-Color Contrast

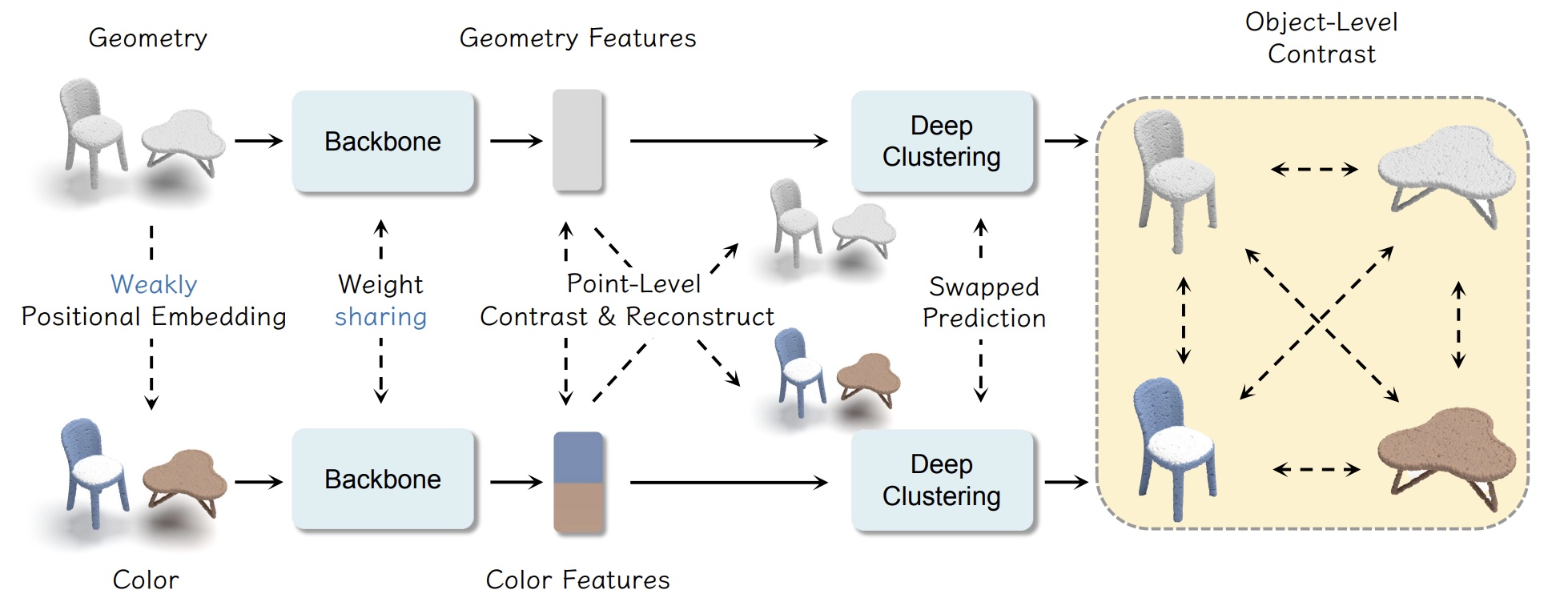

Geometry and color information provided by the point clouds are both crucial for 3D scene understanding. Two pieces of information characterize the different aspects of point clouds, but existing methods lack an elaborate design for the discrimination and relevance. Hence we explore a 3D self-supervised paradigm that can better utilize the relations of point cloud information. Specifically, we propose a universal 3D scene pre-training framework via Geometry-Color Contrast (Point-GCC), which aligns geometry and color information using a Siamese network. To take care of actual application tasks, we design (i) hierarchical supervision with point-level contrast and reconstruct and object-level contrast based on the novel deep clustering module to close the gap between pre-training and downstream tasks; (ii) architecture-agnostic backbone to adapt for various downstream models. Benefiting from the object-level representation associated with downstream tasks, Point-GCC can directly evaluate model performance and the result demonstrates the effectiveness of our methods. Transfer learning results on a wide range of tasks also show consistent improvements across all datasets. e.g., new state-of-the-art object detection results on SUN RGB-D and S3DIS datasets. Codes will be released at https://github.com/Asterisci/Point-GCC.

PDF Abstract

ScanNet

ScanNet

SUN RGB-D

SUN RGB-D

S3DIS

S3DIS