Universal Segmentation at Arbitrary Granularity with Language Instruction

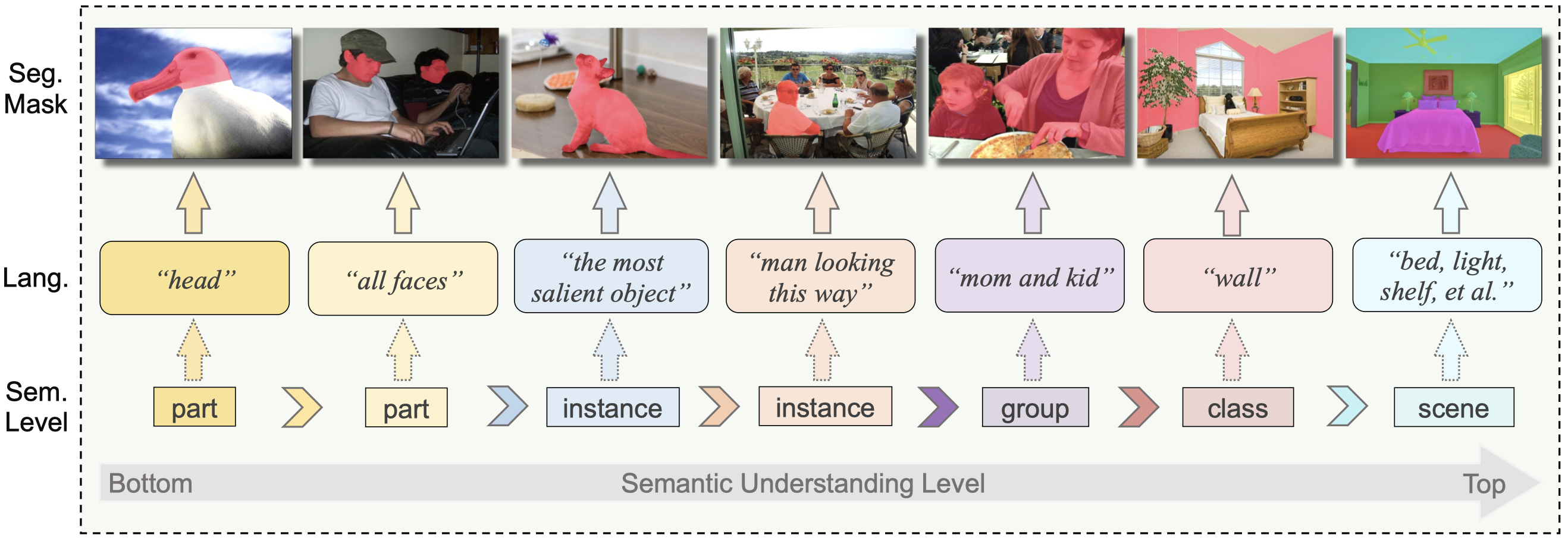

This paper aims to achieve universal segmentation at arbitrary semantic levels. Despite significant progress in recent years, specialist segmentation approaches are limited to specific tasks and data distributions. Retraining a new model to adapt to new scenarios or settings is costly in computation and time, which raises the demand for a versatile, universal segmentation model that can cater to various granularities. Although some attempts have been made to unify different segmentation tasks or to generalize to various scenarios, limitations in the definition of paradigms and input-output spaces make it difficult for them to achieve an accurate understanding of content at arbitrary granularity. To this end, we present UniLSeg, a universal segmentation model that can perform segmentation at any semantic level with the guidance of language instructions. To train UniLSeg, we reorganize a group of tasks from their original diverse distributions into a unified data format, where images paired with texts describing the segmentation targets are the input and the corresponding masks are the output. Combined with an automatic annotation engine for utilizing large amounts of unlabeled data, UniLSeg achieves excellent performance on various tasks and settings, surpassing both specialist and unified segmentation models.
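The unified data format described in the abstract (image plus a text describing the target as input, a mask as output) can be illustrated with a minimal sketch. Everything named here is hypothetical and is not taken from the UniLSeg code: the `Sample` record, the two converter functions, and the use of nested lists for masks are assumptions chosen to keep the example self-contained. It shows only how annotations from two different tasks might be mapped onto the same (image, instruction, mask) triple.

```python
from dataclasses import dataclass
from typing import Dict, List

# H x W binary mask as nested lists (an assumption for this sketch;
# a real pipeline would likely use arrays or tensors).
Mask = List[List[bool]]


@dataclass
class Sample:
    """One unified record: (image, text instruction) -> mask. Hypothetical type."""
    image_id: str      # illustrative handle to the image
    instruction: str   # text describing the segmentation target
    mask: Mask         # binary mask of the described target


def from_semantic_labels(image_id: str, label_map: List[List[int]],
                         class_names: Dict[int, str]) -> List[Sample]:
    """Split a per-pixel class-id map into one sample per named class present.

    Class ids missing from class_names (e.g. background) are skipped.
    """
    present = sorted({cid for row in label_map for cid in row if cid in class_names})
    return [
        Sample(image_id, class_names[cid],
               [[pixel == cid for pixel in row] for row in label_map])
        for cid in present
    ]


def from_referring_expression(image_id: str, expression: str, mask: Mask) -> Sample:
    """A referring-expression annotation already fits the format; wrap it as-is."""
    return Sample(image_id, expression, mask)


# Usage: a toy 2x3 label map with two named classes (0 is unnamed background).
label_map = [[0, 0, 1],
             [0, 2, 1]]
samples = from_semantic_labels("img_0", label_map, {1: "dog", 2: "ball"})
samples.append(
    from_referring_expression("img_0", "the dog on the right", samples[0].mask)
)
```

In this sketch a semantic segmentation label map and a referring expression end up as records of the same type, so a single model interface could consume both; how UniLSeg actually constructs its instructions and handles each task is not specified in the abstract.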

Results from the Paper

Ranked #1 on Referring Expression Segmentation on RefCOCOg-test (using extra training data)

ImageNet

MS COCO

ADE20K

RefCOCO

PASCAL Context

SA-1B

Refer-YouTube-VOS
