UCMCTrack: Multi-Object Tracking with Uniform Camera Motion Compensation

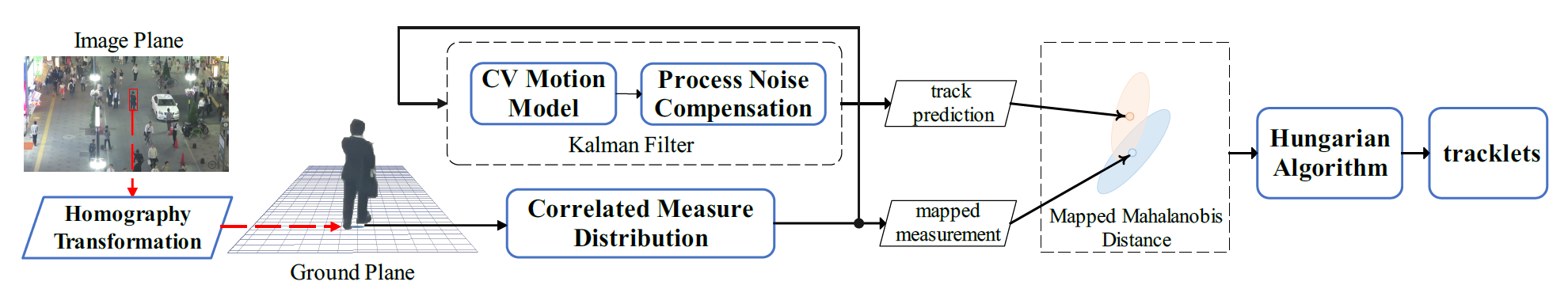

Multi-object tracking (MOT) in video sequences remains a challenging task, especially in scenarios with significant camera movements. This is because targets can drift considerably on the image plane, leading to erroneous tracking outcomes. Addressing such challenges typically requires supplementary appearance cues or Camera Motion Compensation (CMC). While these strategies are effective, they also introduce a considerable computational burden, posing challenges for real-time MOT. In response to this, we introduce UCMCTrack, a novel motion model-based tracker robust to camera movements. Unlike conventional CMC that computes compensation parameters frame-by-frame, UCMCTrack consistently applies the same compensation parameters throughout a video sequence. It employs a Kalman filter on the ground plane and introduces the Mapped Mahalanobis Distance (MMD) as an alternative to the traditional Intersection over Union (IoU) distance measure. By leveraging projected probability distributions on the ground plane, our approach efficiently captures motion patterns and adeptly manages uncertainties introduced by homography projections. Remarkably, UCMCTrack, relying solely on motion cues, achieves state-of-the-art performance across a variety of challenging datasets, including MOT17, MOT20, DanceTrack and KITTI. More details and code are available at https://github.com/corfyi/UCMCTrack
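The abstract describes associating detections with tracks on the ground plane using a Mahalanobis-style distance that accounts for uncertainty introduced by the homography projection. The snippet below is a minimal sketch of that idea, not the authors' implementation (see the linked repository for that). It assumes a known 3x3 image-to-ground homography `H`, a detection reduced to one pixel point `uv` with covariance `pixel_cov`, and first-order (Jacobian) propagation of that covariance onto the ground plane. The function names `image_to_ground` and `mapped_mahalanobis` are hypothetical.

```python
import numpy as np

def image_to_ground(H, uv):
    """Map a pixel (u, v) to ground-plane (x, y) with homography H.

    Returns the mapped point and the 2x2 Jacobian of the mapping,
    which is used to propagate pixel uncertainty (an assumed approach).
    """
    u, v = uv
    x, y, w = H @ np.array([u, v, 1.0])
    xy = np.array([x / w, y / w])
    # Derivative of (x/w, y/w) with respect to (u, v), by the quotient rule.
    J = np.array([
        [H[0, 0] / w - x * H[2, 0] / w**2, H[0, 1] / w - x * H[2, 1] / w**2],
        [H[1, 0] / w - y * H[2, 0] / w**2, H[1, 1] / w - y * H[2, 1] / w**2],
    ])
    return xy, J

def mapped_mahalanobis(H, uv, pixel_cov, track_xy, track_cov):
    """Squared Mahalanobis distance between a track's predicted ground
    position and a detection mapped onto the ground plane."""
    det_xy, J = image_to_ground(H, uv)
    det_cov = J @ pixel_cov @ J.T      # pixel noise projected to the ground
    S = track_cov + det_cov            # combined uncertainty of the residual
    r = det_xy - track_xy
    return float(r @ np.linalg.solve(S, r))
```

In a full tracker, `track_xy` and `track_cov` would come from the Kalman filter's predicted state, and the resulting distances would fill the cost matrix used for detection-to-track assignment in place of an IoU cost.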


Datasets

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Multi-Object Tracking | DanceTrack | UCMCTrack | HOTA | 63.6 | #10 |
| Multi-Object Tracking | DanceTrack | UCMCTrack | IDF1 | 65.0 | #11 |
| Multiple Object Tracking | KITTI Tracking test | UCMCTrack | MOTA | 90.4 | #1 |
| Multiple Object Tracking | KITTI Tracking test | UCMCTrack | HOTA | 77.1 | #1 |
| Multi-Object Tracking | MOT17 | UCMCTrack | MOTA | 80.5 | #4 |
| Multi-Object Tracking | MOT17 | UCMCTrack | IDF1 | 81.1 | #2 |
| Multi-Object Tracking | MOT17 | UCMCTrack | HOTA | 65.8 | #2 |
| Multi-Object Tracking | MOT20 | UCMCTrack | IDF1 | 77.4 | #5 |
| Multi-Object Tracking | MOT20 | UCMCTrack | HOTA | 62.8 | #6 |

KITTI

MOT17

MOTChallenge

DanceTrack

MOT20