SupeRGB-D: Zero-shot Instance Segmentation in Cluttered Indoor Environments

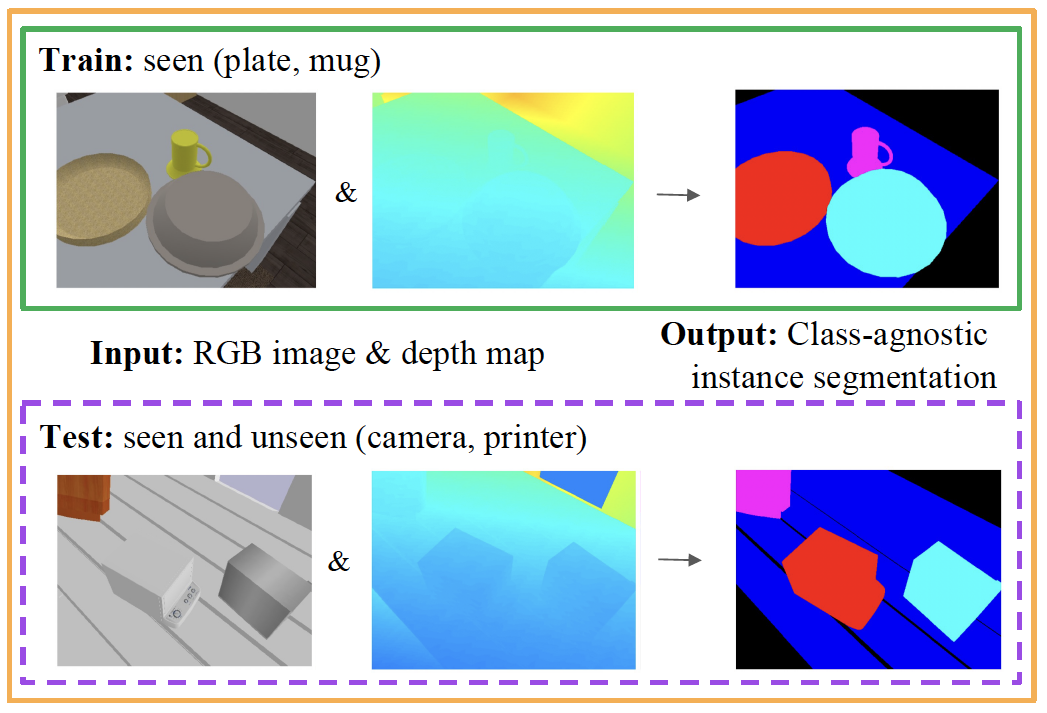

Object instance segmentation is a key challenge for indoor robots navigating cluttered environments with many small objects. Limitations in 3D sensing capabilities often make it difficult to detect every possible object. While deep learning approaches may be effective for this problem, manually annotating 3D data for supervised learning is time-consuming. In this work, we explore zero-shot instance segmentation (ZSIS) from RGB-D data to identify unseen objects in a semantic category-agnostic manner. We introduce a zero-shot split for the Tabletop Objects Dataset (TOD-Z) to enable this study and present a method that uses annotated objects to learn the "objectness" of pixels and generalize to unseen object categories in cluttered indoor environments. Our method, SupeRGB-D, groups pixels into small patches based on geometric cues and learns to merge the patches in a deep agglomerative clustering fashion. SupeRGB-D outperforms existing baselines on unseen objects while achieving similar performance on seen objects. We further show competitive results on the real-world OCID dataset. With its lightweight design (0.4 MB memory requirement), our method is well suited to mobile and robotic applications. Adding DINO features can further increase performance at the cost of a higher memory requirement. The dataset split and code are available at https://github.com/evinpinar/supergb-d.
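To make the patch-merging idea concrete, the following is a minimal sketch of greedy agglomerative clustering over an adjacency graph of patches. It is an illustration under stated assumptions, not the SupeRGB-D implementation: the function `agglomerative_merge`, the size-weighted feature averaging, the threshold of 0.5, and the distance-based `toy_score` are all hypothetical. In particular, `toy_score` stands in for the learned merge predictor, which the abstract does not specify.

```python
from typing import Callable, Dict, List, Set, Tuple


def agglomerative_merge(
    features: Dict[int, List[float]],
    edges: Set[Tuple[int, int]],
    score: Callable[[List[float], List[float]], float],
    threshold: float = 0.5,
) -> Dict[int, int]:
    """Greedily merge adjacent patches while some pair scores above threshold.

    Returns a map from each original patch id to its final cluster id.
    """
    feats = {k: list(v) for k, v in features.items()}
    sizes = {k: 1 for k in features}
    members = {k: [k] for k in features}
    adj: Dict[int, Set[int]] = {k: set() for k in features}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)

    while True:
        # Find the highest-scoring adjacent pair above the threshold.
        best, best_pair = threshold, None
        for a in adj:
            for b in adj[a]:
                if a < b:
                    s = score(feats[a], feats[b])
                    if s > best:
                        best, best_pair = s, (a, b)
        if best_pair is None:
            break

        a, b = best_pair
        # Merge b into a; the size-weighted mean is an assumed pooling rule.
        na, nb = sizes[a], sizes[b]
        feats[a] = [(na * x + nb * y) / (na + nb)
                    for x, y in zip(feats[a], feats[b])]
        sizes[a] = na + nb
        members[a].extend(members[b])
        # Rewire b's neighbours to point at a.
        for n in adj[b]:
            adj[n].discard(b)
            if n != a:
                adj[n].add(a)
                adj[a].add(n)
        del adj[b], feats[b], sizes[b], members[b]

    return {p: cid for cid, ps in members.items() for p in ps}


def toy_score(fa: List[float], fb: List[float]) -> float:
    """Hypothetical stand-in for a learned merge score: closer means higher."""
    dist = sum((x - y) ** 2 for x, y in zip(fa, fb)) ** 0.5
    return 1.0 / (1.0 + dist)


# Four patches in a chain; patches 0-1 and 2-3 have similar features.
patch_features = {0: [0.0], 1: [0.1], 2: [5.0], 3: [5.1]}
patch_edges = {(0, 1), (1, 2), (2, 3)}
labels = agglomerative_merge(patch_features, patch_edges, toy_score)
```

In this toy example the two similar pairs are merged, while the dissimilar middle edge stays below the threshold, so the four patches end up in two clusters. A learned scorer would replace `toy_score`, and the initial patches would come from geometric cues in the RGB-D input rather than hand-written features.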


SUNCG
