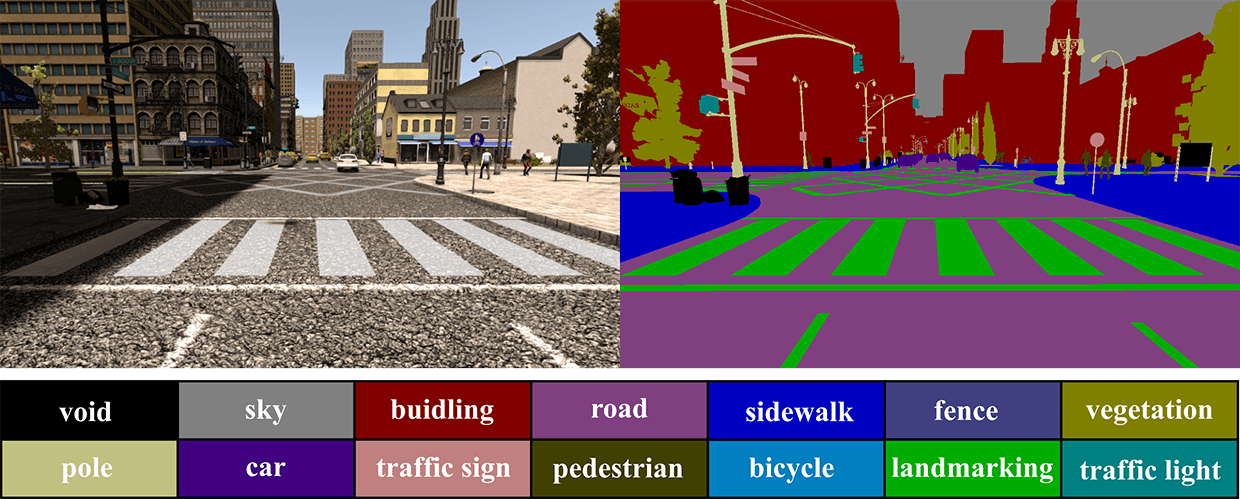

Semantic Segmentation of Panoramic Images Using a Synthetic Dataset

Panoramic images have advantages in information capacity and scene stability due to their large field of view (FoV). In this paper, we propose a method to synthesize a new dataset of panoramic images. We stitch images taken from different directions into panoramic images, together with their label images, to yield a panoramic semantic segmentation dataset named SYNTHIA-PANO. To determine the effect of using panoramic images as training data, we designed and performed a comprehensive set of experiments. Experimental results show that using panoramic images as training data benefits the segmentation result. In addition, the model performs better when trained on panoramic images with a 180-degree FoV. Furthermore, the model trained with panoramic images is also more robust to image distortion.

SYNTHIA-PANO

Cityscapes

SYNTHIA