Self-timed Reinforcement Learning using Tsetlin Machine

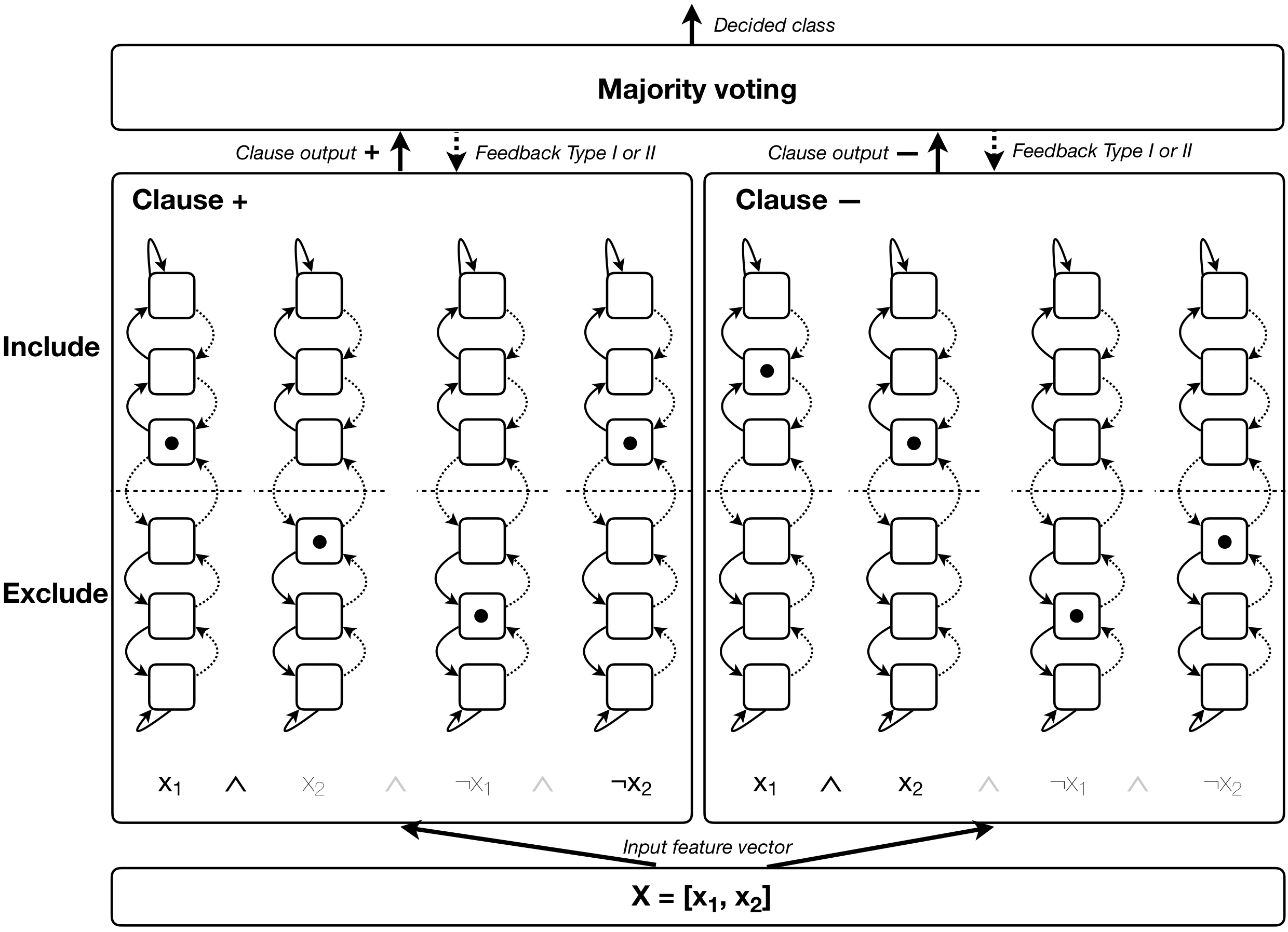

We present a hardware design for the learning datapath of the Tsetlin machine algorithm, along with a latency analysis of the inference datapath. To produce low-energy hardware suitable for pervasive artificial intelligence applications, we use a mixture of asynchronous design techniques, including Petri nets, signal transition graphs, dual-rail and bundled-data. The work builds on a previous design of the inference hardware and includes an in-depth breakdown of the automaton feedback, probability generation and Tsetlin automata. Results illustrate the advantages of asynchronous design in applications such as personalized healthcare and battery-powered internet of things devices, where energy is limited and latency is an important figure of merit. Challenges of static timing analysis in asynchronous circuits are also addressed.
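For readers unfamiliar with the Tsetlin automata mentioned above, the following is a minimal software sketch of a single two-action automaton with `2n` states. It is not the hardware design presented in the paper: the class name, the method names and the choice of initial state are assumptions made for illustration only, and the probability generation that decides when feedback is applied is left out.

```python
from dataclasses import dataclass


@dataclass
class TsetlinAutomaton:
    """Illustrative two-action Tsetlin automaton (hypothetical names).

    States 1..n select action 0 (exclude); states n+1..2n select action 1 (include).
    """
    n: int          # number of states per action
    state: int = 0  # 0 means "not set"; replaced in __post_init__

    def __post_init__(self) -> None:
        if self.state == 0:
            # Assumption: start at the decision boundary on the exclude side.
            self.state = self.n

    def action(self) -> int:
        """Return the currently selected action (0 or 1)."""
        return 1 if self.state > self.n else 0

    def reward(self) -> None:
        """Reinforce the current action by moving away from the boundary."""
        if self.state > self.n:
            self.state = min(2 * self.n, self.state + 1)
        else:
            self.state = max(1, self.state - 1)

    def penalize(self) -> None:
        """Weaken the current action by moving towards the other action."""
        if self.state > self.n:
            self.state -= 1
        else:
            self.state += 1


ta = TsetlinAutomaton(n=3)
ta.penalize()   # crosses the boundary from state 3 to state 4
ta.reward()     # moves deeper into the include side
print(ta.action(), ta.state)
```

A reward saturates at the end states, while a penalty at the boundary flips the selected action; this is the behaviour a learning datapath has to realise for every automaton.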
