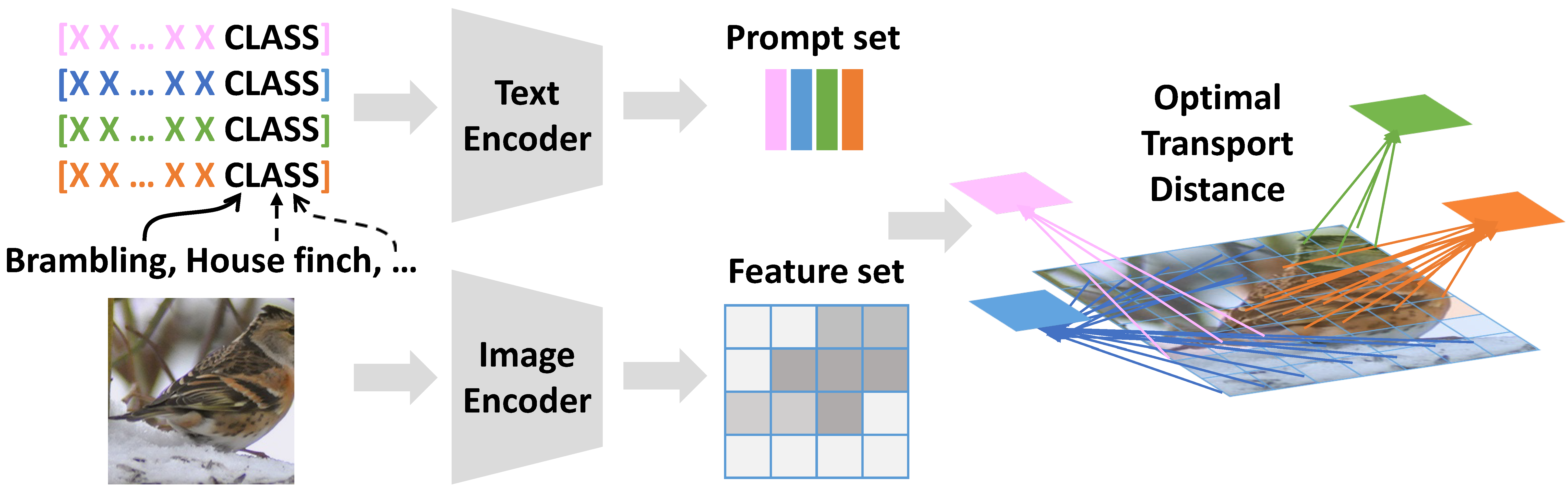

PLOT: Prompt Learning with Optimal Transport for Vision-Language Models

With growing attention on large vision-language models such as CLIP, considerable effort has been devoted to building efficient prompts. Unlike conventional methods that learn only a single prompt, we propose to learn multiple comprehensive prompts that describe diverse characteristics of a category, such as intrinsic attributes or extrinsic contexts. However, directly matching every prompt to the same visual feature is problematic, because it pushes the prompts to converge to a single point. To solve this problem, we apply optimal transport to match the vision and text modalities. Specifically, we first model each image and each category as a set of visual features and a set of textual features, respectively. We then learn the prompts with a two-stage optimization strategy. In the inner loop, we compute the optimal transport distance that aligns visual features with prompts using the Sinkhorn algorithm; in the outer loop, we learn the prompts by optimizing this distance on the supervised data. Extensive experiments on the few-shot recognition task show consistent improvements, demonstrating the superiority of our method. The code is available at https://github.com/CHENGY12/PLOT.
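The inner loop described above can be illustrated with a minimal sketch of entropy-regularized optimal transport between a set of visual features and a set of prompt features. This is not the authors' implementation: the function names (`cosine_cost`, `sinkhorn`, `ot_distance`), the cosine-based cost, the uniform marginals, and the values of `eps` and `n_iters` are all assumptions made for illustration. The outer loop (updating the prompts by gradient descent on this distance) is omitted.

```python
import math


def cosine_cost(visual, prompts):
    """Cost matrix C[i][j] = 1 - cos(visual[i], prompts[j]) (assumed cost)."""
    def normalize(vec):
        norm = math.sqrt(sum(x * x for x in vec))
        return [x / norm for x in vec]

    vis = [normalize(v) for v in visual]
    txt = [normalize(p) for p in prompts]
    return [[1.0 - sum(a * b for a, b in zip(v, t)) for t in txt] for v in vis]


def sinkhorn(cost, eps=0.1, n_iters=100):
    """Entropy-regularized transport plan via Sinkhorn scaling iterations."""
    m, n = len(cost), len(cost[0])
    mu = [1.0 / m] * m  # assumed uniform mass over visual features
    nu = [1.0 / n] * n  # assumed uniform mass over prompts
    kernel = [[math.exp(-c / eps) for c in row] for row in cost]
    u = [1.0] * m
    v = [1.0] * n
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [mu[i] / sum(kernel[i][j] * v[j] for j in range(n)) for i in range(m)]
        v = [nu[j] / sum(kernel[i][j] * u[i] for i in range(m)) for j in range(n)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(n)] for i in range(m)]


def ot_distance(visual, prompts, eps=0.1, n_iters=100):
    """Transport cost <plan, cost> between the two feature sets."""
    cost = cosine_cost(visual, prompts)
    plan = sinkhorn(cost, eps, n_iters)
    return sum(
        plan[i][j] * cost[i][j]
        for i in range(len(cost))
        for j in range(len(cost[0]))
    )


# Toy usage: three local visual features, two prompt features (made-up values).
visual_feats = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
prompt_feats = [[1.0, 0.1], [0.1, 1.0]]
distance = ot_distance(visual_feats, prompt_feats)
```

Because the transport plan spreads mass across prompts according to the marginals, each prompt is encouraged to align with a different subset of visual features rather than all prompts collapsing onto the same one, which is the motivation given above for using optimal transport instead of direct matching.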

ImageNet

UCF101

Oxford 102 Flower

Stanford Cars

DTD

Food-101

Caltech-101

EuroSAT

FGVC-Aircraft
