PonderV2: Pave the Way for 3D Foundation Model with A Universal Pre-training Paradigm

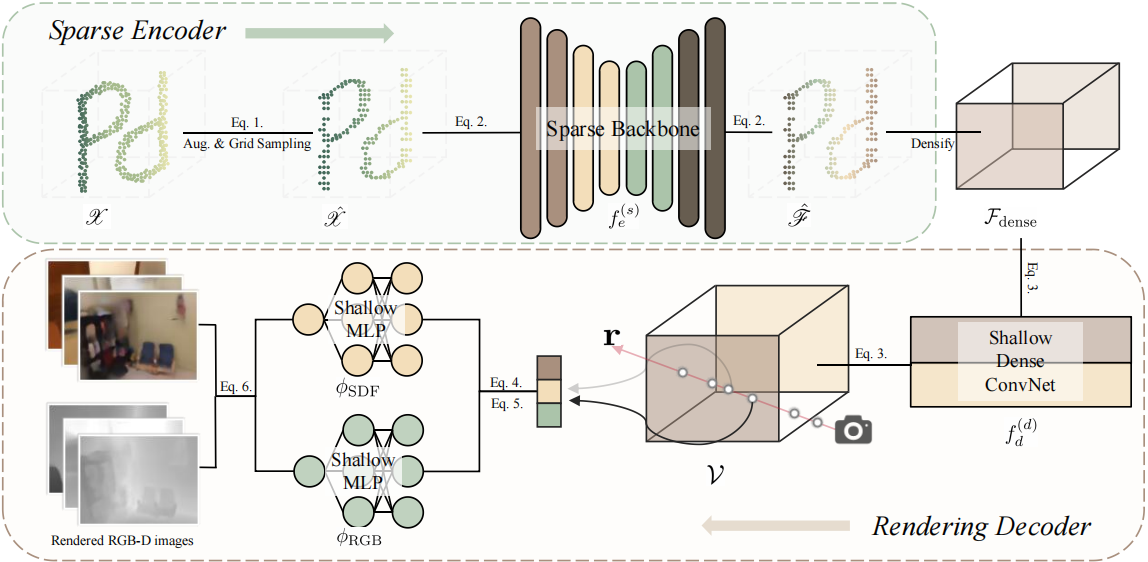

In contrast to the numerous foundation models in NLP and 2D vision, learning a 3D foundation model poses considerably greater challenges, primarily because of the inherent variability of 3D data and the diversity of downstream tasks. In this paper, we introduce a novel universal 3D pre-training framework designed to facilitate the acquisition of efficient 3D representations, thereby establishing a pathway to 3D foundation models. Since informative 3D features should encode rich geometry and appearance cues that can be used to render realistic images, we propose to learn 3D representations through differentiable neural rendering. We train a 3D backbone together with a devised volumetric neural renderer by comparing the rendered images with real images. Notably, the learned 3D encoder integrates seamlessly into various downstream tasks. These include not only high-level tasks such as 3D detection and segmentation but also low-level objectives such as 3D reconstruction and image synthesis, in both indoor and outdoor scenarios. We also show that the proposed method can pre-train a 2D backbone, surpassing conventional pre-training methods by a large margin. For the first time, PonderV2 achieves state-of-the-art performance on 11 indoor and outdoor benchmarks, indicating its effectiveness. Code and models are available at https://github.com/OpenGVLab/PonderV2.
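The core idea above is that a 3D representation is good if images rendered from it match real images. As a rough illustration only, the sketch below uses generic NeRF-style volume rendering along a single ray: per-sample features (standing in for features queried from a 3D backbone) are decoded into densities and colors, alpha-composited into one pixel color, and compared with a target pixel via a squared-error loss. The `decode` head, the weights `w_sigma`/`w_rgb`, the feature sizes and the MSE loss are all hypothetical assumptions for illustration; PonderV2's actual renderer, decoder and losses may differ, so consult the repository for the real implementation.

```python
import numpy as np


def decode(feats, w_sigma, w_rgb):
    """Hypothetical linear head: per-sample features -> (density, RGB).

    feats: (N, D) features sampled along a ray (assumed to come from a 3D backbone)
    """
    sigmas = np.logaddexp(0.0, feats @ w_sigma)          # softplus keeps density >= 0
    colors = 1.0 / (1.0 + np.exp(-(feats @ w_rgb)))      # sigmoid keeps RGB in (0, 1)
    return sigmas, colors


def render_ray(sigmas, colors, deltas):
    """Alpha-composite N samples along one ray (NeRF-style volume rendering).

    sigmas: (N,) non-negative densities; colors: (N, 3); deltas: (N,) sample spacings
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)              # opacity of each ray segment
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): fraction of light reaching sample i.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas                             # contribution of each sample
    rgb = (weights[:, None] * colors).sum(axis=0)        # composited pixel color
    return rgb, weights


def photometric_loss(rendered, target):
    """Mean squared error between rendered and real pixel colors (assumed loss)."""
    return float(np.mean((rendered - target) ** 2))


# Toy usage with random stand-in features; in pre-training, the gradient of this
# loss would flow back through the renderer into the 3D backbone.
rng = np.random.default_rng(0)
n_samples, feat_dim = 64, 16
feats = rng.normal(size=(n_samples, feat_dim))
w_sigma = rng.normal(size=feat_dim)
w_rgb = rng.normal(size=(feat_dim, 3))

sigmas, colors = decode(feats, w_sigma, w_rgb)
deltas = np.full(n_samples, 0.05)
rgb, weights = render_ray(sigmas, colors, deltas)
loss = photometric_loss(rgb, np.array([0.5, 0.5, 0.5]))
```

Because the whole pipeline is differentiable, an autodiff framework could minimize this loss with respect to the backbone parameters; NumPy is used here only to keep the sketch self-contained.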


Datasets

nuScenes

ScanNet

SUN RGB-D

S3DIS

Structured3D

Results from the Paper

Ranked #1 on 3D Semantic Segmentation on ScanNet++ (using extra training data)