MVP-Net: Multi-view FPN with Position-aware Attention for Deep Universal Lesion Detection

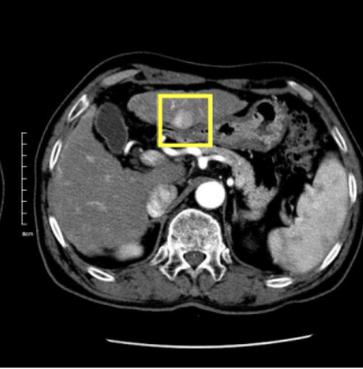

Universal lesion detection (ULD) on computed tomography (CT) images is an important but underdeveloped problem. Recently, deep learning-based approaches have been proposed for ULD, aiming to learn representative features from annotated CT data. However, the data hunger of deep learning models and the scarcity of medical annotations hinder these approaches from advancing further. In this paper, we propose to incorporate domain knowledge from clinical practice into the model design of universal lesion detectors. Specifically, as radiologists tend to inspect multiple windows for an accurate diagnosis, we explicitly model this process and propose a multi-view feature pyramid network (FPN), where multi-view features are extracted from images rendered with varied window widths and window levels; to effectively combine this multi-view information, we further propose a position-aware attention module. With the proposed model design, the data-hunger problem is relieved, as the learning task is made easier by the correctly induced clinical-practice prior. We show promising results with the proposed model, achieving an absolute gain of $\mathbf{5.65\%}$ (in sensitivity at FPs@4.0) over the previous state of the art on the NIH DeepLesion dataset.
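The multi-window rendering mentioned in the abstract can be illustrated with a minimal sketch of standard CT intensity windowing. It is not the authors' implementation: the function names `apply_window` and `multi_window_views`, and the window settings in `EXAMPLE_WINDOWS`, are assumptions chosen for illustration, since the abstract does not state which windows the model uses. Each window clips Hounsfield units (HU) to the range defined by a level (center) and a width, then rescales to [0, 1]; stacking several windows yields one "view" per window.

```python
import numpy as np

def apply_window(hu, level, width):
    """Clip HU values to [level - width/2, level + width/2] and rescale to [0, 1]."""
    lo = level - width / 2.0
    hi = level + width / 2.0
    return (np.clip(hu, lo, hi) - lo) / (hi - lo)

def multi_window_views(hu, windows):
    """Render one view per (level, width) pair and stack them along a new first axis."""
    return np.stack([apply_window(hu, level, width) for level, width in windows], axis=0)

# Illustrative (level, width) pairs only; these are assumed values,
# not the settings reported in the paper.
EXAMPLE_WINDOWS = [(50, 400), (-600, 1500), (40, 80)]

# A tiny 2x2 "slice" in Hounsfield units, used only to exercise the functions.
hu_slice = np.array([[-1000.0, -600.0],
                     [50.0, 400.0]])
views = multi_window_views(hu_slice, EXAMPLE_WINDOWS)
print(views.shape)  # one rendered view per window
```

In a multi-view detector of the kind the abstract describes, each rendered view would presumably be passed to its own feature-extraction branch before the views are combined; how that combination is done here is left to the paper itself.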


DeepLesion
