Metric3D: Towards Zero-shot Metric 3D Prediction from A Single Image

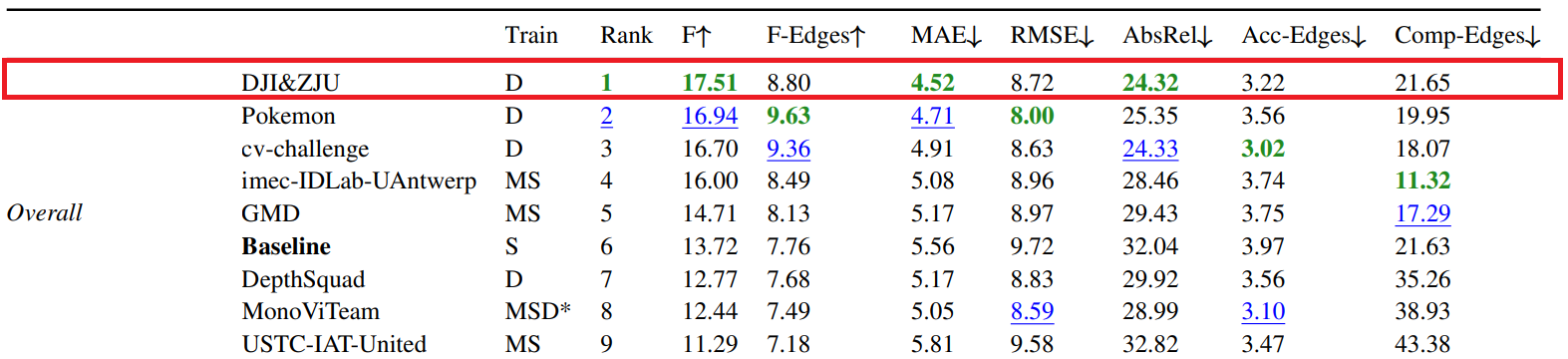

Reconstructing accurate 3D scenes from images is a long-standing vision task. Because single-image reconstruction is ill-posed, most well-established methods are built upon multi-view geometry. State-of-the-art (SOTA) monocular metric depth estimation methods can only handle a single camera model and cannot be trained on mixed data because of metric ambiguity. Meanwhile, SOTA monocular methods trained on large mixed datasets achieve zero-shot generalization by learning affine-invariant depths, which cannot recover real-world metrics. In this work, we show that the key to a zero-shot single-view metric depth model lies in combining large-scale data training with resolving the metric ambiguity across various camera models. We propose a canonical camera space transformation module, which explicitly addresses the ambiguity problem and can be effortlessly plugged into existing monocular models. Equipped with our module, monocular models can be stably trained on over 8 million images captured with thousands of camera models, resulting in zero-shot generalization to in-the-wild images with unseen camera settings. Experiments demonstrate SOTA performance of our method on 7 zero-shot benchmarks. Notably, our method won first place in the 2nd Monocular Depth Estimation Challenge. Our method enables accurate recovery of metric 3D structures from randomly collected internet images, paving the way for plausible single-image metrology. The benefits extend to downstream tasks, which can be significantly improved by simply plugging in our model. For example, our model mitigates the scale drift of monocular SLAM (Fig. 1), leading to high-quality metric-scale dense mapping. The code is available at https://github.com/YvanYin/Metric3D.
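The abstract does not spell out the form of the canonical camera space transformation, so the sketch below assumes one simple variant: during training, ground-truth depth is multiplied by the ratio of a fixed canonical focal length to the camera's actual focal length, and at inference the prediction is divided by the same ratio to return to metric depth. The canonical focal length of 1000 px and all function names are hypothetical, chosen only for illustration. The example also shows where the metric ambiguity comes from: under a pinhole model, a 2 m object 10 m from a 500 px camera and 20 m from a 1000 px camera produce the same 100 px image, yet after canonicalization both depth labels become the same value.

```python
# Minimal sketch of a focal-length-based canonical camera transform.
# ASSUMPTIONS: depth is rescaled by canonical_focal / focal_px; the value
# CANONICAL_FOCAL = 1000.0 and every function name here are hypothetical.

CANONICAL_FOCAL = 1000.0  # hypothetical canonical focal length, in pixels


def projected_height_px(object_height_m, depth_m, focal_px):
    """Pinhole projection: image height (px) of an object at a given depth."""
    return focal_px * object_height_m / depth_m


def to_canonical_depth(depth_m, focal_px, canonical_focal=CANONICAL_FOCAL):
    """Map metric depth labels from the real camera into the canonical space."""
    if focal_px <= 0:
        raise ValueError("focal length must be positive")
    scale = canonical_focal / focal_px
    return [d * scale for d in depth_m]


def from_canonical_depth(depth_c, focal_px, canonical_focal=CANONICAL_FOCAL):
    """Undo the canonical scaling to recover metric depth for the real camera."""
    if focal_px <= 0:
        raise ValueError("focal length must be positive")
    scale = canonical_focal / focal_px
    return [d / scale for d in depth_c]


if __name__ == "__main__":
    # Same 2 m object seen by two cameras: identical image size,
    # but different true depths, so appearance alone is ambiguous.
    print(projected_height_px(2.0, 10.0, 500.0))   # 100.0
    print(projected_height_px(2.0, 20.0, 1000.0))  # 100.0

    # After canonicalization, both labels agree with the shared appearance.
    print(to_canonical_depth([10.0], 500.0))   # [20.0]
    print(to_canonical_depth([20.0], 1000.0))  # [20.0]

    # At inference, a canonical prediction is mapped back per camera.
    print(from_canonical_depth([20.0], 500.0))   # [10.0]
    print(from_canonical_depth([20.0], 1000.0))  # [20.0]
```

Because this scaling depends only on camera intrinsics, it wraps a network's training targets and outputs without touching the network itself, which is consistent with the abstract's description of a module that plugs into existing monocular models.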

ICCV 2023

Results from the Paper

Ranked #19 on Monocular Depth Estimation on NYU-Depth V2 (using extra training data)

Datasets

Cityscapes, KITTI, nuScenes, ScanNet, NYUv2, Taskonomy, DIODE, DDAD, DrivingStereo, PandaSet, iBims-1