LLaMA-VID: An Image is Worth 2 Tokens in Large Language Models

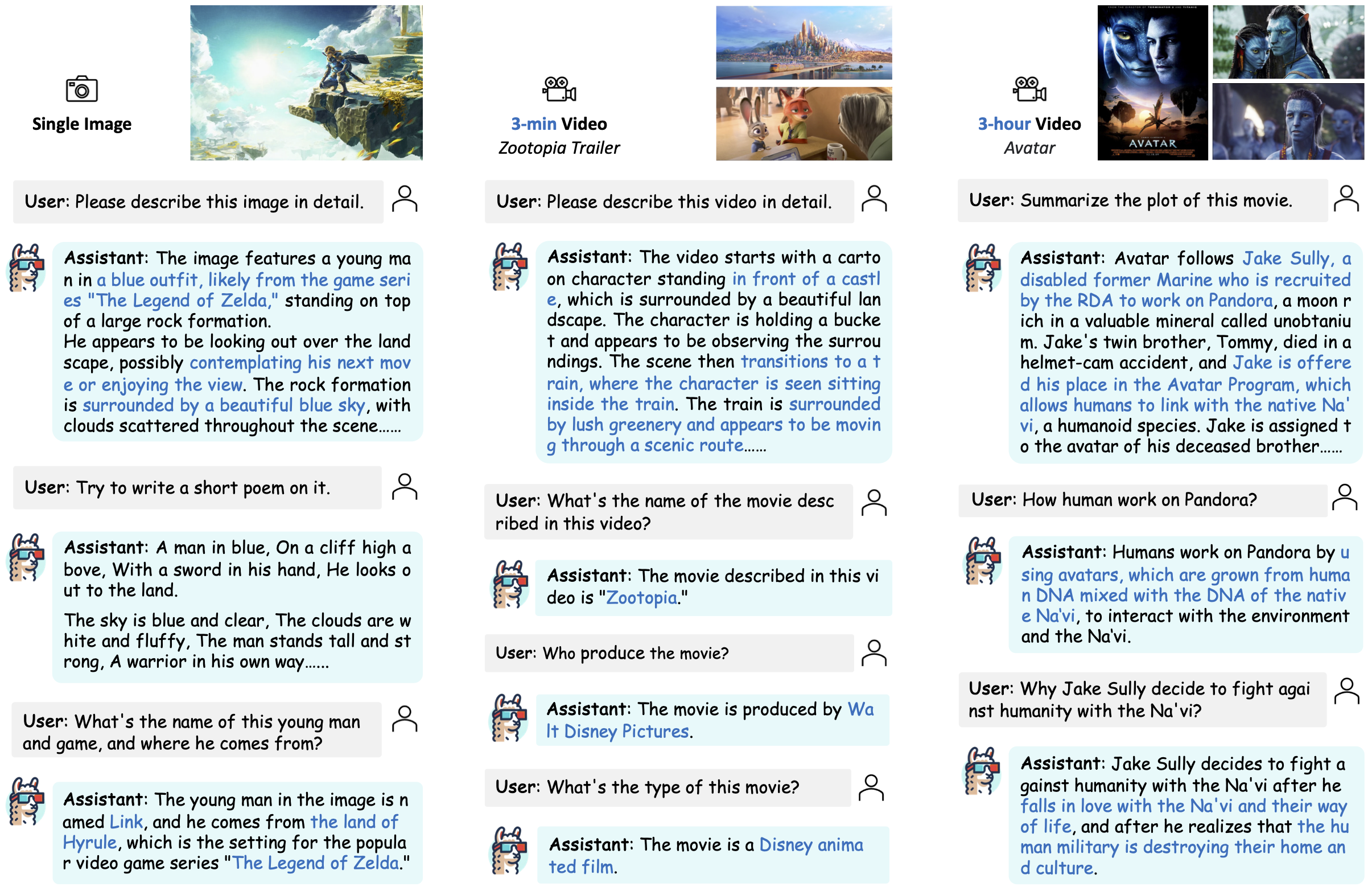

In this work, we present LLaMA-VID, a novel method to tackle the token generation challenge in Vision Language Models (VLMs) for video and image understanding. Current VLMs, while proficient in tasks like image captioning and visual question answering, face heavy computational burdens when processing long videos because of the excessive number of visual tokens. LLaMA-VID addresses this issue by representing each frame with two distinct tokens: a context token and a content token. The context token encodes the overall image context based on user input, whereas the content token encapsulates visual cues in each frame. This dual-token strategy significantly reduces the overload of long videos while preserving critical information. Generally, LLaMA-VID empowers existing frameworks to support hour-long videos and pushes their upper limit with an extra context token. It is shown to surpass previous methods on most video- or image-based benchmarks. Code is available at https://github.com/dvlab-research/LLaMA-VID
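The dual-token idea can be illustrated with a small sketch. The abstract does not say how the two tokens are computed, so the pooling choices here are assumptions made for illustration only: the context token is a query-weighted (attention-style) average of a frame's patch features, and the content token is a plain mean over the same patches. All function names are hypothetical and are not taken from the LLaMA-VID codebase.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def content_token(patches):
    """Assumed content token: mean of all patch features in the frame."""
    dim = len(patches[0])
    return [sum(p[d] for p in patches) / len(patches) for d in range(dim)]


def context_token(patches, query):
    """Assumed context token: patches averaged with weights derived from
    their scaled dot-product similarity to the user-query embedding."""
    scale = math.sqrt(len(query))
    scores = [sum(q * x for q, x in zip(query, p)) / scale for p in patches]
    weights = softmax(scores)
    dim = len(patches[0])
    return [sum(w * p[d] for w, p in zip(weights, patches)) for d in range(dim)]


def frame_tokens(patches, query):
    """Represent one frame with exactly two tokens: [context, content]."""
    return [context_token(patches, query), content_token(patches)]


def video_token_count(num_frames, tokens_per_frame=2):
    """Total visual tokens passed to the language model for a video."""
    return num_frames * tokens_per_frame


if __name__ == "__main__":
    # Toy frame with two 2-dimensional patch features and a toy query.
    patches = [[1.0, 0.0], [0.0, 1.0]]
    query = [10.0, 0.0]
    print(frame_tokens(patches, query))
    # An hour of video sampled at an assumed 1 frame per second.
    print(video_token_count(3600))
```

Under the assumed sampling rate of one frame per second, an hour-long video contributes 3600 × 2 = 7200 visual tokens, which shows why the per-frame token count dominates the cost of long-video inputs.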

Visual Question Answering

ActivityNet

MSR-VTT

GQA

Visual Question Answering v2.0

MSVD

VizWiz

ScienceQA

ActivityNet-QA

MovieNet
