GQA

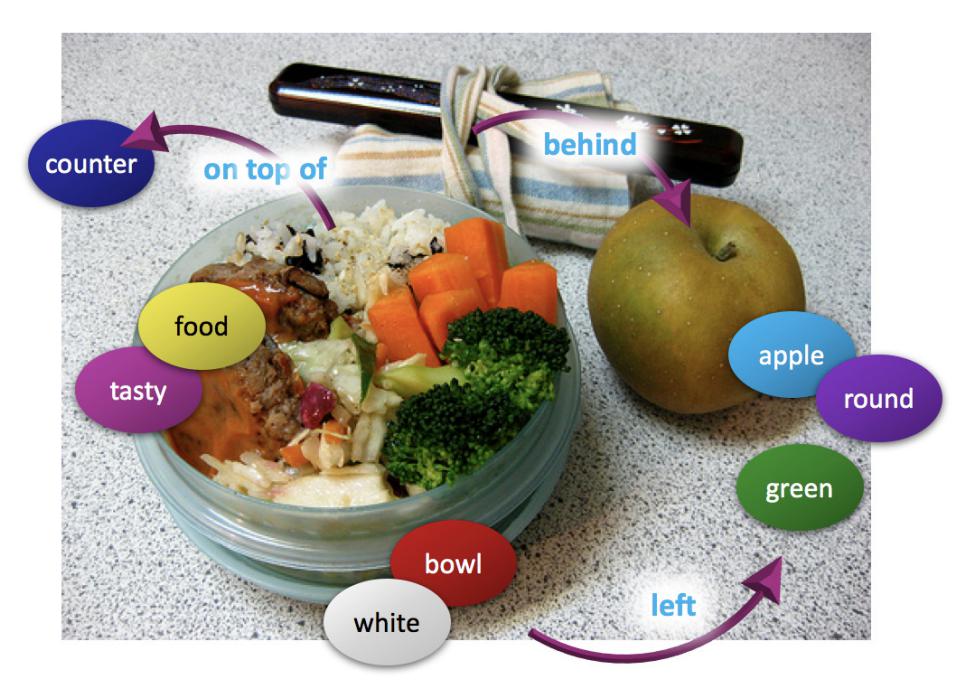

Introduced by Hudson et al. in GQA: A New Dataset for Real-World Visual Reasoning and Compositional Question AnsweringThe GQA dataset is a large-scale visual question answering dataset with real images from the Visual Genome dataset and balanced question-answer pairs. Each training and validation image is also associated with scene graph annotations describing the classes and attributes of those objects in the scene, and their pairwise relations. Along with the images and question-answer pairs, the GQA dataset provides two types of pre-extracted visual features for each image – convolutional grid features of size 7×7×2048 extracted from a ResNet-101 network trained on ImageNet, and object detection features of size Ndet×2048 (where Ndet is the number of detected objects in each image with a maximum of 100 per image) from a Faster R-CNN detector.

Source: Language-Conditioned Graph Networks for Relational ReasoningBenchmarks

Papers

| Paper | Code | Results | Date | Stars |

|---|