Dynamic Emotion Modeling with Learnable Graphs and Graph Inception Network

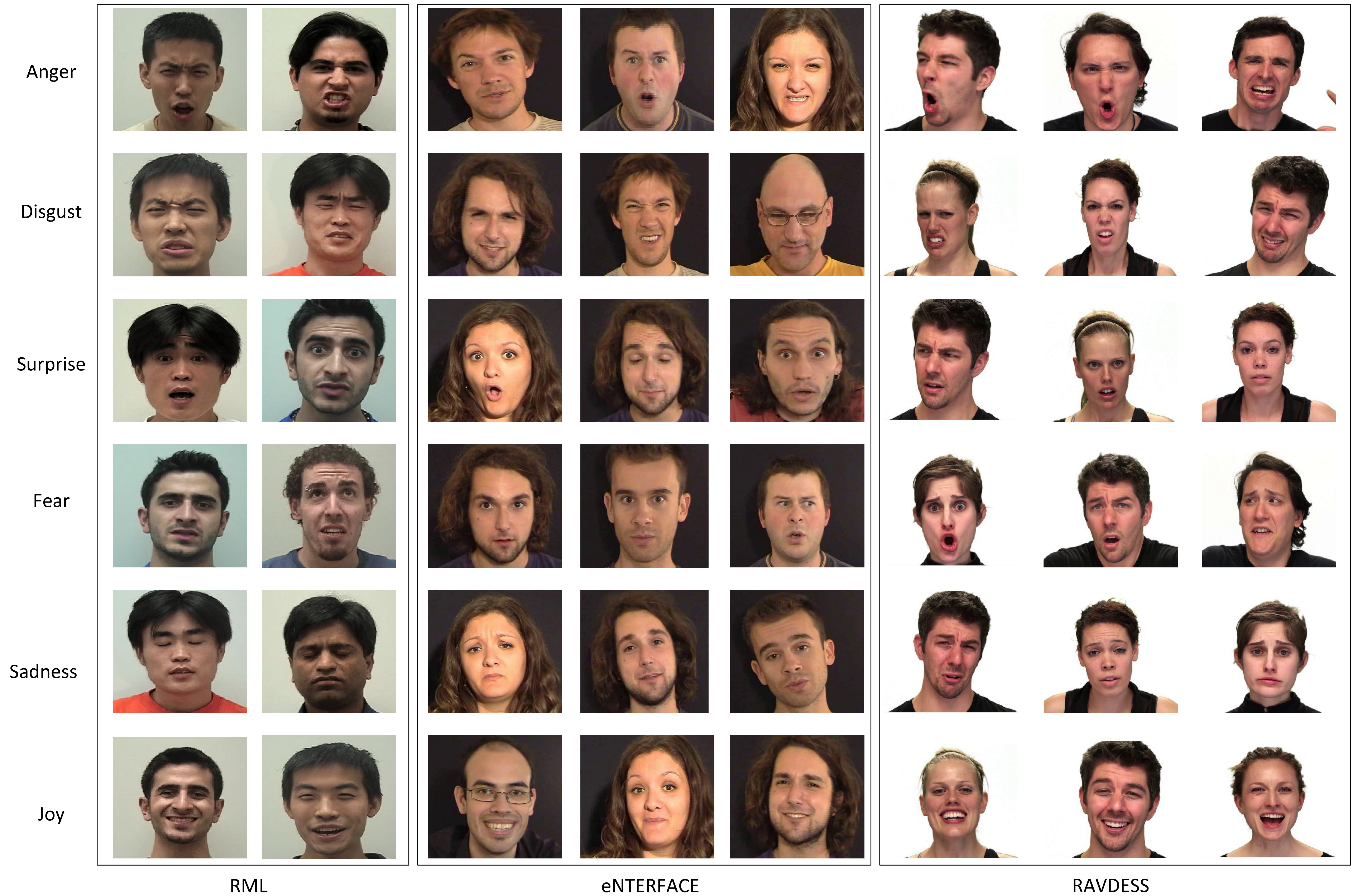

Human emotion is expressed, perceived and captured using a variety of dynamic data modalities, such as speech (verbal), videos (facial expressions) and motion sensors (body gestures). We propose a generalized approach to emotion recognition that can adapt across modalities by modeling dynamic data as structured graphs. The motivation behind the graph approach is to build compact models without compromising on performance. To alleviate the problem of optimal graph construction, we cast emotion recognition as a joint graph learning and classification task. To this end, we present the Learnable Graph Inception Network (L-GrIN), which jointly learns to recognize emotion and to identify the underlying graph structure in the dynamic data. Our architecture comprises multiple novel components: a new graph convolution operation, a graph inception layer, learnable adjacency, and a learnable pooling function that yields a graph-level embedding. We evaluate the proposed architecture on five benchmark emotion recognition databases spanning three different modalities (video, audio, motion capture), where each database captures one of the following emotional cues: facial expressions, speech and body gestures. We achieve state-of-the-art performance on all five databases, outperforming several competitive baselines and relevant existing methods. Our graph architecture achieves this performance with significantly fewer parameters than convolutional or recurrent neural networks, which makes it promising for resource-constrained devices.
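To make the idea of a graph-level embedding concrete, the following is a minimal sketch and not the L-GrIN architecture itself. It assumes each frame of a dynamic sequence is a node, initializes the adjacency as a line graph over temporal neighbours, applies one standard symmetric-normalized graph convolution (swapped in for the paper's own convolution operation, which the abstract does not specify), and pools nodes with a weighted sum. All names (`normalize_adjacency`, `graph_embedding`, `pool`) and dimensions are hypothetical; in a trained model `adj`, `weight` and `pool` would be updated by gradient descent, which is omitted here.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def normalize_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2}.

    Assumes non-negative adjacency entries so that every degree is positive.
    """
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def graph_embedding(features, adj, weight, pool):
    """One graph convolution with ReLU, then weighted-sum pooling over nodes."""
    hidden = matmul(matmul(normalize_adjacency(adj), features), weight)
    hidden = [[max(0.0, v) for v in row] for row in hidden]  # ReLU
    # pool holds one weight per node; the result is a single graph-level vector
    return [sum(p * row[k] for p, row in zip(pool, hidden)) for k in range(len(hidden[0]))]

random.seed(0)
num_frames, in_dim, out_dim = 4, 3, 2  # illustrative sizes only

# Node features: one feature vector per frame (random stand-ins for real data)
features = [[random.random() for _ in range(in_dim)] for _ in range(num_frames)]

# Adjacency initialized as a line graph: each frame linked to its temporal neighbours
adj = [[1.0 if abs(i - j) == 1 else 0.0 for j in range(num_frames)]
       for i in range(num_frames)]

# Parameters that would be learned jointly with `adj` in a real model
weight = [[random.uniform(-1, 1) for _ in range(out_dim)] for _ in range(in_dim)]
pool = [1.0 / num_frames] * num_frames  # uniform pooling weights as a starting point

embedding = graph_embedding(features, adj, weight, pool)
print(embedding)
```

The resulting vector has one entry per output channel and would be passed to a classifier over emotion labels. Treating the adjacency as a parameter rather than a fixed input is what lets the graph structure be learned along with the classifier.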

IEMOCAP
