IEMOCAP (The Interactive Emotional Dyadic Motion Capture Database)

Introduced by Carlos Busso et al. in *IEMOCAP: interactive emotional dyadic motion capture database*

The IEMOCAP dataset, used for multimodal emotion recognition, consists of 151 videos of recorded dialogues, with 2 speakers per session, for a total of 302 videos across the dataset. Each segment is annotated for the presence of 9 emotions (angry, excited, fear, sad, surprised, frustrated, happy, disappointed, and neutral) as well as for valence, arousal, and dominance. The dataset was recorded across 5 sessions with 5 pairs of speakers.

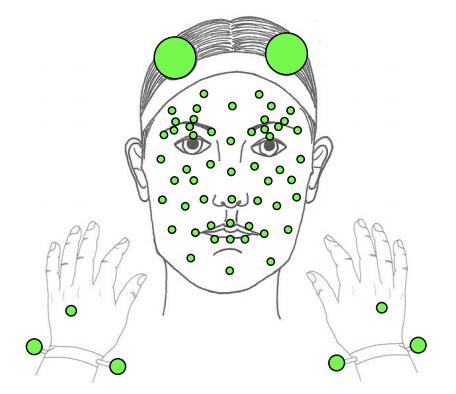

Source: Multi-attention Recurrent Network for Human Communication Comprehension
