Joint Audio and Speech Understanding

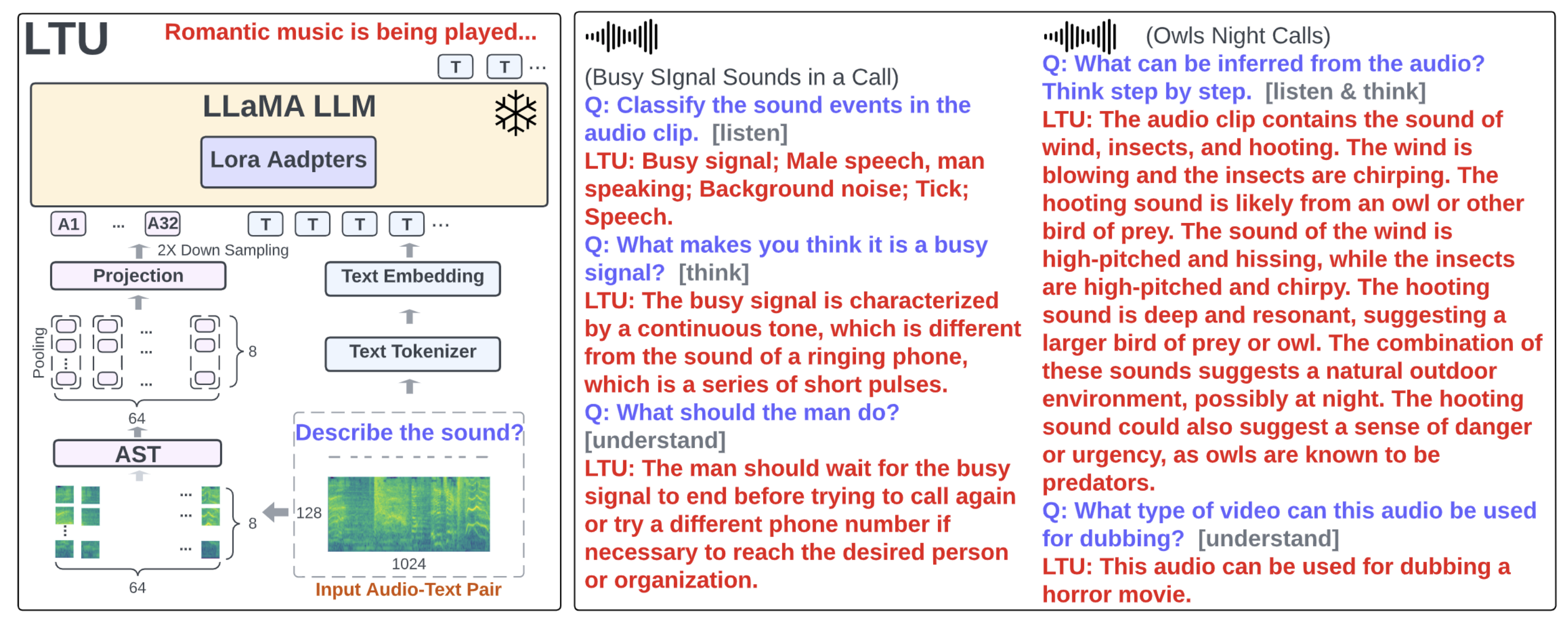

Humans are surrounded by audio signals that include both speech and non-speech sounds. The recognition and understanding of speech and non-speech audio events, along with a profound comprehension of the relationship between them, constitute fundamental cognitive capabilities. For the first time, we build a machine learning model, called LTU-AS, that has conceptually similar universal audio perception and advanced reasoning abilities. Specifically, by integrating Whisper as a perception module and LLaMA as a reasoning module, LTU-AS can simultaneously recognize and jointly understand spoken text, speech paralinguistics, and non-speech audio events: almost everything perceivable from audio signals.

IEMOCAP

AudioSet

VoxCeleb2

AudioCaps

FSD50K
