Improving the Fairness of Deep Generative Models without Retraining

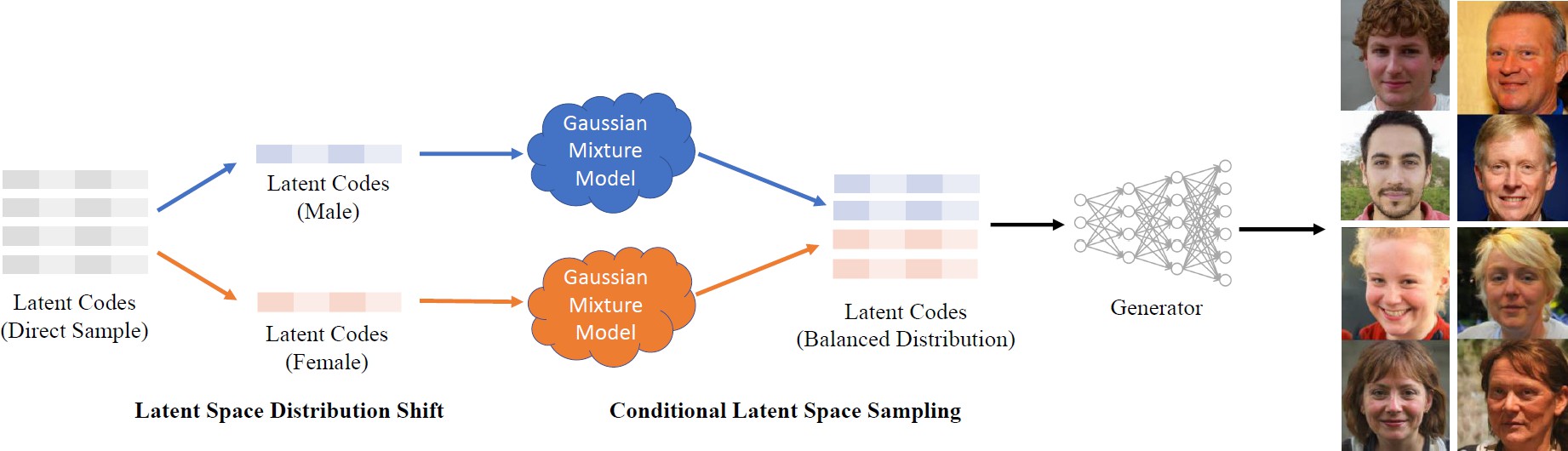

Generative Adversarial Networks (GANs) advance face synthesis by learning the underlying distribution of observed data. Although the generated faces are of high quality, some minority groups are rarely generated by the trained models because the image generation process is biased. To study the issue, we first conduct an empirical study on a pre-trained face synthesis model. We observe that, after training, the GAN model not only carries the biases in the training data but also amplifies them to some degree in the image generation process. To improve the fairness of image generation, we propose an interpretable baseline method that balances the output facial attributes without retraining. The proposed method shifts the interpretable semantic distribution in the latent space to produce more balanced image generation while preserving sample diversity. Besides producing more balanced data with respect to a particular attribute (e.g., race or gender), our method generalizes to more than one attribute at a time and can synthesize samples of fine-grained subgroups. We further show that the balanced data sampled from GANs can be used to quantify the biases in other face recognition systems, such as commercial face attribute classifiers and face super-resolution algorithms.
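
The abstract does not spell out how the latent distribution is shifted, so the following is only a toy sketch of the general idea, not the paper's method. It assumes a hypothetical linear attribute probe (`w`, `b`) on a standard Gaussian latent space and no actual GAN: latent codes are translated along the probe direction until the attribute is predicted for about half of the samples, while the spread of the codes is left unchanged.

```python
import numpy as np

def attribute_rate(z, w, b):
    """Fraction of latent codes that the linear probe assigns to the positive class."""
    return float(np.mean(z @ w + b > 0))

def shift_to_balance(z, w, b):
    """Translate every code along w so the probe boundary passes through the prior mean.

    After the shift, w . z' + b equals w . z, which is symmetric around zero
    for a zero-mean Gaussian prior.
    """
    return z - (b / np.dot(w, w)) * w

rng = np.random.default_rng(0)
dim = 64                       # hypothetical latent dimensionality
w = rng.normal(size=dim)
w /= np.linalg.norm(w)         # hypothetical attribute direction (unit norm)
b = -1.0                       # hypothetical offset: positive class is under-represented

z = rng.normal(size=(20000, dim))          # samples from the latent prior
rate_before = attribute_rate(z, w, b)      # skewed toward the negative class
z_shifted = shift_to_balance(z, w, b)
rate_after = attribute_rate(z_shifted, w, b)

# A pure translation leaves the per-dimension spread of the codes unchanged.
spread_preserved = np.allclose(z.std(axis=0), z_shifted.std(axis=0))
print(rate_before, rate_after, spread_preserved)
```

In a real setting the shifted codes would be passed to the pre-trained generator, and the probe would be replaced by whatever attribute model is fitted in the latent space; handling several attributes at once would need more than a single translation.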

FFHQ
