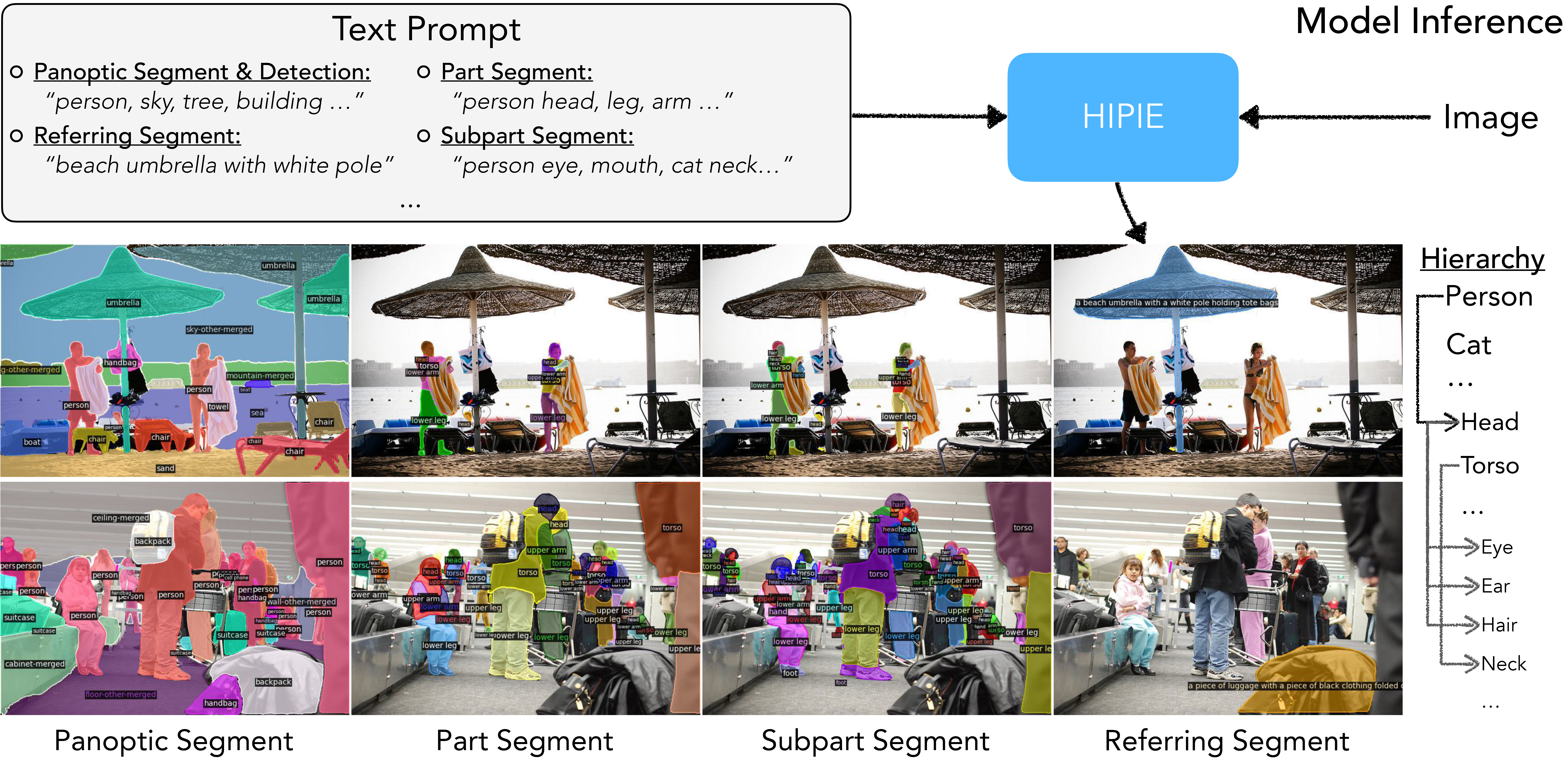

Hierarchical Open-vocabulary Universal Image Segmentation

Open-vocabulary image segmentation aims to partition an image into semantic regions according to arbitrary text descriptions. However, complex visual scenes can be naturally decomposed into simpler parts and abstracted at multiple levels of granularity, introducing inherent segmentation ambiguity. Unlike existing methods that typically sidestep this ambiguity and treat it as an external factor, our approach actively incorporates a hierarchical representation encompassing different semantic levels into the learning process. We propose a decoupled text-image fusion mechanism and representation learning modules for both "things" and "stuff". Additionally, we systematically examine the differences between the textual and visual features of these two types of categories. Our resulting model, named HIPIE, tackles HIerarchical, oPen-vocabulary, and unIvErsal segmentation tasks within a unified framework. Benchmarked on over 40 datasets, e.g., ADE20K, COCO, Pascal-VOC Part, RefCOCO/RefCOCOg, ODinW, and SeginW, HIPIE achieves state-of-the-art results at various levels of image comprehension, including semantic-level (e.g., semantic segmentation), instance-level (e.g., panoptic/referring segmentation and object detection), and part-level (e.g., part/subpart segmentation) tasks. Our code is released at https://github.com/berkeley-hipie/HIPIE.
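To make "segmenting according to arbitrary text descriptions" concrete, the toy sketch below labels each region with the vocabulary entry whose text embedding is most similar to the region's embedding. It is not HIPIE's architecture: the function names (`cosine`, `label_regions`) and the 3-dimensional embeddings are hypothetical stand-ins for what a real text and image encoder would produce. The vocabulary mixes a "thing" (`cat`), a "stuff" class (`grass`), and a part (`cat ear`) only to echo the levels of granularity the abstract mentions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def label_regions(region_embeddings, text_embeddings):
    """Assign each region the vocabulary name with the highest cosine similarity.

    region_embeddings: list of vectors, one per segmented region (assumed given).
    text_embeddings:   dict mapping a free-form class name to its vector.
    """
    labels = []
    for emb in region_embeddings:
        best = max(text_embeddings, key=lambda name: cosine(emb, text_embeddings[name]))
        labels.append(best)
    return labels


# Hypothetical embeddings; a real system would obtain these from trained encoders.
vocab = {
    "cat": [1.0, 0.0, 0.0],
    "grass": [0.0, 1.0, 0.0],
    "cat ear": [0.7, 0.0, 0.7],
}
regions = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.6, 0.0, 0.8]]

print(label_regions(regions, vocab))  # ['cat', 'grass', 'cat ear']
```

Because the vocabulary is just a dictionary of text embeddings, new class names can be added at inference time without retraining the classifier, which is the property that "open-vocabulary" refers to.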

NeurIPS 2023

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Panoptic Segmentation | COCO minival | HIPIE (ViT-H, single-scale) | PQ | 58.1 | #7 |
| Panoptic Segmentation | COCO minival | HIPIE (ViT-H, single-scale) | mIoU | 66.8 | #5 |
| Image Segmentation | Pascal Panoptic Parts | HIPIE (ViT-H) | mIoUPartS | 63.8 | #1 |
| Image Segmentation | Pascal Panoptic Parts | HIPIE (ResNet-50) | mIoUPartS | 57.2 | #3 |
| Referring Expression Segmentation | RefCOCO val | HIPIE | Overall IoU | 82.8 | #1 |
| Referring Expression Segmentation | RefCOCO+ val | HIPIE | Overall IoU | 73.9 | #1 |
| Zero Shot Segmentation | Segmentation in the Wild | HIPIE | Mean AP | 41.6 | #4 |
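For readers unfamiliar with the PQ metric in the table, the sketch below computes panoptic quality for a single class using the standard definition: predicted and ground-truth segments are matched when their IoU exceeds 0.5, and PQ is the sum of matched IoUs divided by |TP| + 0.5|FP| + 0.5|FN|. This is a minimal illustration, not the official COCO panoptic evaluation code. Representing segments as sets of pixel ids and the function names `iou` and `panoptic_quality` are assumptions made for the example. Benchmark tables usually report the value averaged over classes and multiplied by 100.

```python
def iou(a, b):
    """Intersection over union of two segments given as sets of pixel ids."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def panoptic_quality(pred_segments, gt_segments):
    """Panoptic quality for one class.

    A prediction and a ground-truth segment match when IoU > 0.5. With
    non-overlapping segments, that threshold makes the matching unique.
    """
    matched_gt = set()
    iou_sum = 0.0
    tp = 0
    for pred in pred_segments:
        for j, gt in enumerate(gt_segments):
            if j in matched_gt:
                continue
            value = iou(pred, gt)
            if value > 0.5:
                matched_gt.add(j)
                iou_sum += value
                tp += 1
                break
    fp = len(pred_segments) - tp  # predictions with no match
    fn = len(gt_segments) - tp    # ground-truth segments with no match
    denom = tp + 0.5 * fp + 0.5 * fn
    return iou_sum / denom if denom else 0.0


# Toy example: one good match (IoU 0.75), one spurious prediction, one missed segment.
gt = [{0, 1, 2, 3}, {4, 5, 6, 7}]
pred = [{0, 1, 2}, {8, 9}]
print(panoptic_quality(pred, gt))  # 0.375
```

In the toy example the single true positive contributes an IoU of 0.75, while the false positive and the false negative each add 0.5 to the denominator, giving 0.75 / 2 = 0.375.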

Datasets: MS COCO, RefCOCO, PASCAL Context, SA-1B, Segmentation in the Wild, Pascal Panoptic Parts