DyStyle: Dynamic Neural Network for Multi-Attribute-Conditioned Style Editing

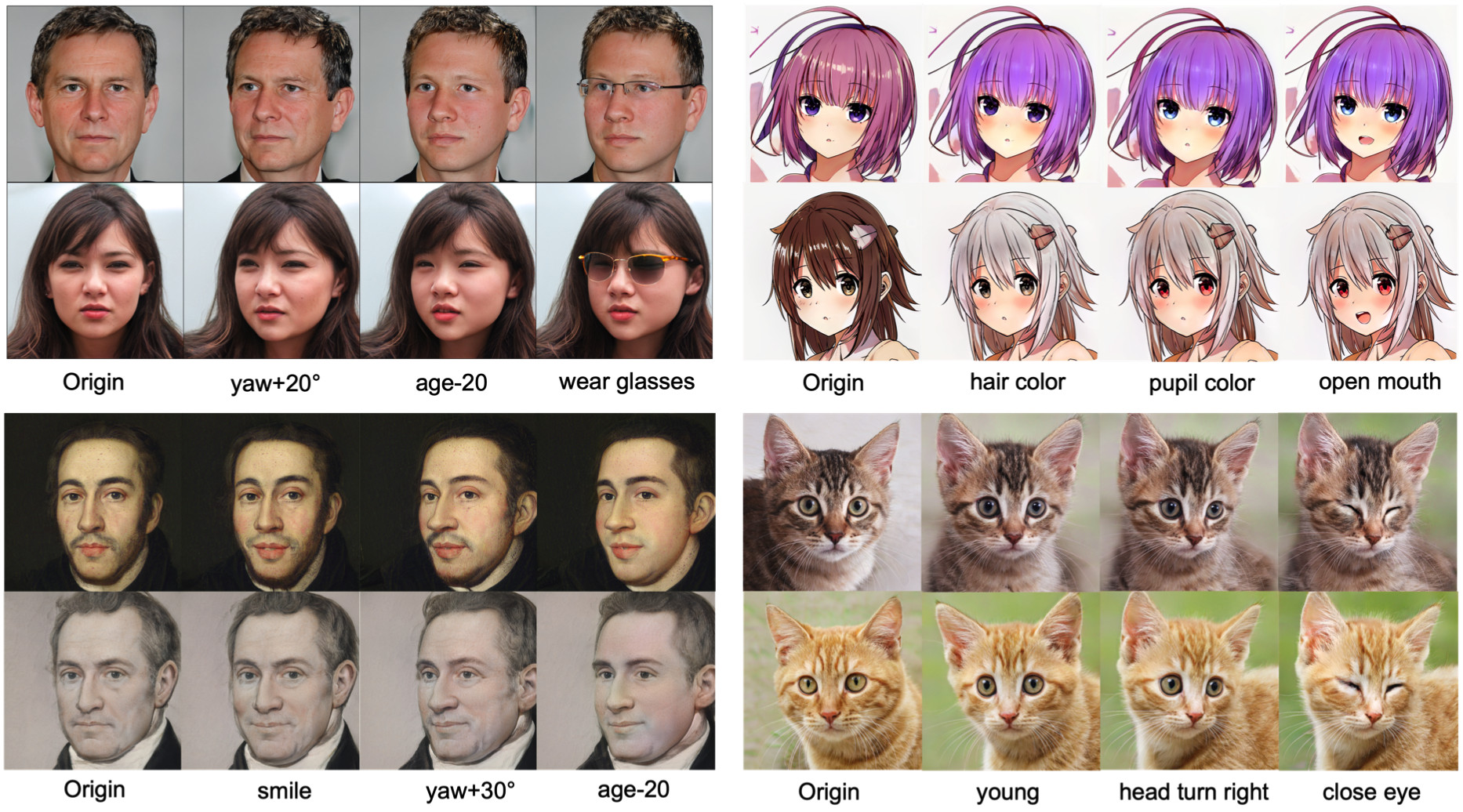

The semantic controllability of StyleGAN has steadily improved through ongoing research. Although existing weakly supervised methods work well when manipulating style codes along a single attribute, the accuracy of manipulating multiple attributes has been neglected. Multi-attribute representations are prone to entanglement in the StyleGAN latent space, while sequential editing leads to error accumulation. To address these limitations, we design a Dynamic Style Manipulation Network (DyStyle) whose structure and parameters vary with the input sample, so that it can perform nonlinear and adaptive manipulation of latent codes for flexible and precise attribute control. To optimize the DyStyle network efficiently and stably, we propose a Dynamic Multi-Attribute Contrastive Learning (DmaCL) method, comprising a dynamic multi-attribute contrastor and a dynamic multi-attribute contrastive loss, which simultaneously disentangles a variety of attributes in both the generated image and the latent space of the model. As a result, our approach achieves fine-grained, disentangled edits along multiple numeric and binary attributes. Qualitative and quantitative comparisons with existing style manipulation methods verify the superiority of our method in multi-attribute control accuracy and identity preservation, without compromising photorealism.
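To make the idea of a network "whose structure varies with the input sample" concrete, the following is a minimal sketch in plain Python, not the authors' architecture. It assumes one small branch per attribute and executes only the branches whose attribute is actually being edited, so the computation graph differs from sample to sample. The names `AttributeExpert` and `DynamicEditor`, the tanh offset, and the attribute names are all hypothetical and chosen only for illustration.

```python
import math
import random


class AttributeExpert:
    """Hypothetical per-attribute branch: maps (latent, target) to a latent offset."""

    def __init__(self, dim, rng):
        # Small random weights stand in for learned parameters (assumption).
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(dim)]
        self.target_scale = [rng.uniform(-0.1, 0.1) for _ in range(dim)]
        self.calls = 0  # counts how often this branch was executed

    def __call__(self, latent, target):
        self.calls += 1
        # Nonlinear offset that depends on both the latent code and the target value.
        return [
            math.tanh(sum(w * x for w, x in zip(row, latent)) + s * target)
            for row, s in zip(self.weights, self.target_scale)
        ]


class DynamicEditor:
    """Hypothetical editor whose active branches depend on the requested edits."""

    def __init__(self, attribute_names, dim, seed=0):
        rng = random.Random(seed)
        self.experts = {name: AttributeExpert(dim, rng) for name in attribute_names}

    def edit(self, latent, targets):
        edited = list(latent)
        for name, target in targets.items():
            if target is None:
                # Attribute not edited: its branch is skipped entirely.
                continue
            # All offsets are computed from the original latent, not applied in sequence.
            offset = self.experts[name](latent, target)
            edited = [e + o for e, o in zip(edited, offset)]
        return edited


editor = DynamicEditor(["age", "yaw", "glasses"], dim=4)
latent = [0.5, -1.0, 0.25, 2.0]

# No attribute requested: no branch runs and the latent code is returned unchanged.
unchanged = editor.edit(latent, {"age": None, "yaw": None, "glasses": None})

# Two attributes requested together: only those two branches run.
edited = editor.edit(latent, {"age": 0.8, "yaw": None, "glasses": 1.0})
```

In this sketch all requested attributes are handled in one pass from the same input code, in contrast to the sequential editing the abstract identifies as a source of error accumulation. The training procedure (DmaCL) is not represented here.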


FFHQ
