Generative Models


Sparse Autoencoder

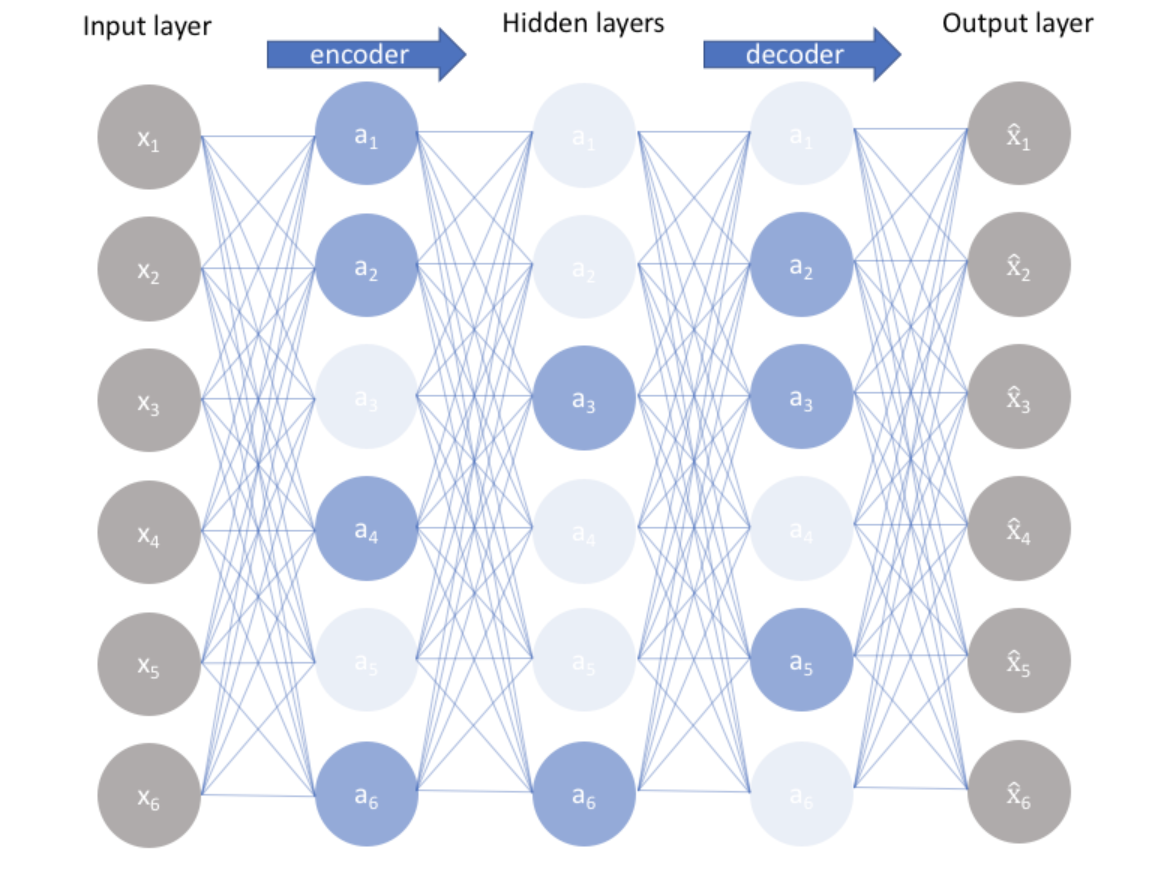

A Sparse Autoencoder is a type of autoencoder that uses sparsity to create an information bottleneck. Specifically, the loss function penalizes activations within a hidden layer, so that only a small number of units are strongly active for any given input. The sparsity constraint can be imposed with L1 regularization of the activations, or with a KL divergence between the expected (average) activation of each hidden neuron and an ideal target distribution $\rho$.

Image: Jeff Jordan. His blog post gives a detailed summary of autoencoders.
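The two sparsity penalties described above can be illustrated with a minimal NumPy sketch of the loss for a single-hidden-layer autoencoder. It assumes sigmoid hidden units, a linear decoder and squared-error reconstruction; the names `rho` (target average activation), `beta` (KL weight) and `lam` (L1 weight), as well as their default values, are illustrative choices rather than values from any particular paper. The KL variant treats each hidden unit $j$ as a Bernoulli variable and sums $\mathrm{KL}(\rho \,\|\, \hat{\rho}_j) = \rho \log \frac{\rho}{\hat{\rho}_j} + (1-\rho) \log \frac{1-\rho}{1-\hat{\rho}_j}$ over the hidden units, where $\hat{\rho}_j$ is the unit's mean activation over the batch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def kl_sparsity(rho, rho_hat, eps=1e-8):
    """Sum over hidden units of KL(Bernoulli(rho) || Bernoulli(rho_hat_j))."""
    # Clip to avoid log(0) when a unit is always off or always on.
    rho_hat = np.clip(rho_hat, eps, 1.0 - eps)
    return np.sum(rho * np.log(rho / rho_hat)
                  + (1.0 - rho) * np.log((1.0 - rho) / (1.0 - rho_hat)))


def sparse_ae_loss(X, W_enc, b_enc, W_dec, b_dec,
                   penalty="kl", rho=0.05, beta=3.0, lam=1e-3):
    """Return (total, reconstruction, sparsity) for one batch.

    Hypothetical setup: sigmoid encoder, linear decoder, squared error.
    """
    H = sigmoid(X @ W_enc + b_enc)   # hidden activations, shape (n, k)
    X_hat = H @ W_dec + b_dec        # reconstruction, shape (n, d)
    recon = 0.5 * np.mean(np.sum((X - X_hat) ** 2, axis=1))

    if penalty == "kl":
        rho_hat = H.mean(axis=0)     # average activation of each hidden unit
        sparsity = beta * kl_sparsity(rho, rho_hat)
    elif penalty == "l1":
        # L1 norm of each sample's activation vector, averaged over the batch.
        sparsity = lam * np.mean(np.sum(np.abs(H), axis=1))
    else:
        raise ValueError("penalty must be 'kl' or 'l1'")

    return recon + sparsity, recon, sparsity


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(100, 20))                   # toy data: 100 samples, 20 features
    W_enc = rng.normal(scale=0.1, size=(20, 8))      # 8 hidden units
    W_dec = rng.normal(scale=0.1, size=(8, 20))
    b_enc, b_dec = np.zeros(8), np.zeros(20)
    for p in ("kl", "l1"):
        total, recon, sp = sparse_ae_loss(X, W_enc, b_enc, W_dec, b_dec, penalty=p)
        print(f"{p}: total={total:.3f} recon={recon:.3f} sparsity={sp:.3f}")
```

With small random weights the sigmoid units sit near 0.5, far above the target `rho = 0.05`, so the KL term is large; minimizing the total loss pushes average activations toward `rho`. The L1 variant instead shrinks every activation toward zero without a specific target level.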


Tasks

| Task | Papers | Share |
|---|---|---|
| General Classification | 4 | 7.84% |
| Classification | 3 | 5.88% |
| Denoising | 3 | 5.88% |
| EEG | 2 | 3.92% |
| Electroencephalogram (EEG) | 2 | 3.92% |
| Dimensionality Reduction | 2 | 3.92% |
| Clustering | 2 | 3.92% |
| Small Data Image Classification | 2 | 3.92% |
| Data Compression | 1 | 1.96% |

L1 Regularization
