Regularization

General • 58 methods

Regularization strategies are designed to reduce the test error of a machine learning algorithm, possibly at the expense of a higher training error. Many different forms of regularization are used in deep learning. Below is a continuously updated list of regularization strategies.
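The trade-off described above can be illustrated with a minimal sketch, assuming a one-dimensional linear model without an intercept and an L2 penalty (the idea behind weight decay). The helper names `fit_1d` and `mse`, the synthetic data, and the penalty strength are illustrative assumptions, not part of any library or of the methods listed here.

```python
import random


def fit_1d(xs, ys, l2=0.0):
    """Closed-form minimizer of sum((w*x - y)^2) + l2 * w^2 for a scalar weight w."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + l2)


def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def make_data(rng, n, true_w=2.0, noise=1.0):
    """Hypothetical noisy samples from y = true_w * x."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [true_w * x + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


rng = random.Random(0)
train_x, train_y = make_data(rng, n=8)      # small, noisy training set
test_x, test_y = make_data(rng, n=1000)     # larger held-out set

w_plain = fit_1d(train_x, train_y)          # no regularization
w_reg = fit_1d(train_x, train_y, l2=1.0)    # L2-regularized fit

print("weights     :", w_plain, w_reg)
print("train error :", mse(w_plain, train_x, train_y), mse(w_reg, train_x, train_y))
print("test error  :", mse(w_plain, test_x, test_y), mse(w_reg, test_x, test_y))
```

In this setup the regularized weight is always shrunk toward zero and its training error can never be lower than that of the unregularized fit. Whether the test error improves depends on the data and on the penalty strength, which is why the strength is usually tuned on held-out data.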

Methods

| Method | Year | Papers |
|---|---|---|
| | 2014 | 19547 |
| | 1985 | 9693 |
| | 2018 | 7735 |
| | 1943 | 7700 |
| | 2016 | 786 |
| | 2018 | 467 |
| | 1995 | 378 |
| | 2016 | 327 |
| | 2019 | 169 |
| | 2015 | 133 |
| | 2018 | 130 |
| | 2018 | 121 |
| | 2018 | 89 |
| | 2013 | 78 |
| | 1986 | 70 |
| | 2015 | 58 |
| | 2020 | 57 |
| | 2000 | 54 |
| | 2017 | 49 |
| | 2017 | 49 |
| | 2014 | 27 |
| | 2016 | 27 |
| | 2018 | 26 |
| | 2018 | 23 |
| | 2016 | 22 |
| | 2016 | 20 |
| | 2022 | 17 |
| | 2016 | 15 |
| | 2017 | 14 |
| | 2020 | 13 |
| | 2019 | 12 |
| | 2019 | 10 |
| | 2021 | 10 |
| | 2019 | 9 |
| | 2013 | 7 |
| | 2017 | 5 |
| | 2018 | 4 |
| | 2022 | 4 |
| | 2018 | 3 |
| | 2020 | 3 |
| | 2016 | 3 |
| | 2021 | 3 |
| | 2020 | 3 |
| | 2019 | 3 |
| | 2016 | 2 |
| | 2019 | 2 |
| | 2017 | 2 |
| | 2023 | 2 |
| | 2017 | 2 |
| | 2020 | 1 |
| | 2021 | 1 |
| | 2017 | 1 |
| | 2023 | 1 |
| | 2021 | 1 |
| | 2000 | 0 |
| | 2015 | 0 |

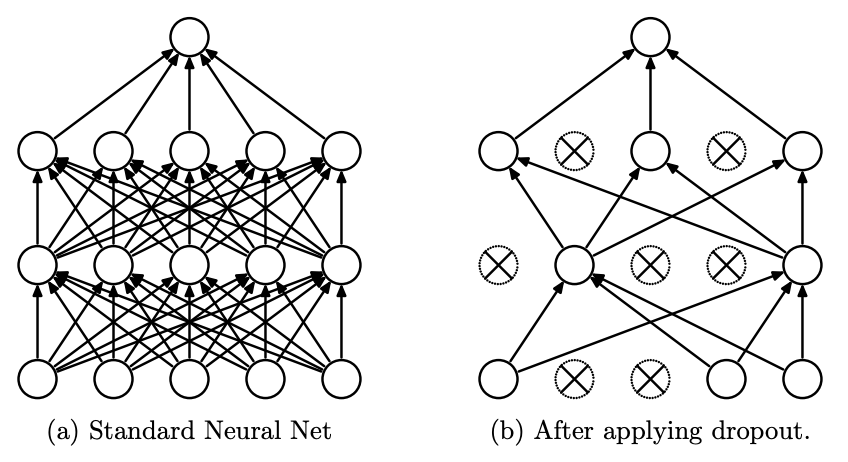

Dropout

Label Smoothing

Attention Dropout

Weight Decay

Entropy Regularization

R1 Regularization

Early Stopping

Stochastic Depth

Path Length Regularization

Variational Dropout

Off-Diagonal Orthogonal Regularization

DropBlock

Target Policy Smoothing

DropConnect

L1 Regularization

Embedding Dropout

ALS

PGM

Temporal Activation Regularization

Activation Regularization

SpatialDropout

Zoneout

Manifold Mixup

DropPath

Orthogonal Regularization

GAN Feature Matching

SCN

SRN

Auxiliary Batch Normalization

LayerScale

Euclidean Norm Regularization

Adaptive Dropout

Shake-Shake Regularization

ShakeDrop

GradDrop

Recurrent Dropout

GMVAE

Batch Nuclear-norm Maximization

LayerDrop

Discriminative Regularization

Fraternal Dropout

ScheduledDropPath

DropPathway

AutoDropout

Weights Reset

rnnDrop