Generative Models

InfoGAN

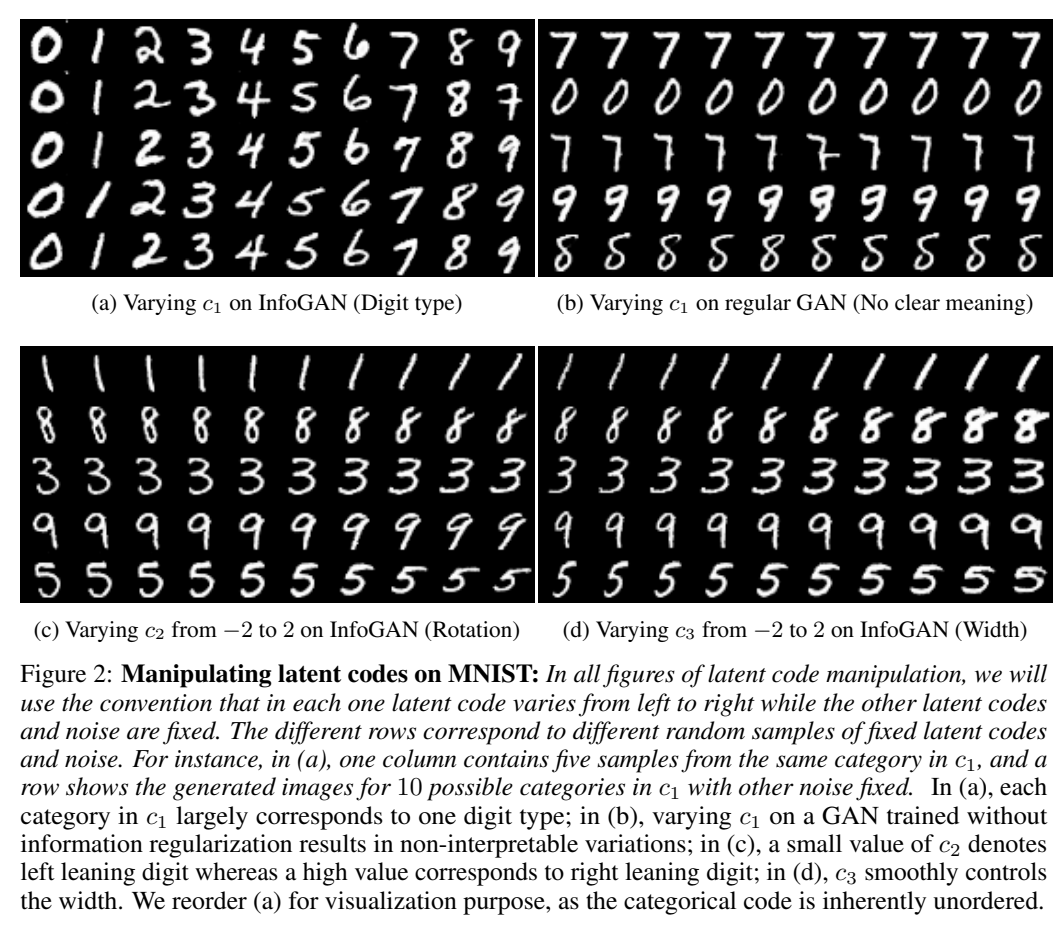

Introduced by Chen et al. in InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets

InfoGAN is a type of generative adversarial network that modifies the GAN objective so that the generator learns interpretable, meaningful representations. It does this by maximizing the mutual information between a small, fixed subset of the GAN's noise variables (the latent code) and the generated observations.

Formally, InfoGAN is defined as a minimax game with a variational regularization of mutual information and the hyperparameter $\lambda$:

$$ \min_{G, Q}\max_{D}V_{INFOGAN}\left(D, G, Q\right) = V\left(D, G\right) - \lambda{L}_{I}\left(G, Q\right) $$

Here $Q$ is an auxiliary distribution that approximates the posterior $P\left(c\mid{x}\right)$, the probability of the latent code $c$ given the data $x$, and $L_{I}$ is a variational lower bound on the mutual information between the latent code and the observations.
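As a rough illustration of the regularizer, the sketch below estimates the lower bound in the form given in the InfoGAN paper, $L_{I}\left(G, Q\right) = \mathbb{E}\left[\log Q\left(c\mid{x}\right)\right] + H\left(c\right)$, for a single categorical code with a uniform prior. The function names, the plain-list data layout, and the default `lam=1.0` are assumptions made for this example, not part of the original text; the GAN value $V(D, G)$ is treated as a number supplied from elsewhere.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]

def mutual_info_lower_bound(q_logits_batch, sampled_codes, num_categories):
    """Monte Carlo estimate of L_I = E[log Q(c|x)] + H(c).

    q_logits_batch: one list of Q logits per generated sample x = G(z, c)
    sampled_codes:  the category index c fed to the generator for each sample
    Assumes a uniform categorical prior over `num_categories` values.
    """
    log_q = [log_softmax(logits)[c]
             for logits, c in zip(q_logits_batch, sampled_codes)]
    expected_log_q = sum(log_q) / len(log_q)
    entropy_c = math.log(num_categories)  # H(c) for a uniform prior
    return expected_log_q + entropy_c

def infogan_value(gan_value, lower_bound, lam=1.0):
    """V_InfoGAN = V(D, G) - lambda * L_I(G, Q); lam=1.0 is illustrative."""
    return gan_value - lam * lower_bound

codes = [0, 3, 5, 9]

# A Q that ignores its input (all-zero logits) recovers no information.
uninformative = mutual_info_lower_bound([[0.0] * 10 for _ in codes], codes, 10)

# A Q that confidently recovers the code approaches the maximum, H(c) = log 10.
confident_logits = [[20.0 if k == c else 0.0 for k in range(10)] for c in codes]
confident = mutual_info_lower_bound(confident_logits, codes, 10)
```

Under these assumptions the bound ranges from about zero, when $Q$ cannot recover the code, up to $H(c)$, when it recovers the code almost perfectly, so minimizing $V_{INFOGAN}$ over $G$ and $Q$ pushes the generator to keep the code recoverable from its output.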

In the practical implementation, one additional fully-connected layer outputs the parameters of the conditional distribution $Q$, which adds negligible computation on top of a regular GAN structure. For a categorical latent code, $Q$ is represented with a softmax non-linearity. For a continuous latent code, the authors assume a factored Gaussian.
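The two parameterizations of $Q$ described above can be sketched in plain Python. This is a minimal sketch, not the authors' code: it assumes a feature vector is already available from the shared network, and the names `q_head`, `linear`, and `gaussian_log_prob`, the layer sizes, and the choice to predict a log standard deviation are all hypothetical.

```python
import math
import random

def linear(features, weights, bias):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * f for w, f in zip(row, features)) + b
            for row, b in zip(weights, bias)]

def softmax(logits):
    """Numerically stable softmax for the categorical code."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def gaussian_log_prob(code, mean, log_std):
    """Log-density of a factored (diagonal) Gaussian, summed over dimensions."""
    total = 0.0
    for c, mu, ls in zip(code, mean, log_std):
        z = (c - mu) / math.exp(ls)
        total += -0.5 * math.log(2 * math.pi) - ls - 0.5 * z * z
    return total

def q_head(features, weights, bias, num_categories, num_continuous):
    """Split one linear layer's output into the parameters of Q."""
    out = linear(features, weights, bias)
    probs = softmax(out[:num_categories])            # categorical code
    mean = out[num_categories:num_categories + num_continuous]
    log_std = out[num_categories + num_continuous:]  # continuous codes
    return probs, mean, log_std

# Illustrative sizes: 8 shared features, 10 categories, 2 continuous codes.
rng = random.Random(0)
feature_dim, num_categories, num_continuous = 8, 10, 2
out_dim = num_categories + 2 * num_continuous
weights = [[rng.gauss(0, 0.1) for _ in range(feature_dim)] for _ in range(out_dim)]
bias = [0.0] * out_dim
features = [rng.gauss(0, 1) for _ in range(feature_dim)]

probs, mean, log_std = q_head(features, weights, bias, num_categories, num_continuous)
log_q_continuous = gaussian_log_prob([0.3, -0.7], mean, log_std)
```

The log-probabilities produced this way, `math.log(probs[c])` for the categorical code and `log_q_continuous` for the continuous codes, are the $\log Q\left(c\mid{x}\right)$ terms that enter the lower bound $L_{I}$.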

Source: InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets

Tasks

| Task | Papers | Share |
|---|---|---|
| Disentanglement | 8 | 18.60% |
| Image Generation | 5 | 11.63% |
| General Classification | 3 | 6.98% |
| Image Classification | 2 | 4.65% |
| Unsupervised Image Classification | 2 | 4.65% |
| Translation | 2 | 4.65% |
| Medical Image Generation | 1 | 2.33% |
| Clustering | 1 | 2.33% |
| Dimensionality Reduction | 1 | 2.33% |

Components

| Component | Type |
|---|---|
| Feedforward Network | Feedforward Networks |
| Leaky ReLU | Activation Functions |
| ReLU | Activation Functions |
| Softmax | Output Functions |