Search Results for author: Shikhar Tuli

Found 11 papers, 5 papers with code

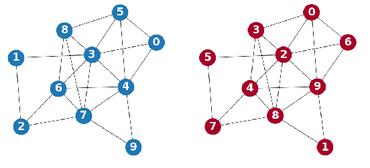

BREATHE: Second-Order Gradients and Heteroscedastic Emulation based Design Space Exploration

no code implementations • 16 Aug 2023 • Shikhar Tuli, Niraj K. Jha

In graph-based search, BREATHE outperforms the next-best baseline, i.e., a graphical version of Gaussian-process-based Bayesian optimization, with up to 64.9% higher performance.

TransCODE: Co-design of Transformers and Accelerators for Efficient Training and Inference

no code implementations • 27 Mar 2023 • Shikhar Tuli, Niraj K. Jha

To effectively execute this method on hardware for a diverse set of transformer architectures, we propose ELECTOR, a framework that simulates transformer inference and training on a design space of accelerators.

EdgeTran: Co-designing Transformers for Efficient Inference on Mobile Edge Platforms

no code implementations • 24 Mar 2023 • Shikhar Tuli, Niraj K. Jha

In this work, we propose a framework, called ProTran, to profile the hardware performance measures for a design space of transformer architectures and a diverse set of edge devices.

AccelTran: A Sparsity-Aware Accelerator for Dynamic Inference with Transformers

1 code implementation • 28 Feb 2023 • Shikhar Tuli, Niraj K. Jha

On the other hand, AccelTran-Server achieves 5.73$\times$ higher throughput and 3.69$\times$ lower energy consumption compared to the state-of-the-art transformer co-processor, Energon.

CODEBench: A Neural Architecture and Hardware Accelerator Co-Design Framework

2 code implementations • 7 Dec 2022 • Shikhar Tuli, Chia-Hao Li, Ritvik Sharma, Niraj K. Jha

AccelBench performs cycle-accurate simulations for a diverse set of accelerator architectures in a vast design space.

FlexiBERT: Are Current Transformer Architectures too Homogeneous and Rigid?

no code implementations • 23 May 2022 • Shikhar Tuli, Bhishma Dedhia, Shreshth Tuli, Niraj K. Jha

We also propose a novel NAS policy, called BOSHNAS, that leverages this new scheme, Bayesian modeling, and second-order optimization, to quickly train and use a neural surrogate model to converge to the optimal architecture.

Generative Optimization Networks for Memory Efficient Data Generation

no code implementations • 6 Oct 2021 • Shreshth Tuli, Shikhar Tuli, Giuliano Casale, Nicholas R. Jennings

In standard generative deep learning models, such as autoencoders or GANs, the size of the parameter set is proportional to the complexity of the generated data distribution.

Are Convolutional Neural Networks or Transformers more like human vision?

1 code implementation • 15 May 2021 • Shikhar Tuli, Ishita Dasgupta, Erin Grant, Thomas L. Griffiths

Our focus is on comparing a suite of standard Convolutional Neural Networks (CNNs) and a recently-proposed attention-based network, the Vision Transformer (ViT), which relaxes the translation-invariance constraint of CNNs and therefore represents a model with a weaker set of inductive biases.

AVAC: A Machine Learning based Adaptive RRAM Variability-Aware Controller for Edge Devices

no code implementations • 6 May 2020 • Shikhar Tuli, Shreshth Tuli

Recently, the Edge Computing paradigm has gained significant popularity both in industry and academia.

APEX: Adaptive Ext4 File System for Enhanced Data Recoverability in Edge Devices

2 code implementations • 3 Oct 2019 • Shreshth Tuli, Shikhar Tuli, Udit Jain, Rajkumar Buyya

We demonstrate the effectiveness of APEX through a case study of overwriting surveillance videos by CryPy malware on a Raspberry Pi-based Edge deployment and show 678% and 32% higher recovery than Ext4 and current state-of-the-art File Systems.

Operating Systems

FogBus: A Blockchain-based Lightweight Framework for Edge and Fog Computing

2 code implementations • 29 Nov 2018 • Shreshth Tuli, Redowan Mahmud, Shikhar Tuli, Rajkumar Buyya

The requirement of supporting both latency sensitive and computing intensive Internet of Things (IoT) applications is consistently boosting the necessity for integrating Edge, Fog and Cloud infrastructure.

Distributed, Parallel, and Cluster Computing