Search Results for author: Shant Navasardyan

Found 11 papers, 8 papers with code

StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text

1 code implementation • 21 Mar 2024 • Roberto Henschel, Levon Khachatryan, Daniil Hayrapetyan, Hayk Poghosyan, Vahram Tadevosyan, Zhangyang Wang, Shant Navasardyan, Humphrey Shi

To overcome these limitations, we introduce StreamingT2V, an autoregressive approach for long video generation of 80, 240, 600, 1200 or more frames with smooth transitions.

HD-Painter: High-Resolution and Prompt-Faithful Text-Guided Image Inpainting with Diffusion Models

1 code implementation • 21 Dec 2023 • Hayk Manukyan, Andranik Sargsyan, Barsegh Atanyan, Zhangyang Wang, Shant Navasardyan, Humphrey Shi

Recent progress in text-guided image inpainting, based on the unprecedented success of text-to-image diffusion models, has led to exceptionally realistic and visually plausible results.

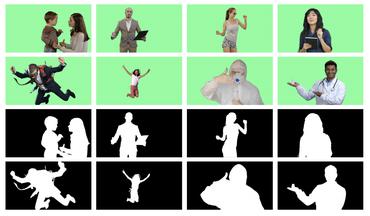

Video Instance Matting

1 code implementation • 7 Nov 2023 • Jiachen Li, Roberto Henschel, Vidit Goel, Marianna Ohanyan, Shant Navasardyan, Humphrey Shi

To remedy this deficiency, we propose Video Instance Matting (VIM), that is, estimating alpha mattes of each instance at each frame of a video sequence.

Multi-Concept T2I-Zero: Tweaking Only The Text Embeddings and Nothing Else

no code implementations • 11 Oct 2023 • Hazarapet Tunanyan, Dejia Xu, Shant Navasardyan, Zhangyang Wang, Humphrey Shi

To achieve this goal, we identify the limitations in the text embeddings used for the pre-trained text-to-image diffusion models.

Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators

1 code implementation • ICCV 2023 • Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, Zhangyang Wang, Shant Navasardyan, Humphrey Shi

Recent text-to-video generation approaches rely on computationally heavy training and require large-scale video datasets.

Specialist Diffusion: Plug-and-Play Sample-Efficient Fine-Tuning of Text-to-Image Diffusion Models To Learn Any Unseen Style

no code implementations • CVPR 2023 • Haoming Lu, Hazarapet Tunanyan, Kai Wang, Shant Navasardyan, Zhangyang Wang, Humphrey Shi

Diffusion models have demonstrated impressive capability of text-conditioned image synthesis, and broader application horizons are emerging by personalizing those pretrained diffusion models toward generating some specialized target object or style.

MI-GAN: A Simple Baseline for Image Inpainting on Mobile Devices

1 code implementation • ICCV 2023 • Andranik Sargsyan, Shant Navasardyan, Xingqian Xu, Humphrey Shi

In this paper we present a simple image inpainting baseline, Mobile Inpainting GAN (MI-GAN), which is approximately one order of magnitude computationally cheaper and smaller than existing state-of-the-art inpainting models, and can be efficiently deployed on mobile devices.

Mask Matching Transformer for Few-Shot Segmentation

1 code implementation • 5 Dec 2022 • Siyu Jiao, Gengwei Zhang, Shant Navasardyan, Ling Chen, Yao Zhao, Yunchao Wei, Humphrey Shi

Typical methods follow the paradigm of first learning prototypical features from support images and then matching query features at the pixel level to obtain segmentation results.

Image Completion with Heterogeneously Filtered Spectral Hints

1 code implementation • 7 Nov 2022 • Xingqian Xu, Shant Navasardyan, Vahram Tadevosyan, Andranik Sargsyan, Yadong Mu, Humphrey Shi

We also prove the effectiveness of our design via ablation studies, from which one may notice that the aforementioned challenges, i.e., pattern unawareness, blurry textures, and structure distortion, can be noticeably resolved.

Ranked #1 on Image Inpainting on FFHQ 512 x 512

VMFormer: End-to-End Video Matting with Transformer

1 code implementation • 26 Aug 2022 • Jiachen Li, Vidit Goel, Marianna Ohanyan, Shant Navasardyan, Yunchao Wei, Humphrey Shi

In this paper, we propose VMFormer: a transformer-based end-to-end method for video matting.

Image Inpainting with Onion Convolutions

no code implementations • 4 Dec 2020 • Shant Navasardyan, Marianna Ohanyan

The concept of onion convolution is introduced with the purpose of preserving feature continuities and semantic coherence.