Search Results for author: Qing Chang

Found 7 papers, 5 papers with code

UltraLight VM-UNet: Parallel Vision Mamba Significantly Reduces Parameters for Skin Lesion Segmentation

1 code implementation • 29 Mar 2024 • Renkai Wu, Yinghao Liu, Pengchen Liang, Qing Chang

In this paper, we explore in depth the key factors that influence the parameter count of Mamba and, based on this analysis, propose an UltraLight Vision Mamba UNet (UltraLight VM-UNet).
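The snippet does not spell out the mechanism. Still, plain parameter arithmetic gives a rough intuition for why routing channels through parallel branches can shrink a model. The sketch below is for illustration only and rests on two assumptions: C channels are split into k equal groups that all reuse one shared block, and a single dense projection stands in for that block. It is not the paper's actual layer, and the function names are hypothetical.

```python
def linear_params(d_in: int, d_out: int, bias: bool = True) -> int:
    """Weights plus optional bias of a dense d_in -> d_out projection."""
    return d_in * d_out + (d_out if bias else 0)


def single_branch_params(channels: int) -> int:
    """Assumed baseline: one projection applied to all channels at once."""
    return linear_params(channels, channels)


def shared_parallel_params(channels: int, groups: int) -> int:
    """Assumed parallel design: channels are split into `groups` equal chunks and
    every chunk reuses one shared projection, so only a single
    (channels / groups)-wide projection is stored."""
    if channels % groups != 0:
        raise ValueError("channels must be divisible by groups")
    width = channels // groups
    return linear_params(width, width)


if __name__ == "__main__":
    c, k = 64, 4
    full = single_branch_params(c)
    shared = shared_parallel_params(c, k)
    print(full, shared, round(full / shared, 1))
```

A dense projection's weight count grows with the square of its width. Sharing one C/k-wide projection therefore stores roughly k² fewer weights: about 15x fewer for C = 64 and k = 4 once biases are counted.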

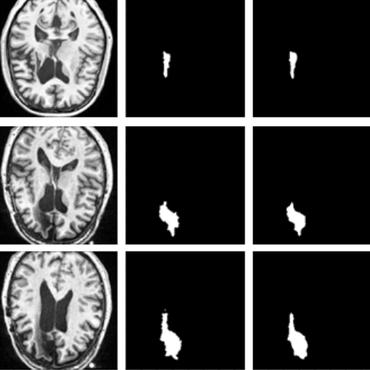

H-vmunet: High-order Vision Mamba UNet for Medical Image Segmentation

1 code implementation • 20 Mar 2024 • Renkai Wu, Yinghao Liu, Pengchen Liang, Qing Chang

In the field of medical image segmentation, variant models built on Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs) as base modules have been widely developed and applied.

Only Positive Cases: 5-fold High-order Attention Interaction Model for Skin Segmentation Derived Classification

1 code implementation • 27 Nov 2023 • Renkai Wu, Yinghao Liu, Pengchen Liang, Qing Chang

In this paper, we propose a multiple high-order attention interaction model (MHA-UNet) for use in a highly explainable skin lesion segmentation task.

Generalized Kernel Regularized Least Squares

1 code implementation • 28 Sep 2022 • Qing Chang, Max Goplerud

We note that kernel regularized least squares (KRLS) can be reformulated as a hierarchical model. This allows easy inference and modular model construction, in which KRLS can be used alongside random effects, splines, and unregularized fixed effects.
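Standard KRLS (not the generalized version described above) fits y with a Gaussian kernel matrix K and coefficients c that minimise ||y - Kc||^2 + lam * c'Kc. The solution is c = (K + lam*I)^-1 y, and the c'Kc penalty is what lets the fit be read as a prior on c. The sketch below implements only that baseline in pure Python. It does not cover the hierarchical reformulation, random effects, or splines. The names `fit_krls` and `predict`, the default bandwidth (number of covariates), and the toy data are assumptions for illustration.

```python
import math


def gaussian_kernel(a, b, bandwidth):
    """k(a, b) = exp(-||a - b||^2 / bandwidth)."""
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq / bandwidth)


def solve(A, rhs):
    """Solve A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for j in range(col, n + 1):
                M[r][j] -= factor * M[col][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][j] * x[j] for j in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_krls(X, y, lam=0.1, bandwidth=None):
    """Return (c, bandwidth) with c = (K + lam*I)^-1 y, the minimiser of
    ||y - Kc||^2 + lam * c'Kc for a Gaussian kernel matrix K."""
    if bandwidth is None:
        bandwidth = float(len(X[0]))  # assumed default: number of covariates
    n = len(X)
    K = [[gaussian_kernel(X[i], X[j], bandwidth) for j in range(n)] for i in range(n)]
    A = [[K[i][j] + (lam if i == j else 0.0) for j in range(n)] for i in range(n)]
    return solve(A, y), bandwidth


def predict(X_train, c, bandwidth, x_new):
    """Fitted value at x_new: sum_i c_i * k(x_new, x_i)."""
    return sum(ci * gaussian_kernel(x_new, xi, bandwidth) for ci, xi in zip(c, X_train))


if __name__ == "__main__":
    X = [[0.0], [0.5], [1.0], [1.5], [2.0]]
    y = [math.sin(x[0]) for x in X]
    c, bw = fit_krls(X, y, lam=1e-6)
    print([round(predict(X, c, bw, x), 3) for x in X])
```

The penalty lam controls shrinkage. A tiny lam nearly interpolates the training points, while a large lam pulls the fitted values toward zero.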

Reinforcement Learning Based User-Guided Motion Planning for Human-Robot Collaboration

no code implementations • 1 Jul 2022 • Tian Yu, Qing Chang

It is therefore highly desirable to have a flexible motion planning method with which robots can adapt to specific task changes in unstructured environments, such as production systems or warehouses, with little or no intervention from non-expert personnel.

DATA: Domain-Aware and Task-Aware Self-supervised Learning

1 code implementation • CVPR 2022 • Qing Chang, Junran Peng, Lingxie Xie, Jiajun Sun, Haoran Yin, Qi Tian, Zhaoxiang Zhang

However, because of high training costs and a lack of awareness of downstream use, most self-supervised learning methods cannot accommodate the diversity of downstream scenarios, which span various data domains, different vision tasks, and latency constraints on models.

A Novel Deep Arrhythmia-Diagnosis Network for Atrial Fibrillation Classification Using Electrocardiogram Signals

no code implementations • IEEE Access 2019 • Hao Dang, Muyi Sun, Guanhong Zhang, Xingqun Qi, Xiaoguang Zhou, Qing Chang

Atrial fibrillation (AF), a common abnormal heart rhythm, is a life-threatening recurrent disease that affects older adults.

Ranked #4 on Atrial Fibrillation Detection on MIT-BIH AF