Image-to-Image Translation

490 papers with code • 37 benchmarks • 29 datasets

Image-to-Image Translation is a task in computer vision and machine learning where the goal is to learn a mapping from an input image to a corresponding output image. Typical applications include style transfer, data augmentation, and image restoration.

(Image credit: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks)


Most implemented papers

Learning to Adapt Structured Output Space for Semantic Segmentation

In this paper, we propose an adversarial learning method for domain adaptation in the context of semantic segmentation.

Joint Discriminative and Generative Learning for Person Re-identification

We propose a joint learning framework that couples re-id learning and data generation end-to-end.

Taming Transformers for High-Resolution Image Synthesis

We demonstrate how combining the effectiveness of the inductive bias of CNNs with the expressivity of transformers enables them to model and thereby synthesize high-resolution images.

Multimodal Token Fusion for Vision Transformers

Many adaptations of transformers have emerged to address single-modal vision tasks, where self-attention modules are stacked to handle input sources like images.

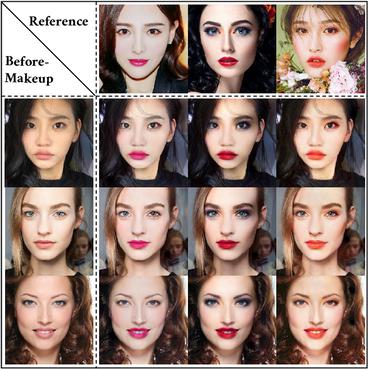

Few-Shot Unsupervised Image-to-Image Translation

Unsupervised image-to-image translation methods learn to map images in a given class to an analogous image in a different class, drawing on unstructured (non-registered) datasets of images.

Contrastive Learning for Unpaired Image-to-Image Translation

In this contrastive setup, we draw negative samples (patches) from within the input image itself, rather than from the rest of the dataset.
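Drawing negatives from the same input image can be sketched as a patch-wise InfoNCE-style loss. This is a minimal pure-Python illustration, assuming patch features have already been extracted: the translated patch at location i should match the input patch at the same location (the positive), while input patches at other locations of that same image act as negatives. The function names, the cosine-similarity scoring, and the temperature of 0.07 are assumptions of this sketch, not the paper's reference code.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def patch_nce_loss(output_feats: List[Vector], input_feats: List[Vector], tau: float = 0.07) -> float:
    """Hypothetical patch-wise contrastive loss (illustrative sketch only).

    output_feats[i] and input_feats[i] are features of the patch at the same
    spatial location i in the translated and input images. For query i the
    positive is input_feats[i]; every input_feats[j] with j != i, i.e. another
    patch of the SAME input image, is a negative.
    """
    n = len(output_feats)
    total = 0.0
    for i in range(n):
        # Similarity of query i to every patch of the input image, scaled by the temperature.
        logits = [cosine(output_feats[i], input_feats[j]) / tau for j in range(n)]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the same-location patch as the correct "class".
        total += log_sum_exp - logits[i]
    return total / n


keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
print(patch_nce_loss(keys, keys))  # close to 0: each output patch matches its own location
print(patch_nce_loss([[0.0, 1.0], [-1.0, 0.0], [1.0, 0.0]], keys))  # large: patches are misaligned
```

Because the negatives come from the same image, the loss pushes each output patch to stay tied to its own input location rather than merely resembling the input image as a whole.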

Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

We present a generic image-to-image translation framework, pixel2style2pixel (pSp).

Adversarially Learned Inference

We introduce the adversarially learned inference (ALI) model, which jointly learns a generation network and an inference network using an adversarial process.
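The adversarial process can be summarized as a minimax game in which the discriminator D scores joint pairs: a real image paired with the latent code the inference network assigns to it, versus a generated image paired with the latent code it was generated from. The notation here (q(x) for the data distribution, p(z) for the prior, G_z for the inference network, G_x for the generation network) follows common presentations of ALI and is given as a summary rather than a verbatim reproduction of the paper.

$$
\min_{G}\max_{D} V(D, G) = \mathbb{E}_{q(\mathbf{x})}\left[\log D\left(\mathbf{x}, G_z(\mathbf{x})\right)\right] + \mathbb{E}_{p(\mathbf{z})}\left[\log\left(1 - D\left(G_x(\mathbf{z}), \mathbf{z}\right)\right)\right]
$$

The discriminator is trained to tell the two kinds of pairs apart, while the inference and generation networks are trained jointly to make them indistinguishable.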

Learning from Simulated and Unsupervised Images through Adversarial Training

With recent progress in graphics, it has become more tractable to train models on synthetic images, potentially avoiding the need for expensive annotations.

Unsupervised Image-to-Image Translation Networks

Unsupervised image-to-image translation aims at learning a joint distribution of images in different domains by using images from the marginal distributions in individual domains.
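The UNIT paper approaches this with a shared-latent-space assumption: corresponding images from the two domains map to the same latent code, so translating from domain 1 to domain 2 amounts to encoding with the domain-1 encoder and decoding with the domain-2 decoder. The sketch below illustrates only that composition, using hypothetical hand-written affine encoders and decoders on toy vectors; in the paper these are learned networks trained with adversarial and VAE-style objectives, which are not shown here.

```python
from typing import List

Vector = List[float]

# Hypothetical toy domains sharing a latent code z:
#   domain-1 "images" are x1 = 2*z + 1, domain-2 "images" are x2 = 3 - z.
# In UNIT the encoders/decoders are learned networks; here they are fixed affine maps.


def encode_1(x1: Vector) -> Vector:
    return [(v - 1.0) / 2.0 for v in x1]


def decode_1(z: Vector) -> Vector:
    return [2.0 * v + 1.0 for v in z]


def encode_2(x2: Vector) -> Vector:
    return [3.0 - v for v in x2]


def decode_2(z: Vector) -> Vector:
    return [3.0 - v for v in z]


def translate_1_to_2(x1: Vector) -> Vector:
    # Encode into the shared latent space, then decode with the other domain's decoder.
    return decode_2(encode_1(x1))


def translate_2_to_1(x2: Vector) -> Vector:
    return decode_1(encode_2(x2))


z = [0.0, 0.5, 1.0]
x1, x2 = decode_1(z), decode_2(z)  # a "corresponding" pair generated from the same z
print(translate_1_to_2(x1) == x2)  # True
print(translate_2_to_1(translate_1_to_2(x1)) == x1)  # True: the round trip returns to domain 1
```

The point of the design is that no paired (x1, x2) examples are needed: each domain only has to agree on the shared latent space.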

Datasets

Cityscapes

KITTI

ADE20K

CelebA-HQ

SYNTHIA

GTA5

DeepFashion

Perceptual Similarity

AFHQ

COCO-Stuff