Image-to-Image Translation

490 papers with code • 37 benchmarks • 29 datasets

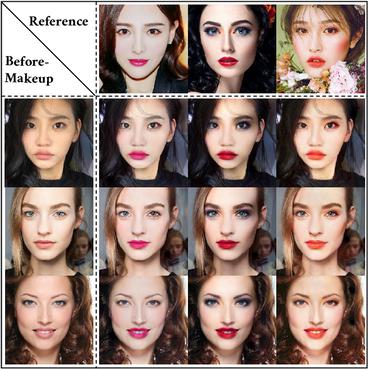

Image-to-Image Translation is a computer vision and machine learning task in which the goal is to learn a mapping from an input image to an output image. Typical applications include style transfer, data augmentation, and image restoration.

(Image credit: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks)
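To make the idea of "learning a mapping between an input image and an output image" concrete, here is a deliberately tiny sketch. It is not how the papers listed on this page work; practical systems typically learn the mapping with deep networks. It assumes paired grayscale images stored as nested lists and fits a single global intensity map y ≈ a·x + b by least squares. The function names `fit_affine_mapping` and `translate` are hypothetical and chosen only for illustration.

```python
# Toy paired image-to-image translation (illustrative assumption, not a real method
# from the listed papers): learn one global intensity map y = a * x + b.
# Images are nested lists of grayscale values in [0, 1].

def fit_affine_mapping(inputs, targets):
    """Least-squares fit of a and b over all corresponding pixel pairs."""
    xs = [p for img in inputs for row in img for p in row]
    ys = [p for img in targets for row in img for p in row]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x          # slope of the intensity map
    b = mean_y - a * mean_x     # offset of the intensity map
    return a, b

def translate(image, a, b):
    """Apply the learned map to every pixel of a new image."""
    return [[a * p + b for p in row] for row in image]

# Paired training data: each target is the photographic negative of its input.
train_inputs = [[[0.0, 0.25], [0.5, 1.0]], [[0.1, 0.9], [0.3, 0.7]]]
train_targets = [[[1.0 - p for p in row] for row in img] for img in train_inputs]

a, b = fit_affine_mapping(train_inputs, train_targets)
output = translate([[0.2, 0.8]], a, b)
print(a, b, output)
```

The fitted parameters recover the inversion used to build the targets, so an unseen image is translated to its negative. A learned network plays the role of `translate` in real models, with far more parameters than the two used here.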

Latest papers with no code

Optical Image-to-Image Translation Using Denoising Diffusion Models: Heterogeneous Change Detection as a Use Case

We introduce an innovative deep learning-based method that uses a denoising diffusion-based model to translate low-resolution images to high-resolution ones from different optical sensors while preserving the contents and avoiding undesired artifacts.

Exploring selective image matching methods for zero-shot and few-sample unsupervised domain adaptation of urban canopy prediction

We explore simple methods for adapting a trained multi-task UNet, which predicts canopy cover and height, to a new geographic setting using remotely sensed data, without the need to train a domain-adaptive classifier or perform extensive fine-tuning.

SyntStereo2Real: Edge-Aware GAN for Remote Sensing Image-to-Image Translation while Maintaining Stereo Constraint

The use of synthetically generated images as an alternative alleviates this problem but suffers from the problem of domain generalization.

DiffHarmony: Latent Diffusion Model Meets Image Harmonization

To deal with these issues, in this paper, we first adapt a pre-trained latent diffusion model to the image harmonization task to generate the harmonious but potentially blurry initial images.

MoMA: Multimodal LLM Adapter for Fast Personalized Image Generation

This approach effectively synergizes reference image and text prompt information to produce valuable image features, facilitating an image diffusion model.

Dynamic Conditional Optimal Transport through Simulation-Free Flows

We study the geometry of conditional optimal transport (COT) and prove a dynamical formulation which generalizes the Benamou-Brenier Theorem.

Translation-based Video-to-Video Synthesis

Translation-based Video Synthesis (TVS) has emerged as a vital research area in computer vision, aiming to facilitate the transformation of videos between distinct domains while preserving both temporal continuity and underlying content features.

Diffusion based Zero-shot Medical Image-to-Image Translation for Cross Modality Segmentation

To leverage generative learning for zero-shot cross-modality image segmentation, we propose a novel unsupervised image translation method.

GAN with Skip Patch Discriminator for Biological Electron Microscopy Image Generation

Generating realistic electron microscopy (EM) images has been a challenging problem due to their complex global and local structures.

StegoGAN: Leveraging Steganography for Non-Bijective Image-to-Image Translation

Most image-to-image translation models postulate that a unique correspondence exists between the semantic classes of the source and target domains.

Datasets

Cityscapes

KITTI

ADE20K

CelebA-HQ

SYNTHIA

GTA5

DeepFashion

Perceptual Similarity

AFHQ

COCO-Stuff