YOLOV: Making Still Image Object Detectors Great at Video Object Detection

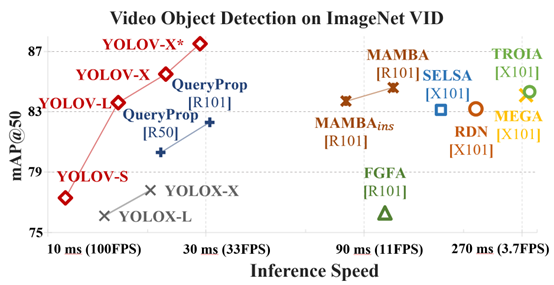

Video object detection (VID) is challenging because of the high variation of object appearance as well as the diverse deterioration in some frames. On the positive side, the detection in a certain frame of a video, compared with that in a still image, can draw support from other frames. Hence, how to aggregate features across different frames is pivotal to VID problem. Most of existing aggregation algorithms are customized for two-stage detectors. However, these detectors are usually computationally expensive due to their two-stage nature. This work proposes a simple yet effective strategy to address the above concerns, which costs marginal overheads with significant gains in accuracy. Concretely, different from traditional two-stage pipeline, we select important regions after the one-stage detection to avoid processing massive low-quality candidates. Besides, we evaluate the relationship between a target frame and reference frames to guide the aggregation. We conduct extensive experiments and ablation studies to verify the efficacy of our design, and reveal its superiority over other state-of-the-art VID approaches in both effectiveness and efficiency. Our YOLOX-based model can achieve promising performance (\emph{e.g.}, 87.5\% AP50 at over 30 FPS on the ImageNet VID dataset on a single 2080Ti GPU), making it attractive for large-scale or real-time applications. The implementation is simple, we have made the demo codes and models available at \url{https://github.com/YuHengsss/YOLOV}.

PDF Abstract

ssd

ssd