When, Where, and What? A New Dataset for Anomaly Detection in Driving Videos

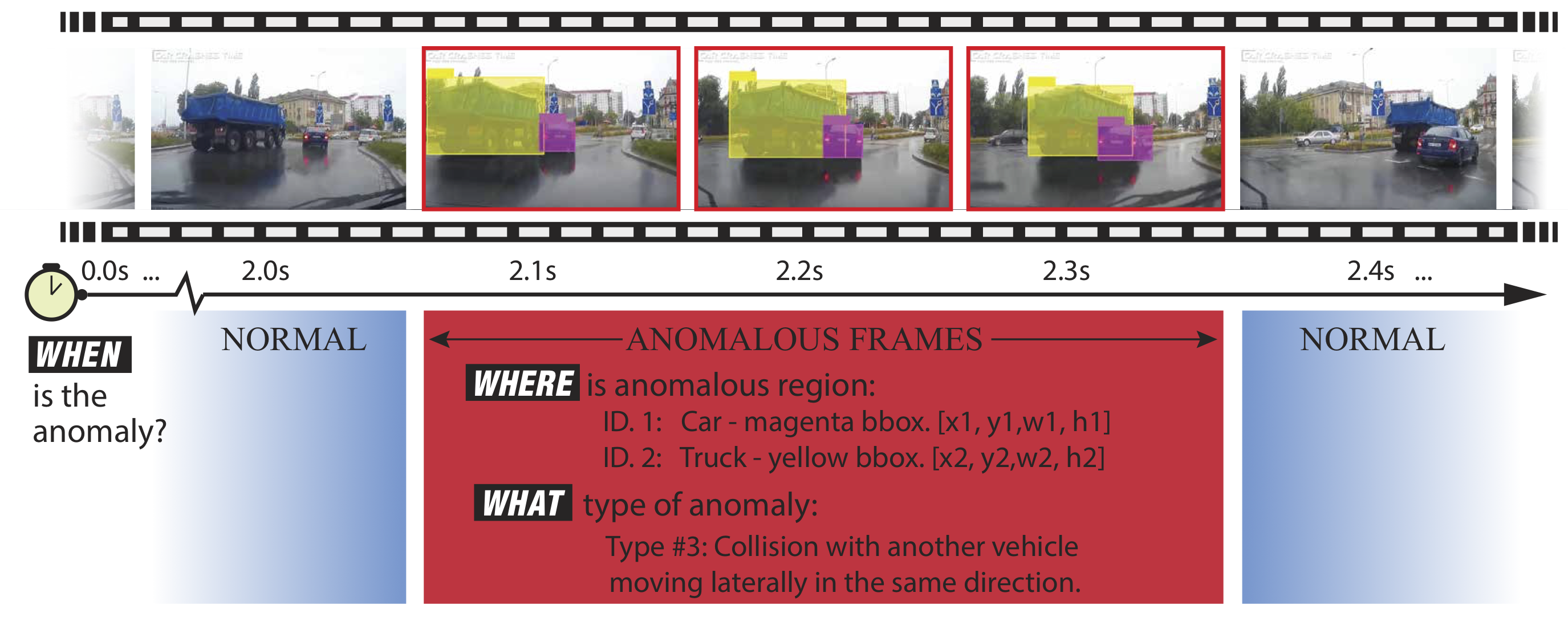

Video anomaly detection (VAD) has been extensively studied. However, research on egocentric traffic videos with dynamic scenes lacks large-scale benchmark datasets as well as effective evaluation metrics. This paper proposes traffic anomaly detection with a *when-where-what* pipeline to detect, localize, and recognize anomalous events from egocentric videos. We introduce a new dataset called Detection of Traffic Anomaly (DoTA) containing 4,677 videos with temporal, spatial, and categorical annotations. A new spatial-temporal area under curve (STAUC) evaluation metric is proposed and used with DoTA. State-of-the-art methods are benchmarked for two VAD-related tasks. Experimental results show that STAUC is an effective VAD metric. To our knowledge, DoTA is the largest traffic anomaly dataset to date and is the first to support traffic anomaly studies across the when-where-what perspectives. Our code and dataset are available at https://github.com/MoonBlvd/Detection-of-Traffic-Anomaly

Datasets

Introduced in the Paper:

Detection of Traffic Anomaly

Used in the Paper:

Kinetics

ShanghaiTech Campus

UCF-Crime

A3D
