"What's This?" -- Learning to Segment Unknown Objects from Manipulation Sequences

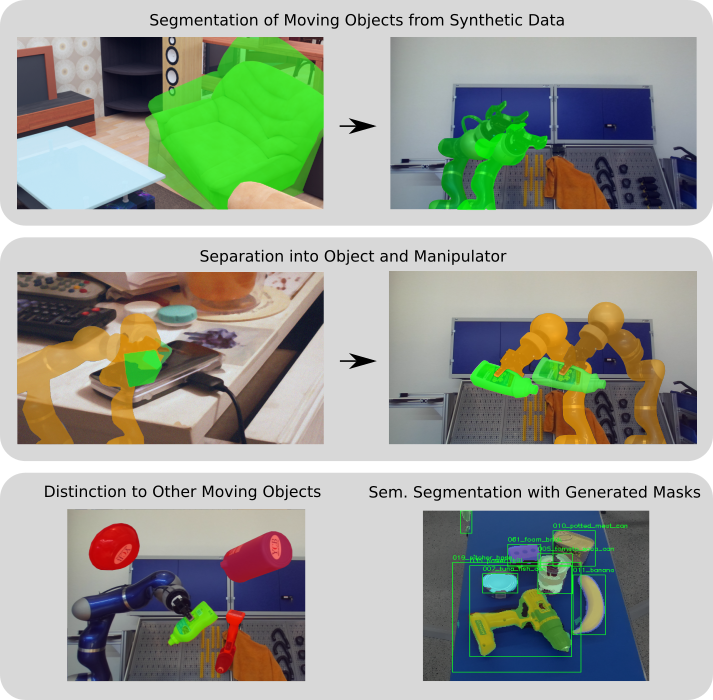

We present a novel framework for self-supervised grasped object segmentation with a robotic manipulator. Our method successively learns an agnostic foreground segmentation followed by a distinction between manipulator and object solely by observing the motion between consecutive RGB frames. In contrast to previous approaches, we propose a single, end-to-end trainable architecture which jointly incorporates motion cues and semantic knowledge. Furthermore, while the motion of the manipulator and the object are substantial cues for our algorithm, we present means to robustly deal with distractor objects moving in the background, as well as with completely static scenes. Our method depends neither on visual registration of a kinematic robot or 3D object models, nor on precise hand-eye calibration or any additional sensor data. Through extensive experimental evaluation, we demonstrate the superiority of our framework and provide detailed insights into its capability of dealing with the aforementioned extreme cases of motion. We also show that training a semantic segmentation network with the automatically labeled data achieves results on par with manually annotated training data. Code and a pretrained model are available at https://github.com/DLR-RM/DistinctNet.

MS COCO
