What Makes A Good Story? Designing Composite Rewards for Visual Storytelling

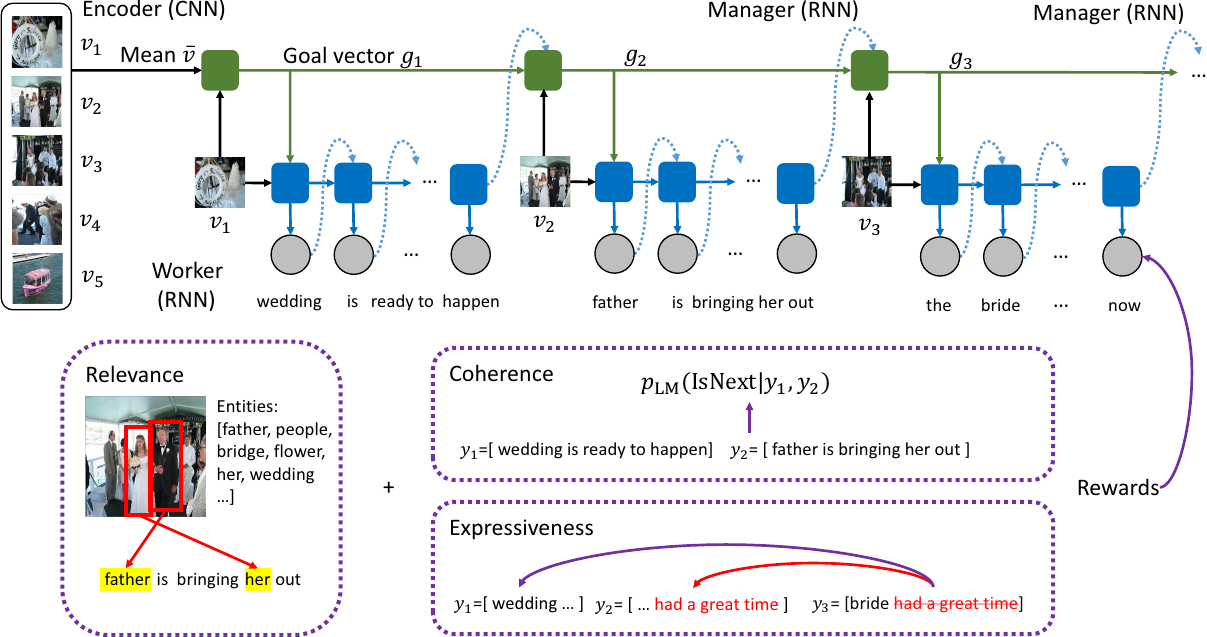

Previous storytelling approaches mostly focused on optimizing traditional metrics such as BLEU, ROUGE and CIDEr. In this paper, we re-examine this problem from a different angle, by looking deep into what defines a realistically-natural and topically-coherent story. To this end, we propose three assessment criteria: relevance, coherence and expressiveness, which we observe through empirical analysis could constitute a "high-quality" story to the human eye. Following this quality guideline, we propose a reinforcement learning framework, ReCo-RL, with reward functions designed to capture the essence of these quality criteria. Experiments on the Visual Storytelling Dataset (VIST) with both automatic and human evaluations demonstrate that our ReCo-RL model achieves better performance than state-of-the-art baselines on both traditional metrics and the proposed new criteria.

PDF Abstract

VIST

VIST