Weakly Supervised Online Action Detection for Infant General Movements

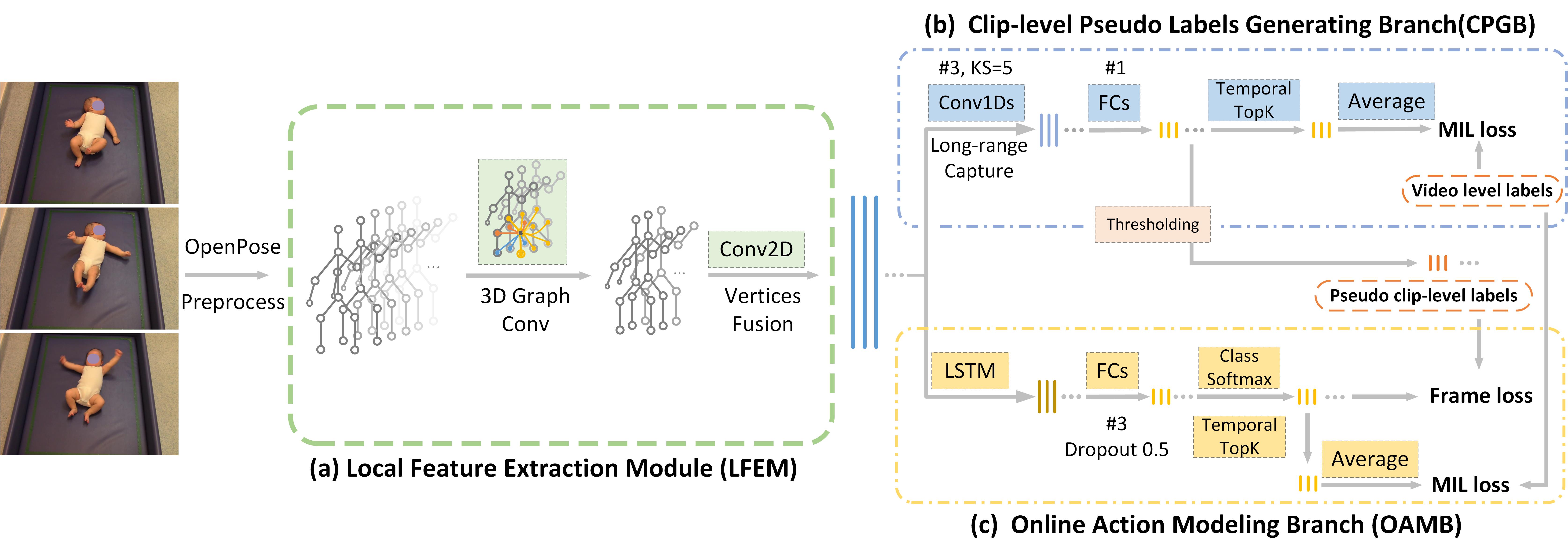

Early diagnosis of brain damage is critical for enabling earlier medical intervention in infants with cerebral palsy (CP). Although general movements assessment (GMA) has shown promising results in early CP detection, it is laborious. Most existing works take videos as input and classify fidgety movements (FMs) to automate GMA. These methods require complete observation of a video and cannot localize the video frames containing normal FMs. We therefore propose a novel approach, WO-GMA, to perform FMs localization in the weakly supervised online setting. Infant body keypoints are first extracted as the inputs to WO-GMA. WO-GMA then performs local spatio-temporal extraction, followed by two network branches that generate pseudo clip labels and model online actions. With the clip-level pseudo labels, the action modeling branch learns to detect FMs in an online fashion. Experimental results on a dataset of 757 videos of different infants show that WO-GMA achieves state-of-the-art video-level classification and clip-level detection results. Moreover, only the first 20% of a video's duration is needed to obtain classification results as good as those from full observation, implying a significantly shortened FMs diagnosis time. Code is available at: https://github.com/scofiedluo/WO-GMA.
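The weakly supervised online idea described above can be illustrated with a minimal sketch. This is not the released WO-GMA code: the function names `pseudo_clip_labels` and `online_video_score`, the 0.5 threshold, and the top-k averaging rule are all assumptions chosen for illustration. The sketch only shows how a video-level label might be turned into clip-level pseudo labels, and how a video-level score might be computed from the first fraction of clips alone.

```python
from typing import List


def pseudo_clip_labels(clip_scores: List[float], video_label: int,
                       threshold: float = 0.5) -> List[int]:
    """Derive clip-level pseudo labels from a video-level label (hypothetical rule).

    A negative video yields all-negative clips. A positive video marks clips
    whose score reaches the threshold, and always keeps at least one positive
    clip (a common multiple-instance assumption, not taken from the paper).
    """
    if video_label == 0:
        return [0] * len(clip_scores)
    labels = [1 if s >= threshold else 0 for s in clip_scores]
    if clip_scores and not any(labels):
        best = max(range(len(clip_scores)), key=lambda i: clip_scores[i])
        labels[best] = 1
    return labels


def online_video_score(clip_probs: List[float], observed_fraction: float,
                       k: int = 3) -> float:
    """Score a video from only its first clips (hypothetical aggregation).

    Uses the mean of the top-k clip probabilities among the clips observed
    so far, so no future clip influences the result.
    """
    if not clip_probs:
        raise ValueError("clip_probs must not be empty")
    n_seen = max(1, int(len(clip_probs) * observed_fraction))
    top = sorted(clip_probs[:n_seen], reverse=True)[:k]
    return sum(top) / len(top)


# Illustrative scores for a 10-clip video (made-up numbers, not real data).
scores = [0.1, 0.8, 0.7, 0.2, 0.9, 0.1, 0.1, 0.1, 0.1, 0.1]
labels = pseudo_clip_labels(scores, video_label=1)
early_score = online_video_score(scores, observed_fraction=0.2, k=2)
```

Here `early_score` depends only on the first two clips, which mirrors the claim that an early portion of the video can suffice for classification. In the actual method, the clip scores would come from learned network branches rather than a fixed list.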
