Visually-Grounded Planning without Vision: Language Models Infer Detailed Plans from High-level Instructions

The recently proposed ALFRED challenge task aims for a virtual robotic agent to complete complex multi-step everyday tasks in a virtual home environment from high-level natural language directives, such as "put a hot piece of bread on a plate". Currently, the best-performing models are able to complete less than 5% of these tasks successfully. In this work we focus on modeling the translation problem of converting natural language directives into detailed multi-step sequences of actions that accomplish those goals in the virtual environment. We empirically demonstrate that it is possible to generate gold multi-step plans from language directives alone without any visual input in 26% of unseen cases. When a small amount of visual information is incorporated, namely the starting location in the virtual environment, our best-performing GPT-2 model successfully generates gold command sequences in 58% of cases. Our results suggest that contextualized language models may provide strong visual semantic planning modules for grounded virtual agents.

PDF Abstract

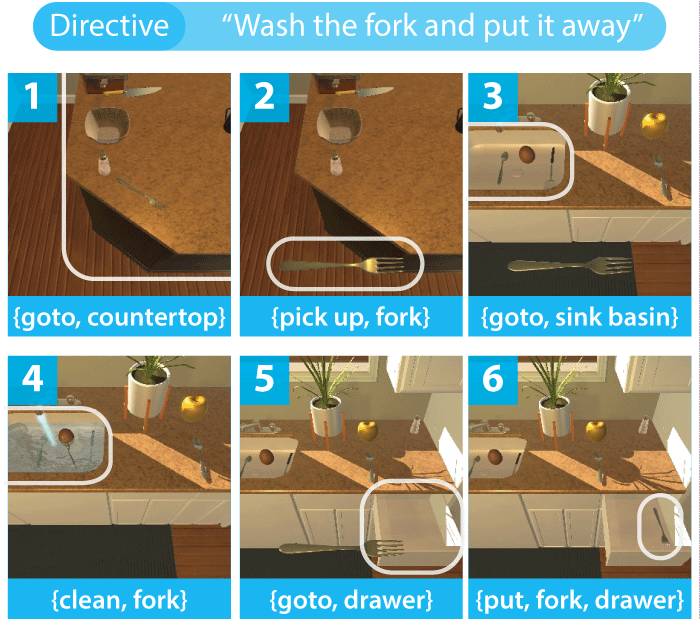

ALFRED

ALFRED