Visualizing Representations of Adversarially Perturbed Inputs

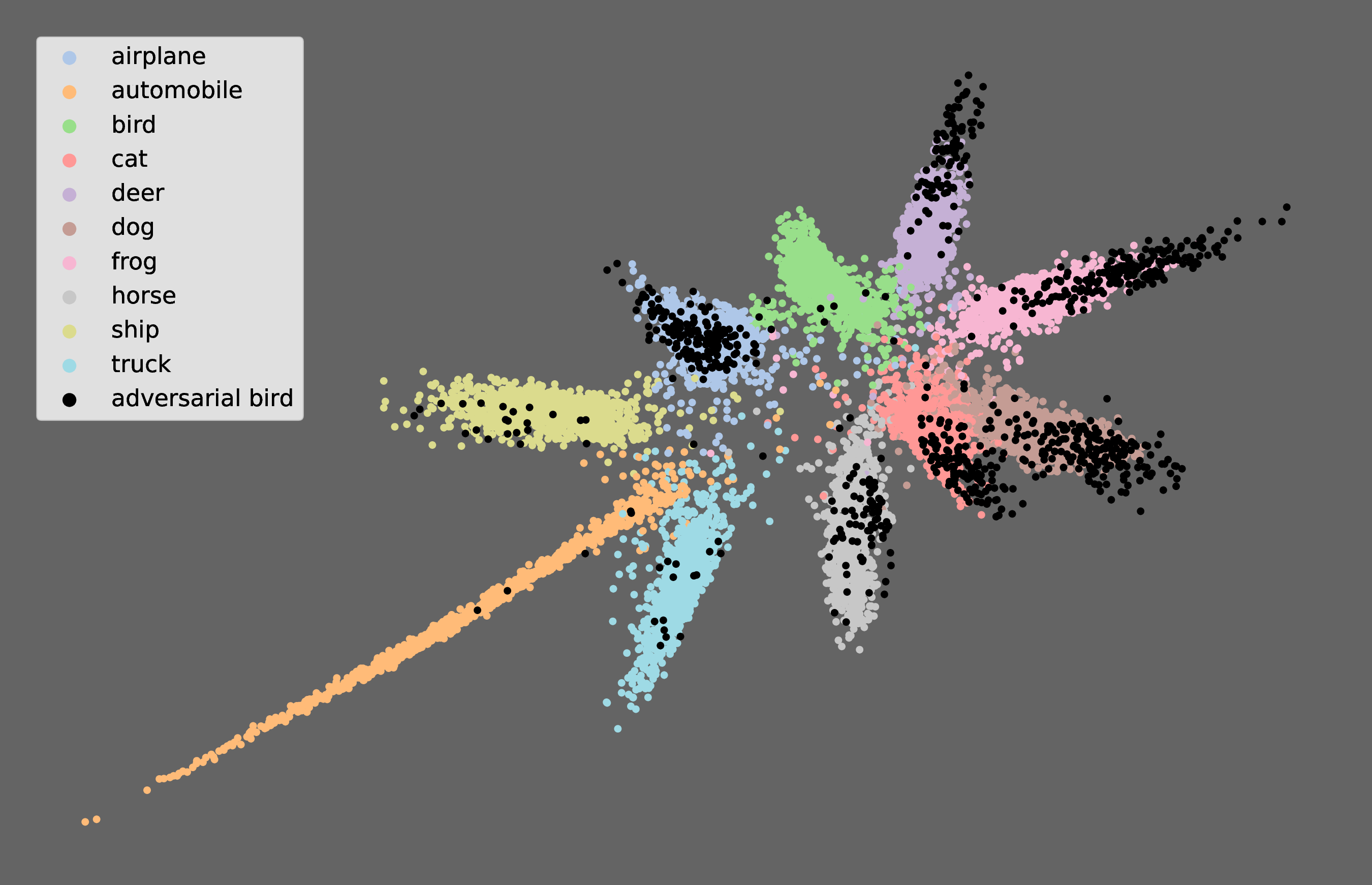

Deep learning models are known to be vulnerable to adversarial attacks. We seek to better understand how such attacks affect the intermediate activations of neural networks. We present an evaluation metric, POP-N, which scores how effectively data are projected to N dimensions in the context of visualizing representations of adversarially perturbed inputs. We conduct experiments on CIFAR-10 to compare the POP-2 scores of several dimensionality reduction algorithms across various adversarial attacks. Finally, we use the 2D projections that achieve high POP-2 scores to generate example visualizations.
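The general pipeline described in the abstract (perturb inputs, collect intermediate activations, project them to 2D) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the toy two-layer network with random weights, the use of FGSM as the attack, PCA as the dimensionality reduction algorithm, and random data in place of CIFAR-10. The sketch does not compute POP-N, since the abstract does not define how the score is calculated.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy network: 32-dim input -> 16 tanh hidden units -> 1 logit.
# Weights are random; this stands in for a trained model.
W1 = rng.normal(scale=0.5, size=(16, 32))
b1 = np.zeros(16)
w2 = rng.normal(scale=0.5, size=16)
b2 = 0.0


def hidden(x):
    """Intermediate activations for a batch x of shape (n, 32)."""
    return np.tanh(x @ W1.T + b1)


def fgsm(x, y, eps):
    """One FGSM step: shift x by eps along the sign of the loss gradient."""
    h = hidden(x)
    p = 1.0 / (1.0 + np.exp(-(h @ w2 + b2)))  # sigmoid output
    dlogit = p - y                            # d(binary cross-entropy)/d(logit)
    dpre = (dlogit[:, None] * w2) * (1.0 - h ** 2)  # back through tanh
    grad_x = dpre @ W1                        # gradient w.r.t. the input
    return x + eps * np.sign(grad_x)


def pca_2d(a):
    """Project the rows of a onto their top two principal components."""
    centered = a - a.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


# Random stand-in data (not CIFAR-10) with binary labels.
x_clean = rng.normal(size=(200, 32))
y = rng.integers(0, 2, size=200).astype(float)
x_adv = fgsm(x_clean, y, eps=0.1)

# Fit one projection on clean and perturbed activations together so both
# sets share the same 2D coordinate system.
acts = np.vstack([hidden(x_clean), hidden(x_adv)])
points_2d = pca_2d(acts)
clean_2d, adv_2d = points_2d[:200], points_2d[200:]
```

Plotting `clean_2d` and `adv_2d` in different colors would give a visualization of the kind the abstract refers to. Fitting the projection jointly on both sets is a design choice of this sketch, not something the abstract specifies.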


CIFAR-10
