Visual Relationship Detection with Language prior and Softmax

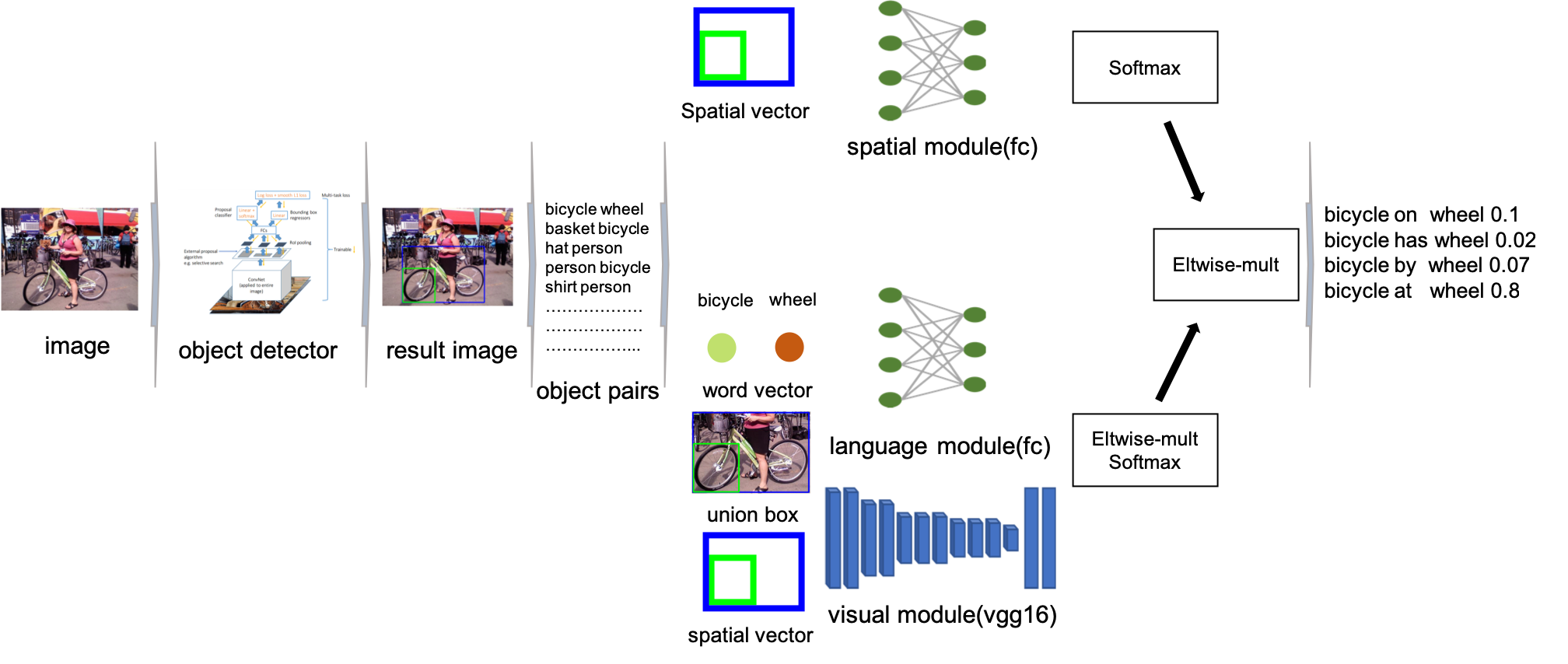

Visual relationship detection is an intermediate image understanding task that detects two objects and classifies a predicate that explains the relationship between them in an image. The three components are linguistically and visually correlated (e.g. "wear" is related to "person" and "shirt", while "laptop" is related to "table" and "on"); thus, the solution space is huge because there are many possible combinations of them. This work exploits language and visual modules and proposes a sophisticated spatial vector. The resulting models outperform the state of the art without costly linguistic knowledge distillation from a large text corpus or complex loss functions. All experiments were evaluated only on the Visual Relationship Detection (VRD) and Visual Genome datasets.
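To give a rough sense of why the solution space is described as huge, the sketch below counts the possible (subject, predicate, object) triplets for a given vocabulary. The function name `triplet_space_size` is hypothetical, and the figures of 100 object categories and 70 predicates are the commonly cited VRD dataset statistics, which are an assumption here since the abstract does not state them.

```python
def triplet_space_size(num_objects: int, num_predicates: int) -> int:
    """Count every (subject, predicate, object) combination.

    Subject and object are each drawn from the same object vocabulary,
    so the count is num_objects * num_predicates * num_objects.
    """
    return num_objects * num_predicates * num_objects


# Assumed VRD vocabulary sizes (not given in the abstract itself).
vrd_size = triplet_space_size(num_objects=100, num_predicates=70)
print(vrd_size)
```

Most of these combinations never occur in practice, which is one reason correlations among the three components can help narrow the search.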

Visual Genome

VRD
