Visibility-aware Multi-view Stereo Network

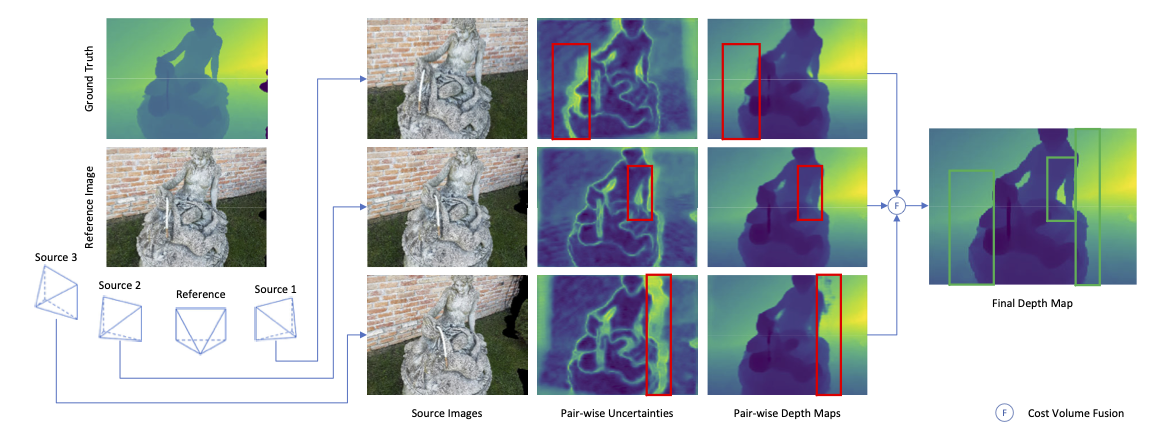

Learning-based multi-view stereo (MVS) methods have demonstrated promising results. However, very few existing networks explicitly take the pixel-wise visibility into consideration, resulting in erroneous cost aggregation from occluded pixels. In this paper, we explicitly infer and integrate the pixel-wise occlusion information in the MVS network via the matching uncertainty estimation. The pair-wise uncertainty map is jointly inferred with the pair-wise depth map, which is further used as weighting guidance during the multi-view cost volume fusion. As such, the adverse influence of occluded pixels is suppressed in the cost fusion. The proposed framework Vis-MVSNet significantly improves depth accuracies in the scenes with severe occlusion. Extensive experiments are performed on DTU, BlendedMVS, and Tanks and Temples datasets to justify the effectiveness of the proposed framework.

PDF Abstract

DTU

DTU

BlendedMVS

BlendedMVS

Tanks and Temples

Tanks and Temples