Video Matting via Sparse and Low-Rank Representation

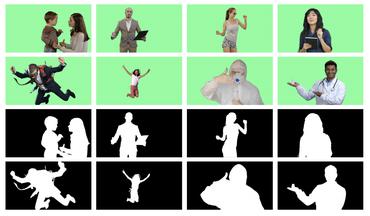

We introduce a novel method for video matting via sparse and low-rank representation. Previous matting methods [10, 9] introduced a nonlocal prior to estimate the alpha matte and achieved impressive results on some data. However, they have two limitations. First, the sample search is not adaptive: gathering too few samples may miss good ones, while gathering too many may introduce noise. Second, it is difficult to construct consistent nonlocal structures for pixels with similar features, which yields spatially and temporally inconsistent video mattes. In this paper, we propose a video matting method that produces spatially and temporally consistent mattes. To this end, we introduce a sparse and low-rank representation model that pursues consistent nonlocal structures for pixels with similar features. The sparse representation adaptively selects the best samples and accurately constructs the nonlocal structure of every pixel, while the low-rank representation globally enforces consistent nonlocal structures for pixels with similar features. The two representations are combined to generate consistent video mattes. Experimental results show that our method achieves high-quality results on a variety of challenging examples featuring illumination changes, feature ambiguity, topology changes, transparency variation, dis-occlusion, fast motion, and motion blur.
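
To make the combination of the two representations concrete, a generic sparse and low-rank coding objective can be written as follows. This is only an illustrative sketch of the standard form such models take, not the exact objective of this paper: the symbols $X$ (features of the pixels to be represented), $D$ (a dictionary of sample features), $W$ (the representation coefficients), and the weights $\lambda$ and $\gamma$ are all assumptions introduced here.

$$
\min_{W} \; \tfrac{1}{2}\,\lVert X - D W \rVert_F^2 \;+\; \lambda\,\lVert W \rVert_1 \;+\; \gamma\,\lVert W \rVert_*
$$

Under these assumptions, the $\ell_1$ term drives most entries of each column of $W$ to zero, so each pixel is reconstructed from only a few selected samples, while the nuclear norm $\lVert W \rVert_*$ (the sum of the singular values of $W$) encourages the coefficient columns of pixels with similar features to be correlated, which is the role the abstract assigns to the low-rank representation.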
