vid-TLDR: Training Free Token merging for Light-weight Video Transformer

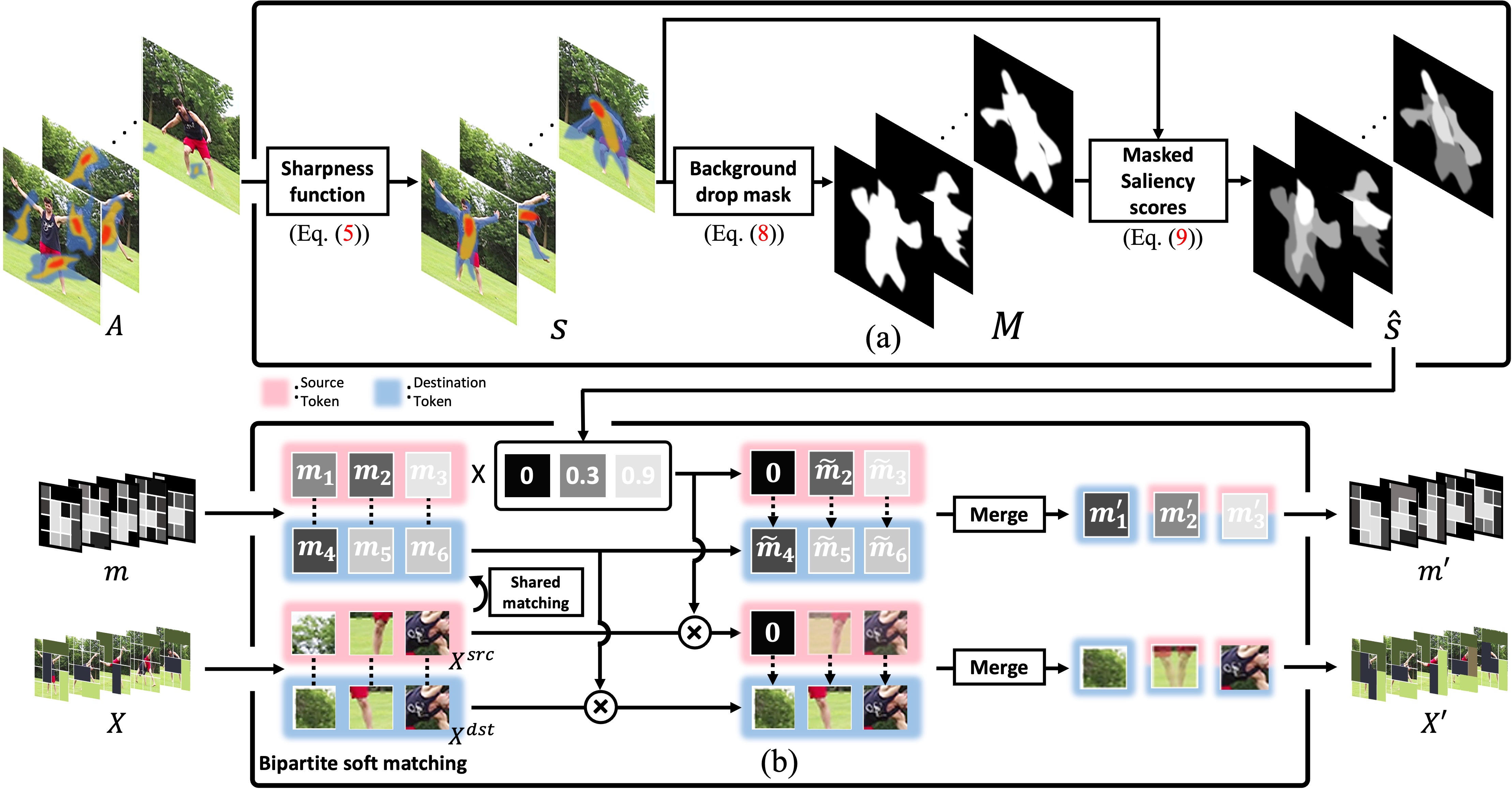

Video Transformers have become the prevalent solution for various video downstream tasks with superior expressive power and flexibility. However, these video transformers suffer from heavy computational costs induced by the massive number of tokens across the entire video frames, which has been the major barrier to training the model. Further, the patches irrelevant to the main contents, e.g., backgrounds, degrade the generalization performance of models. To tackle these issues, we propose training free token merging for lightweight video Transformer (vid-TLDR) that aims to enhance the efficiency of video Transformers by merging the background tokens without additional training. For vid-TLDR, we introduce a novel approach to capture the salient regions in videos only with the attention map. Further, we introduce the saliency-aware token merging strategy by dropping the background tokens and sharpening the object scores. Our experiments show that vid-TLDR significantly mitigates the computational complexity of video Transformers while achieving competitive performance compared to the base model without vid-TLDR. Code is available at https://github.com/mlvlab/vid-TLDR.

PDF AbstractCode

Results from the Paper

Ranked #2 on

Video Retrieval

on SSv2-template retrieval

(using extra training data)

Ranked #2 on

Video Retrieval

on SSv2-template retrieval

(using extra training data)

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Uses Extra Training Data |

Benchmark |

|---|---|---|---|---|---|---|---|

| Zero-Shot Video Retrieval | ActivityNet | vid-TLDR (UMT-L) | text-to-video R@1 | 42.8 | # 3 | ||

| video-to-text R@1 | 41.2 | # 3 | |||||

| text-to-video R@10 | 79.6 | # 5 | |||||

| text-to-video R@5 | 69.4 | # 4 | |||||

| video-to-text R@5 | 68.2 | # 4 | |||||

| video-to-text R@10 | 79.1 | # 4 | |||||

| Video Retrieval | ActivityNet | vid-TLDR (UMT-L) | text-to-video R@1 | 66.7 | # 6 | ||

| text-to-video R@5 | 88.6 | # 4 | |||||

| text-to-video R@10 | 94.4 | # 4 | |||||

| video-to-text R@1 | 63.9 | # 3 | |||||

| video-to-text R@5 | 88.7 | # 2 | |||||

| video-to-text R@10 | 94.5 | # 2 | |||||

| Zero-Shot Video Retrieval | DiDeMo | vid-TLDR (UMT-L) | text-to-video R@1 | 52.0 | # 4 | ||

| text-to-video R@5 | 74.0 | # 4 | |||||

| text-to-video R@10 | 81.0 | # 3 | |||||

| video-to-text R@1 | 52.0 | # 3 | |||||

| video-to-text R@5 | 75.9 | # 3 | |||||

| video-to-text R@10 | 83.8 | # 2 | |||||

| Video Retrieval | DiDeMo | vid-TLDR (UMT-L) | text-to-video R@1 | 72.3 | # 2 | ||

| text-to-video R@5 | 91.2 | # 1 | |||||

| text-to-video R@10 | 94.2 | # 1 | |||||

| video-to-text R@1 | 68.5 | # 2 | |||||

| video-to-text R@10 | 93.8 | # 1 | |||||

| video-to-text R@5 | 89.8 | # 1 | |||||

| Video Retrieval | LSMDC | vid-TLDR (UMT-L) | text-to-video R@1 | 43.1 | # 2 | ||

| text-to-video R@5 | 64.5 | # 3 | |||||

| text-to-video R@10 | 71.4 | # 3 | |||||

| video-to-text R@1 | 40.7 | # 3 | |||||

| video-to-text R@5 | 70.2 | # 2 | |||||

| video-to-text R@10 | 63.6 | # 3 | |||||

| Zero-Shot Video Retrieval | MSR-VTT | vid-TLDR (UMT-L) | text-to-video R@1 | 42.1 | # 8 | ||

| text-to-video R@5 | 63.9 | # 9 | |||||

| text-to-video R@10 | 72.4 | # 10 | |||||

| video-to-text R@1 | 37.7 | # 7 | |||||

| video-to-text R@5 | 59.8 | # 5 | |||||

| video-to-text R@10 | 69.4 | # 6 | |||||

| Video Retrieval | MSR-VTT | vid-TLDR (UMT-L) | text-to-video R@1 | 58.1 | # 5 | ||

| text-to-video R@5 | 81.0 | # 3 | |||||

| text-to-video R@10 | 81.6 | # 10 | |||||

| video-to-text R@1 | 58.7 | # 4 | |||||

| video-to-text R@5 | 81.6 | # 4 | |||||

| video-to-text R@10 | 86.9 | # 4 | |||||

| Visual Question Answering (VQA) | MSRVTT-QA | vid-TLDR (UMT-L) | Accuracy | 0.470 | # 8 | ||

| Video Retrieval | MSVD | vid-TLDR (UMT-L) | text-to-video R@1 | 57.9 | # 5 | ||

| text-to-video R@5 | 83.8 | # 3 | |||||

| text-to-video R@10 | 89.4 | # 4 | |||||

| video-to-text R@1 | 82.7 | # 2 | |||||

| video-to-text R@5 | 94.5 | # 1 | |||||

| video-to-text R@10 | 96.3 | # 3 | |||||

| Zero-Shot Video Retrieval | MSVD | vid-TLDR (UMT-L) | text-to-video R@1 | 50.0 | # 5 | ||

| video-to-text R@1 | 75.7 | # 3 | |||||

| text-to-video R@5 | 77.6 | # 5 | |||||

| text-to-video R@10 | 85.5 | # 6 | |||||

| video-to-text R@5 | 90.0 | # 5 | |||||

| video-to-text R@10 | 95.1 | # 5 | |||||

| Visual Question Answering (VQA) | MSVD-QA | vid-TLDR (UMT-L) | Accuracy | 0.549 | # 14 | ||

| Video Retrieval | SSv2-label retrieval | vid-TLDR (UMT-L) | text-to-video R@1 | 73.1 | # 2 | ||

| text-to-video R@5 | 93.3 | # 1 | |||||

| text-to-video R@10 | 96.6 | # 1 | |||||

| Video Retrieval | SSv2-template retrieval | vid-TLDR (UMT-L) | text-to-video R@1 | 90.2 | # 2 | ||

| text-to-video R@5 | 100.0 | # 1 | |||||

| text-to-video R@10 | 100.0 | # 1 |

UCF101

UCF101

ActivityNet

ActivityNet

MSR-VTT

MSR-VTT

MSVD

MSVD

Something-Something V2

Something-Something V2

DiDeMo

DiDeMo

LSMDC

LSMDC