Vertical Federated Principal Component Analysis and Its Kernel Extension on Feature-wise Distributed Data

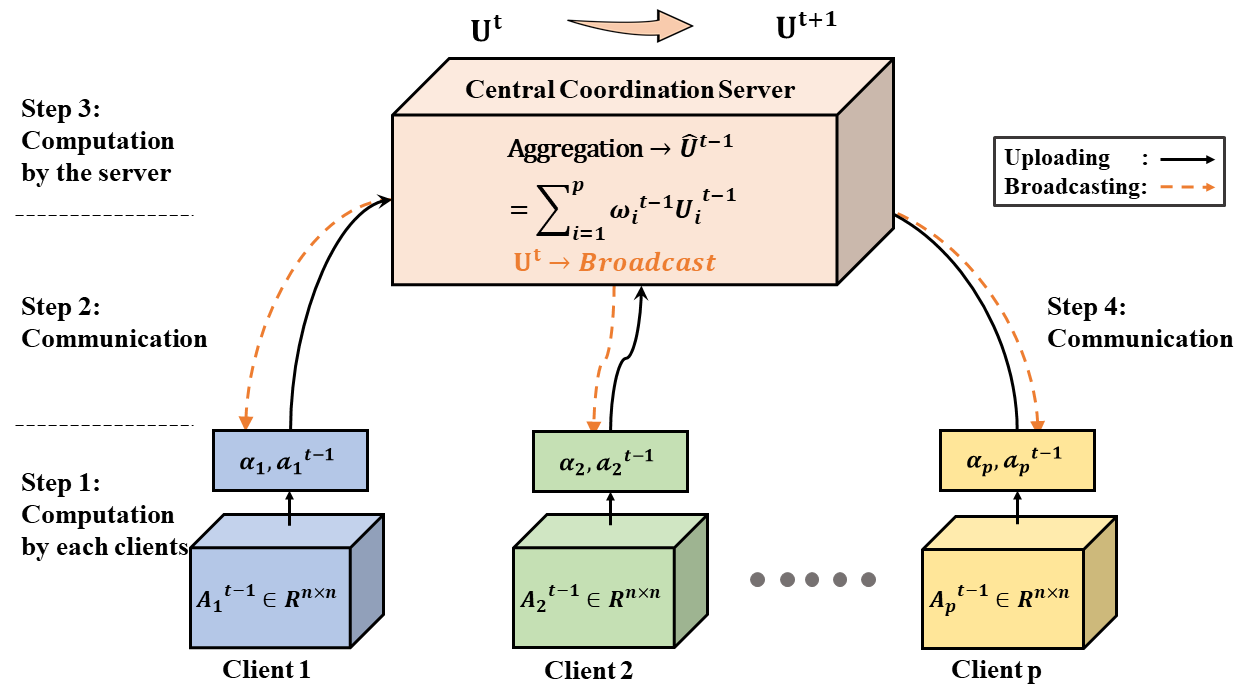

Despite enormous research interest in federated learning (FL) and its rapid application to various areas, existing studies mostly focus on supervised FL in the horizontally partitioned setting. This paper studies unsupervised FL in the vertically partitioned setting, where the features of the data are distributed across clients. We propose federated principal component analysis for vertically partitioned datasets (VFedPCA), which reduces the dimensionality of the joint dataset across all clients and extracts principal component features for downstream data analysis. We further exploit nonlinear dimensionality reduction and propose vertical federated advanced kernel principal component analysis (VFedAKPCA), which collaboratively models the nonlinear structure present in many real datasets. In addition, we study two communication topologies. The first is a server-client topology in which a semi-trusted server coordinates the federated training. The second is a fully decentralized topology that removes the need for a server by letting clients communicate directly with their neighbors. Extensive experiments on five types of real-world datasets corroborate the efficacy of VFedPCA and VFedAKPCA in the vertically partitioned FL setting. Code is available at: https://github.com/juyongjiang/VFedPCA-VFedAKPCA
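
To make the vertically partitioned setting concrete, the following is a minimal sketch and not the VFedPCA algorithm itself. It assumes each client k holds a feature block X_k of the same n samples, so the sample-space Gram matrix decomposes as X Xᵀ = Σ_k X_k X_kᵀ. A coordinating server can then run power iteration by summing per-client products, without any client revealing its raw features. The function names `local_contribution` and `federated_power_iteration`, the synthetic data, and the three-way feature split are all hypothetical choices for illustration.

```python
import numpy as np

def local_contribution(X_k, v):
    # Client side (hypothetical helper): X_k has shape (n_samples, d_k).
    # Returns X_k X_k^T v without forming the n x n Gram matrix.
    return X_k @ (X_k.T @ v)

def federated_power_iteration(client_blocks, n_iter=100, seed=0):
    # Server side (illustrative): estimates the leading eigenvector of
    # sum_k X_k X_k^T, i.e. the leading left singular vector of the joint data.
    rng = np.random.default_rng(seed)
    n = client_blocks[0].shape[0]
    v = rng.standard_normal(n)
    v /= np.linalg.norm(v)
    for _ in range(n_iter):
        # Each client returns an n-dimensional vector; the server sums them.
        w = sum(local_contribution(X_k, v) for X_k in client_blocks)
        v = w / np.linalg.norm(w)
    # Rayleigh quotient gives the matching eigenvalue estimate.
    eigenvalue = v @ sum(local_contribution(X_k, v) for X_k in client_blocks)
    return v, eigenvalue

# Synthetic data (an assumption): one strong latent factor plus noise.
rng = np.random.default_rng(42)
t = rng.standard_normal(50)
X = 2.0 * np.outer(t, np.ones(12)) + rng.standard_normal((50, 12))
X -= X.mean(axis=0)  # centering is per feature, so each client can do it locally

# Vertical partition: three clients hold disjoint feature columns.
blocks = [X[:, :4], X[:, 4:9], X[:, 9:]]
v, lam = federated_power_iteration(blocks)
```

On this toy data the result can be checked against a centralized SVD of `X`: `v` should match the first left singular vector up to sign, and `lam` the squared largest singular value. The sketch exchanges n-dimensional vectors in the clear and makes no privacy claims. It also covers only the server-client topology, not the decentralized one.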

CUHK03