Unsupervised Text-to-Speech Synthesis by Unsupervised Automatic Speech Recognition

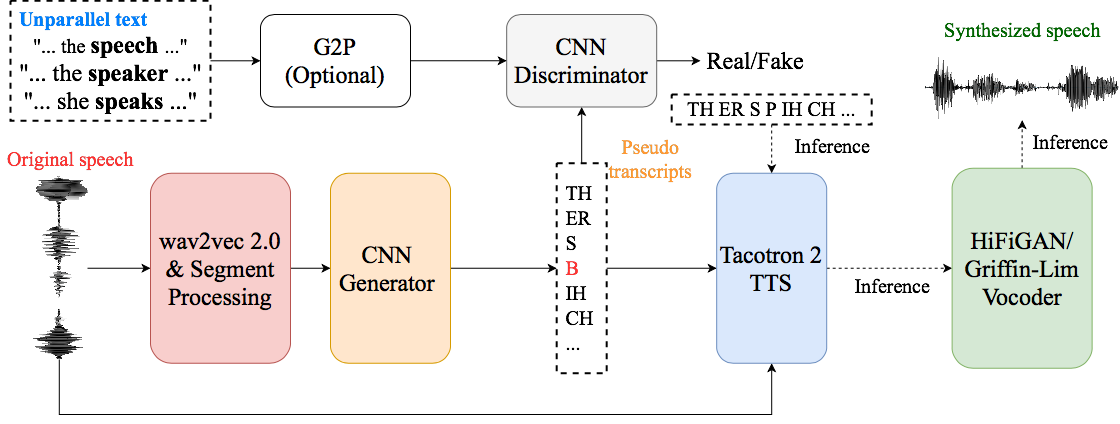

An unsupervised text-to-speech synthesis (TTS) system learns to generate speech waveforms corresponding to any written sentence in a language by observing: 1) a collection of untranscribed speech waveforms in that language; 2) a collection of texts written in that language without access to any transcribed speech. Developing such a system can significantly improve the availability of speech technology to languages without a large amount of parallel speech and text data. This paper proposes an unsupervised TTS system based on an alignment module that outputs pseudo-text and another synthesis module that uses pseudo-text for training and real text for inference. Our unsupervised system can achieve comparable performance to the supervised system in seven languages with about 10-20 hours of speech each. A careful study on the effect of text units and vocoders has also been conducted to better understand what factors may affect unsupervised TTS performance. The samples generated by our models can be found at https://cactuswiththoughts.github.io/UnsupTTS-Demo, and our code can be found at https://github.com/lwang114/UnsupTTS.

PDF Abstract

LibriSpeech

LibriSpeech

LJSpeech

LJSpeech