Unrestricted Black-box Adversarial Attack Using GAN with Limited Queries

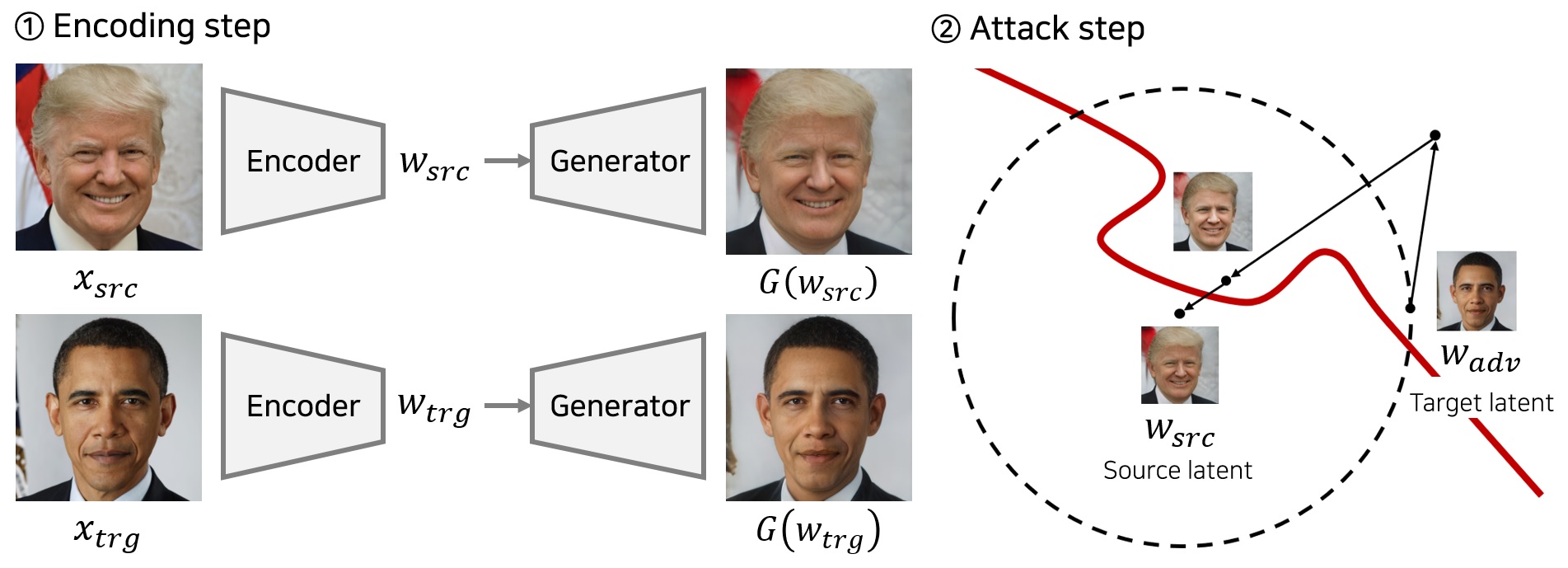

Adversarial examples are inputs intentionally crafted to fool a deep neural network. Recent studies have proposed unrestricted adversarial attacks that are not norm-constrained. However, previous unrestricted attack methods remain limited in their ability to fool real-world applications in a black-box setting. In this paper, we present a novel method for generating unrestricted adversarial examples using a GAN, where the attacker can access only the top-1 final decision of a classification model. Our method, Latent-HSJA, efficiently leverages the advantages of a decision-based attack in the latent space and successfully manipulates latent vectors to fool the classification model. Through extensive experiments, we demonstrate that the proposed method is efficient for evaluating the robustness of classification models with limited queries in a black-box setting. First, we show that our targeted attack is query-efficient in producing unrestricted adversarial examples for a facial identity recognition model with 307 identities. Then, we demonstrate that the proposed method can also successfully attack a real-world celebrity recognition service.
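The abstract describes Latent-HSJA only at a high level: a decision-based attack in the style of HopSkipJumpAttack (HSJA) is run over GAN latent vectors instead of pixels, using nothing but the model's top-1 label. The following Python sketch illustrates that general idea under stated assumptions and is not the authors' implementation. The generator `generate`, the classifier `top1_label`, the `DecisionOracle` wrapper, every dimension, and every hyperparameter are hypothetical toy stand-ins, and the fixed probe radius `delta` simplifies HSJA's adaptive choice. Each iteration binary-searches to the decision boundary, estimates the boundary direction from label-only queries, takes a geometrically shrinking step, and projects back to the boundary.

```python
import numpy as np

# Hypothetical toy stand-ins (NOT the paper's models): a tiny "generator" and a
# classifier that reveals only its top-1 label, mimicking the decision-only setting.
rng = np.random.default_rng(0)
LATENT_DIM, IMAGE_DIM, NUM_CLASSES = 8, 32, 5
W_GEN = rng.normal(size=(IMAGE_DIM, LATENT_DIM))
W_CLS = rng.normal(size=(NUM_CLASSES, IMAGE_DIM))


def generate(z):
    """Toy generator: maps a latent vector to an 'image' vector."""
    return np.tanh(W_GEN @ z)


def top1_label(x):
    """Toy classifier: returns only the arg-max class index, no scores."""
    return int(np.argmax(W_CLS @ x))


class DecisionOracle:
    """phi(z) = True iff G(z) is classified as the target; counts every query."""

    def __init__(self, target):
        self.target = target
        self.queries = 0

    def __call__(self, z):
        self.queries += 1
        return top1_label(generate(z)) == self.target


def binary_search(oracle, z_orig, z_adv, tol=1e-3):
    """Return the point on segment z_orig -> z_adv closest to the boundary (adversarial side)."""
    lo, hi = 0.0, 1.0  # hi always corresponds to an adversarial point
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if oracle((1 - mid) * z_orig + mid * z_adv):
            hi = mid
        else:
            lo = mid
    return (1 - hi) * z_orig + hi * z_adv


def estimate_direction(oracle, z_boundary, num_samples=50, delta=1e-2):
    """Monte Carlo estimate of the boundary normal from top-1 decisions only."""
    u = rng.normal(size=(num_samples, z_boundary.size))
    u /= np.linalg.norm(u, axis=1, keepdims=True)
    signs = np.array([1.0 if oracle(z_boundary + delta * ui) else -1.0 for ui in u])
    signs -= signs.mean()  # baseline subtraction reduces variance
    grad = signs @ u
    norm = np.linalg.norm(grad)
    return grad / norm if norm > 0 else grad


def latent_decision_attack(oracle, z_orig, z_adv_init, iterations=10):
    """HSJA-style loop over latent vectors; returns the closest adversarial latent found."""
    z = binary_search(oracle, z_orig, z_adv_init)
    best = z
    for t in range(1, iterations + 1):
        direction = estimate_direction(oracle, z)
        step = np.linalg.norm(z - z_orig) / np.sqrt(t)
        # Geometric step-size search: halve the step until it stays adversarial.
        candidate = z + step * direction
        is_adv = oracle(candidate)
        while not is_adv and step > 1e-6:
            step /= 2
            candidate = z + step * direction
            is_adv = oracle(candidate)
        if not is_adv:
            break
        z = binary_search(oracle, z_orig, candidate)  # project back to the boundary
        if np.linalg.norm(z - z_orig) < np.linalg.norm(best - z_orig):
            best = z
    return best


# Usage: z_orig plays the source face; z_start stands in for a latent already
# classified as the target identity (hypothetical setup, outside the query budget).
z_orig = rng.normal(size=LATENT_DIM)
source = top1_label(generate(z_orig))
z_start = rng.normal(size=LATENT_DIM)
while top1_label(generate(z_start)) == source:
    z_start = rng.normal(size=LATENT_DIM)
oracle = DecisionOracle(target=top1_label(generate(z_start)))
z_final = latent_decision_attack(oracle, z_orig, z_start)
print("queries used:", oracle.queries)
```

The oracle counts every model query, which is the budget a limited-query attacker must keep small. Tracking the closest adversarial latent found so far guarantees that the result is never farther from `z_orig` than the first boundary point.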


Datasets

Introduced in the Paper:

Celeb-HQ Facial Identity Recognition Dataset

Celeb-HQ Face Gender Recognition Dataset

Used in the Paper:

CelebA-HQ