Unmasked Teacher: Towards Training-Efficient Video Foundation Models

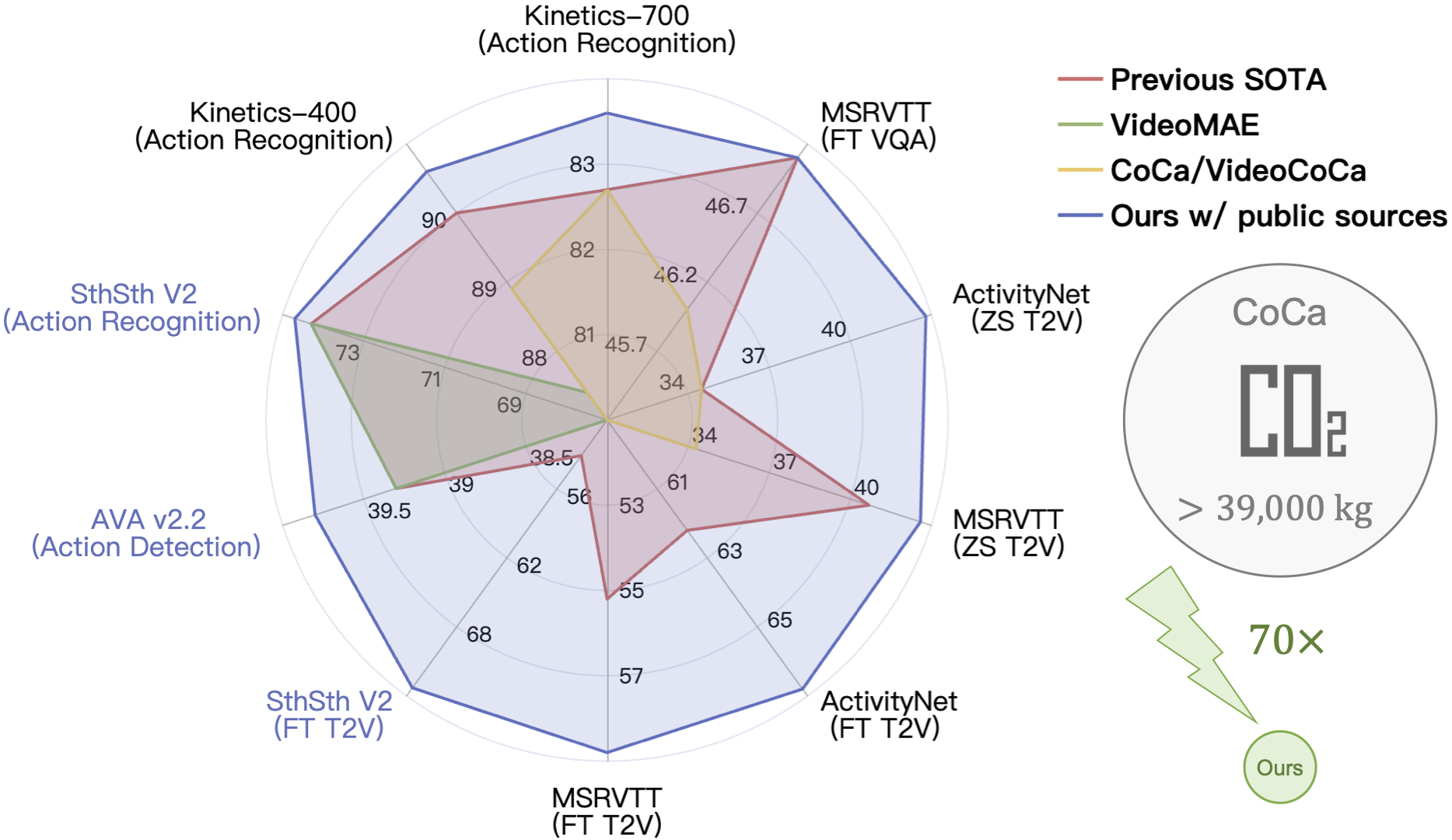

Video Foundation Models (VFMs) have received limited exploration due to high computational costs and data scarcity. Previous VFMs rely on Image Foundation Models (IFMs), which face challenges in transferring to the video domain. Although VideoMAE has trained a robust ViT from limited data, its low-level reconstruction poses convergence difficulties and conflicts with high-level cross-modal alignment. This paper proposes a training-efficient method for temporal-sensitive VFMs that integrates the benefits of existing methods. To increase data efficiency, we mask out most of the low-semantics video tokens but selectively align the unmasked tokens with the IFM, which serves as the UnMasked Teacher (UMT). By providing semantic guidance, our method enables faster convergence and is friendly to multimodal learning. With a progressive pre-training framework, our model can handle various tasks, including scene-related, temporal-related, and complex video-language understanding. Pre-trained for 6 days on 32 A100 GPUs using only public data sources, our scratch-built ViT-L/16 achieves state-of-the-art performance on various video tasks. The code and models will be released at https://github.com/OpenGVLab/unmasked_teacher.
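The core pre-training idea above, keeping only a small set of high-semantic video tokens and aligning the student's features on those tokens with a frozen image teacher, can be sketched in plain Python. This is a minimal, hypothetical sketch, not the released implementation. It assumes that the teacher's per-token attention scores serve as the measure of semantic importance, that the student encodes only the kept tokens, and that alignment uses a mean-squared error between L2-normalized features. The names `select_unmasked` and `alignment_loss`, the `keep_ratio` value, and the toy data are illustrative; projection heads, batched tensors, and the progressive pre-training schedule are omitted.

```python
import math
import random


def select_unmasked(attn_scores, keep_ratio):
    """Return the sorted indices of the tokens kept visible to the student.

    Assumption: attn_scores[i] is the teacher's attention to video token i,
    used here as a proxy for how semantically important the token is.
    The highest-scoring tokens stay unmasked; everything else is masked out.
    """
    n_keep = max(1, round(len(attn_scores) * keep_ratio))
    ranked = sorted(range(len(attn_scores)), key=lambda i: attn_scores[i], reverse=True)
    return sorted(ranked[:n_keep])


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def alignment_loss(student_feats, teacher_feats, keep_idx):
    """Mean squared error between normalized student and teacher features.

    student_feats[k] is the student's output for token keep_idx[k]; the student
    never sees masked tokens. teacher_feats holds features for all tokens.
    """
    total = 0.0
    for s_vec, tok in zip(student_feats, keep_idx):
        s = l2_normalize(s_vec)
        t = l2_normalize(teacher_feats[tok])
        total += sum((a - b) ** 2 for a, b in zip(s, t)) / len(t)
    return total / len(keep_idx)


if __name__ == "__main__":
    random.seed(0)
    num_tokens, dim = 16, 4
    attn = [random.random() for _ in range(num_tokens)]
    keep = select_unmasked(attn, keep_ratio=0.2)  # illustrative: mask ~80% of tokens
    teacher = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_tokens)]
    # Hypothetical student output: noisy copies of the teacher features.
    student = [[x + random.gauss(0.0, 0.1) for x in teacher[i]] for i in keep]
    print("kept tokens:", keep)
    print("alignment loss:", alignment_loss(student, teacher, keep))
```

In this sketch the student's work scales with `keep_ratio`, because masked tokens are never encoded; that is one plausible reading of where the data and compute savings described in the abstract come from.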

ICCV 2023

Results from the Paper

Ranked #1 on Video Retrieval on SSv2-template retrieval (using extra training data)


| Task | Dataset | Model | Metric | Value | Global Rank |
|---|---|---|---|---|---|
| Video Retrieval | ActivityNet | UMT-L (ViT-L/16) | text-to-video R@1 | 66.8 | #5 |
| | | | text-to-video R@5 | 89.1 | #3 |
| | | | text-to-video R@10 | 94.9 | #3 |
| | | | video-to-text R@1 | 64.4 | #2 |
| | | | video-to-text R@5 | 89.1 | #1 |
| | | | video-to-text R@10 | 94.8 | #1 |
| Zero-Shot Video Retrieval | ActivityNet | UMT-L (ViT-L/16) | text-to-video R@1 | 42.8 | #3 |
| | | | text-to-video R@5 | 69.6 | #3 |
| | | | text-to-video R@10 | 79.8 | #4 |
| | | | video-to-text R@1 | 40.7 | #4 |
| | | | video-to-text R@5 | 67.6 | #5 |
| | | | video-to-text R@10 | 78.6 | #5 |
| Video Question Answering | ActivityNet-QA | UMT-L (ViT-L/16) | Accuracy (%) | 47.9 | #10 |
| Action Recognition | AVA v2.2 | UMT-L (ViT-L/16) | mAP | 39.8 | #8 |
| Zero-Shot Video Retrieval | DiDeMo | UMT-L (ViT-L/16) | text-to-video R@1 | 48.6 | #5 |
| | | | text-to-video R@5 | 72.9 | #5 |
| | | | text-to-video R@10 | 79.0 | #6 |
| | | | video-to-text R@1 | 49.9 | #4 |
| | | | video-to-text R@5 | 74.8 | #4 |
| | | | video-to-text R@10 | 81.4 | #4 |
| Video Retrieval | DiDeMo | UMT-L (ViT-L/16) | text-to-video R@1 | 70.4 | #5 |
| | | | text-to-video R@5 | 90.1 | #2 |
| | | | text-to-video R@10 | 93.5 | #2 |
| | | | video-to-text R@1 | 65.7 | #3 |
| | | | video-to-text R@5 | 89.6 | #2 |
| | | | video-to-text R@10 | 93.3 | #2 |
| Action Classification | Kinetics-400 | UMT-L (ViT-L/16) | Acc@1 | 90.6 | #6 |
| | | | Acc@5 | 98.7 | #2 |
| Action Classification | Kinetics-400 | Unmasked Teacher (ViT-L) | Acc@1 | 90.6 | #6 |
| | | | Acc@5 | 98.7 | #2 |
| | | | FLOPs (G) × views | 1434 × 3 × 4 | #1 |
| | | | Parameters (M) | 304 | #26 |
| Action Classification | Kinetics-600 | UMT-L (ViT-L/16) | Top-1 Accuracy | 90.5 | #8 |
| | | | Top-5 Accuracy | 98.8 | #2 |
| Action Classification | Kinetics-700 | UMT-L (ViT-L/16) | Top-1 Accuracy | 83.6 | #5 |
| | | | Top-5 Accuracy | 96.7 | #1 |
| Video Retrieval | LSMDC | UMT-L (ViT-L/16) | text-to-video R@1 | 43.0 | #3 |
| | | | text-to-video R@5 | 65.5 | #2 |
| | | | text-to-video R@10 | 73.0 | #2 |
| | | | video-to-text R@1 | 41.4 | #2 |
| | | | video-to-text R@5 | 64.3 | #3 |
| | | | video-to-text R@10 | 71.5 | #2 |
| Zero-Shot Video Retrieval | LSMDC | UMT-L (ViT-L/16) | text-to-video R@1 | 25.2 | #3 |
| | | | text-to-video R@5 | 43.0 | #4 |
| | | | text-to-video R@10 | 50.5 | #4 |
| | | | video-to-text R@1 | 23.2 | #3 |
| | | | video-to-text R@5 | 37.7 | #3 |
| | | | video-to-text R@10 | 44.2 | #3 |
| Action Classification | MiT | UMT-L (ViT-L/16) | Top-1 Accuracy | 48.7 | #4 |
| | | | Top-5 Accuracy | 78.2 | #1 |
| Video Retrieval | MSR-VTT | UMT-L (ViT-L/16) | text-to-video R@1 | 58.8 | #4 |
| | | | text-to-video R@5 | 81.0 | #3 |
| | | | text-to-video R@10 | 87.1 | #4 |
| | | | video-to-text R@1 | 58.6 | #5 |
| | | | video-to-text R@5 | 81.6 | #4 |
| | | | video-to-text R@10 | 86.5 | #5 |
| Zero-Shot Video Retrieval | MSR-VTT | UMT-L (ViT-L/16) | text-to-video R@1 | 42.6 | #7 |
| | | | text-to-video R@5 | 64.4 | #8 |
| | | | text-to-video R@10 | 73.1 | #8 |
| | | | video-to-text R@1 | 38.6 | #5 |
| | | | video-to-text R@5 | 59.8 | #5 |
| | | | video-to-text R@10 | 69.6 | #5 |
| Visual Question Answering (VQA) | MSRVTT-QA | UMT-L (ViT-L/16) | Accuracy (%) | 47.1 | #6 |
| Zero-Shot Video Retrieval | MSVD | UMT-L (ViT-L/16) | text-to-video R@1 | 49.0 | #6 |
| | | | text-to-video R@5 | 76.9 | #6 |
| | | | text-to-video R@10 | 84.7 | #8 |
| | | | video-to-text R@1 | 74.5 | #4 |
| | | | video-to-text R@5 | 89.7 | #6 |
| | | | video-to-text R@10 | 92.8 | #6 |
| Visual Question Answering (VQA) | MSVD-QA | UMT-L (ViT-L/16) | Accuracy (%) | 55.2 | #13 |
| Video Retrieval | SSv2-label retrieval | UMT-L (ViT-L/16) | text-to-video R@1 | 73.3 | #1 |
| | | | text-to-video R@5 | 92.7 | #2 |
| | | | text-to-video R@10 | 96.6 | #1 |
| Video Retrieval | SSv2-template retrieval | UMT-L (ViT-L/16) | text-to-video R@1 | 90.8 | #1 |
| | | | text-to-video R@5 | 100.0 | #1 |
| | | | text-to-video R@10 | 100.0 | #1 |
| Video Retrieval | VATEX | Unmasked Teacher | text-to-video R@1 | 72.0 | #4 |
| | | | text-to-video R@5 | 95.1 | #3 |
| | | | text-to-video R@10 | 97.8 | #3 |
| | | | video-to-text R@1 | 86.0 | #3 |
| | | | video-to-text R@10 | 99.6 | #1 |
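Most retrieval rows report Recall@K (R@1, R@5, R@10): the percentage of queries whose correct match appears among the top K retrieved candidates. The following is a minimal sketch of that standard metric. It assumes a square similarity matrix in which query i is paired with candidate i; real benchmarks may pair one video with several captions, which this sketch does not handle. `recall_at_k` and `transpose` are illustrative helpers, not part of the UMT code base.

```python
def recall_at_k(sim, k):
    """Percentage of queries whose paired candidate ranks within the top k.

    sim[i][j] is the similarity between query i and candidate j, and the
    correct candidate for query i is assumed to be candidate i.
    Ties are broken optimistically: only strictly higher scores push the
    correct candidate down the ranking.
    """
    hits = 0
    for i, row in enumerate(sim):
        rank = sum(1 for score in row if score > row[i])  # 0 means ranked first
        if rank < k:
            hits += 1
    return 100.0 * hits / len(sim)


def transpose(matrix):
    """Swap rows and columns, turning text-to-video scores into video-to-text."""
    return [list(col) for col in zip(*matrix)]


# Toy similarity matrix: rows are text queries, columns are videos.
sim = [
    [0.9, 0.1, 0.3],  # text 0: its video (column 0) ranks 1st
    [0.8, 0.2, 0.7],  # text 1: its video (column 1) ranks 3rd
    [0.2, 0.4, 0.6],  # text 2: its video (column 2) ranks 1st
]
print("text-to-video R@1:", recall_at_k(sim, 1))             # 2 of 3 queries hit
print("video-to-text R@1:", recall_at_k(transpose(sim), 1))  # 1 of 3 queries hit
```

Transposing the same matrix is what lets one set of similarity scores yield both the text-to-video and video-to-text columns, which is why the two directions can differ for the same model.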

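Two cost figures on this page can be multiplied out as a rough sanity check. The pre-training budget follows directly from the abstract. For the Kinetics-400 FLOPs entry, this assumes that `1434×3×4` means 1434 GFLOPs per view evaluated over 3 × 4 = 12 views per video, the usual reading of FLOPs × views on video-classification leaderboards.

$$
\begin{aligned}
\text{pre-training budget} &= 6\ \text{days} \times 24\ \tfrac{\text{h}}{\text{day}} \times 32\ \text{GPUs} = 4608\ \text{A100 GPU-hours},\\
\text{K400 inference cost per video} &= 1434\ \tfrac{\text{GFLOPs}}{\text{view}} \times (3 \times 4)\ \text{views} = 17{,}208\ \text{GFLOPs} \approx 17.2\ \text{TFLOPs}.
\end{aligned}
$$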
Datasets

- Kinetics
- ActivityNet
- Kinetics 400
- MSR-VTT
- MSVD
- Something-Something V2
- ActivityNet Captions
- DiDeMo
- WebVid
- Kinetics-600
- LSMDC
- CC12M
- VATEX
- AVA
- MiT
- ActivityNet-QA
- Kinetics-700