Understanding Humans in Crowded Scenes: Deep Nested Adversarial Learning and A New Benchmark for Multi-Human Parsing

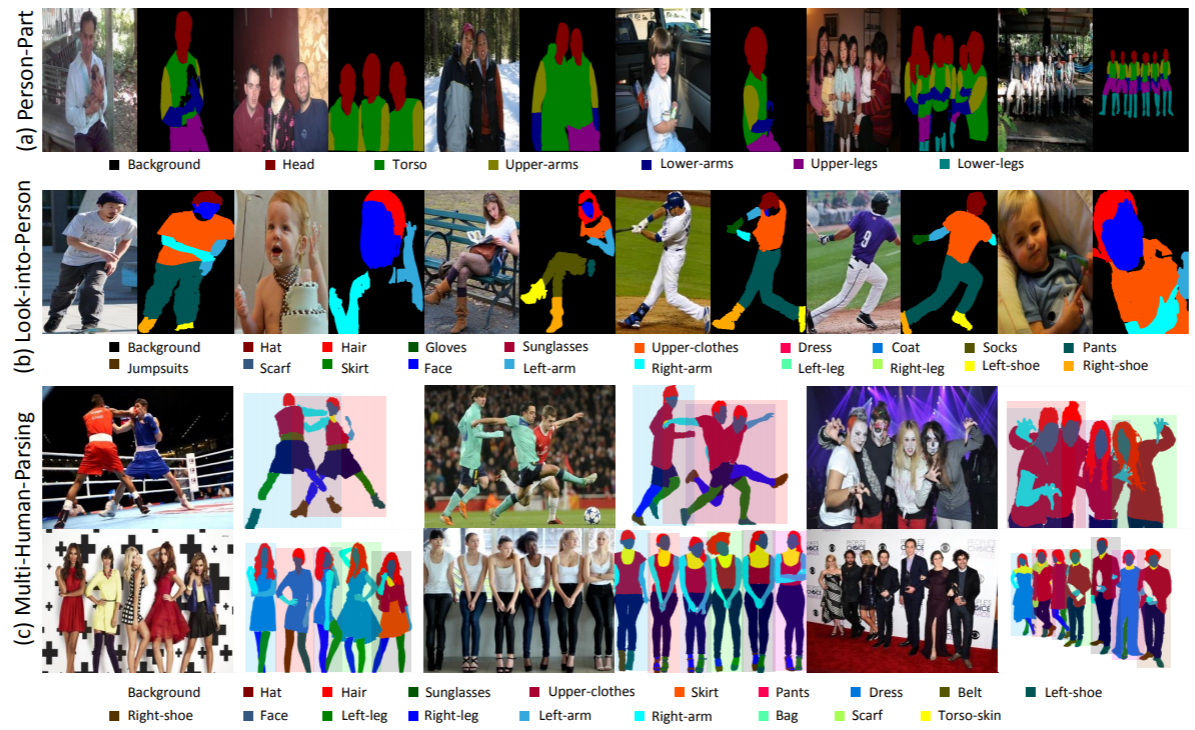

Despite noticeable progress in perceptual tasks such as detection, instance segmentation and human parsing, computers still perform unsatisfactorily at visually understanding humans in crowded scenes, as required by applications such as group behavior analysis, person re-identification and autonomous driving. To this end, models need to comprehensively perceive both the semantic information and the differences between instances in a multi-human image, a problem recently defined as the multi-human parsing task. In this paper, we present a new large-scale database, "Multi-Human Parsing (MHP)", for algorithm development and evaluation, and advance the state of the art in understanding humans in crowded scenes. MHP contains 25,403 elaborately annotated images with 58 fine-grained semantic category labels, involving 2-26 persons per image, captured in real-world scenes with varied viewpoints, poses, occlusions, interactions and backgrounds. We further propose a novel deep Nested Adversarial Network (NAN) model for multi-human parsing. NAN consists of three Generative Adversarial Network (GAN)-like sub-nets, which respectively perform semantic saliency prediction, instance-agnostic parsing and instance-aware clustering. These sub-nets form a nested structure and are carefully designed to be learned jointly in an end-to-end way. NAN consistently outperforms existing state-of-the-art solutions on our MHP and several other datasets, and serves as a strong baseline to drive future research on multi-human parsing.

MS COCO

LIP

PASCAL-Part

MHP
