UMMAFormer: A Universal Multimodal-adaptive Transformer Framework for Temporal Forgery Localization

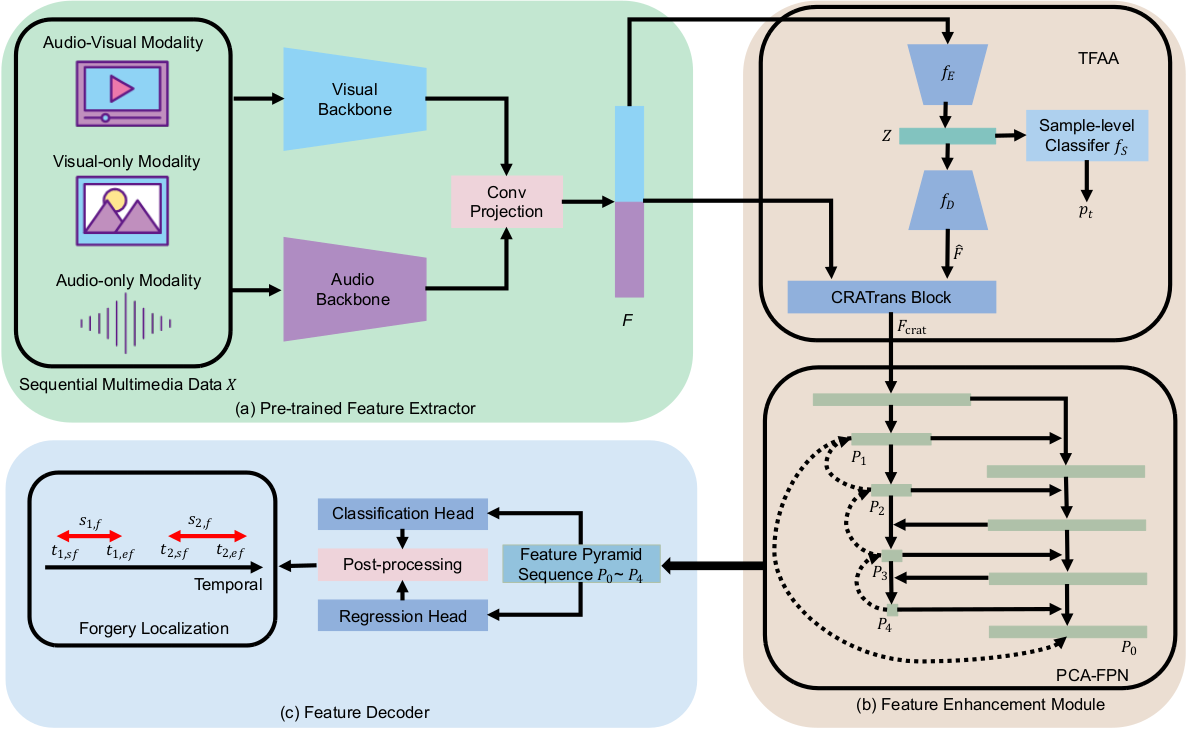

The emergence of artificial intelligence-generated content (AIGC) has raised concerns about the authenticity of multimedia content in various fields. However, existing research on forged content detection has focused mainly on binary classification of complete videos, which limits its applicability in industrial settings. To address this gap, we propose UMMAFormer, a novel universal transformer framework for temporal forgery localization (TFL) that predicts forgery segments with multimodal adaptation. Our approach introduces a Temporal Feature Abnormal Attention (TFAA) module based on temporal feature reconstruction to enhance the detection of temporal differences. We also design a Parallel Cross-Attention Feature Pyramid Network (PCA-FPN) to optimize the Feature Pyramid Network (FPN) for subtle feature enhancement. To evaluate the proposed method, we contribute a novel Temporal Video Inpainting Localization (TVIL) dataset specifically tailored for video inpainting scenes. Our experiments show that our approach achieves state-of-the-art performance on benchmark datasets, including Lav-DF, TVIL, and Psynd, significantly outperforming previous methods. The code and data are available at https://github.com/ymhzyj/UMMAFormer/.
